RTI Connext

Core Libraries and Utilities

User’s Manual

Part 3 — Advanced Concepts

Chapters

Version 5.0

RTI Connext

Core Libraries and Utilities

User’s Manual

Part 3 — Advanced Concepts

Chapters

Version 5.0

© 2012

All rights reserved.

Printed in U.S.A. First printing.

August 2012.

Trademarks

Copy and Use Restrictions

No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form (including electronic, mechanical, photocopy, and facsimile) without the prior written permission of Real- Time Innovations, Inc. The software described in this document is furnished under and subject to the RTI software license agreement. The software may be used or copied only under the terms of the license agreement.

Note: In this section, "the Software" refers to

This product implements the DCPS layer of the Data Distribution Service (DDS) specification version 1.2 and the DDS Interoperability Wire Protocol specification version 2.1, both of which are owned by the Object Management, Inc. Copyright

Portions of this product were developed using ANTLR (www.ANTLR.org). This product includes software developed by the University of California, Berkeley and its contributors.

Portions of this product were developed using AspectJ, which is distributed per the CPL license. AspectJ source code may be obtained from Eclipse. This product includes software developed by the University of California, Berkeley and its contributors.

Portions of this product were developed using MD5 from Aladdin Enterprises.

Portions of this product include software derived from Fnmatch, (c) 1989, 1993, 1994 The Regents of the University of California. All rights reserved. The Regents and contributors provide this software "as is" without warranty.

Portions of this product were developed using EXPAT from Thai Open Source Software Center Ltd and Clark Cooper Copyright (c) 1998, 1999, 2000 Thai Open Source Software Center Ltd and Clark Cooper Copyright (c) 2001, 2002 Expat maintainers. Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions: The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

Technical Support

232 E. Java Drive

Sunnyvale, CA 94089

Phone: |

(408) |

Email: |

support@rti.com |

Website: |

Contents, Part 3

|

|||

|

|||

|

|||

10.3.4 Controlling Heartbeats and Retries with DataWriterProtocol QosPolicy |

|||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

10.3.5 Avoiding Message Storms with DataReaderProtocol QosPolicy |

|||

10.3.6 Resending Samples to |

|||

|

|||

|

|||

|

|||

|

|||

iii

iv

|

|||||

|

|

||||

|

|

|

|||

|

|

|

|||

|

|

||||

|

|

||||

|

|||||

|

|

||||

|

|

|

|||

|

|

|

14.3.1.2 Maintaining DataWriter Liveliness for kinds AUTOMATIC and |

|

|

|

|

|

|

||

|

|

||||

|

|

||||

|

|

||||

|

|||||

|

|||||

|

|

||||

|

|

||||

|

|

14.5.3 Automatic Selection of participant_id and Port Reservation |

|||

|

|

||||

|

|||||

|

|||||

|

|||||

|

|||||

|

Setting Builtin Transport Properties of the Default Transport Instance |

|

|||

|

|

||||

|

Setting Builtin Transport Properties with the PropertyQosPolicy |

||||

|

|

||||

|

|

15.6.2 Setting the Maximum |

|||

|

|

15.6.3 Formatting Rules for IPv6 ‘Allow’ and ‘Deny’ Address Lists |

|||

|

Installing Additional Builtin Transport Plugins with register_transport() |

||||

|

|

||||

|

|

||||

|

|

||||

|

Installing Additional Builtin Transport Plugins with PropertyQosPolicy |

||||

|

|||||

|

|

||||

|

|

||||

|

|

||||

|

|||||

|

|||||

|

|||||

|

|

||||

v

|

|||

|

|||

|

|||

|

|||

|

|||

|

|||

|

|||

|

|||

|

|||

|

|||

|

|||

|

|||

|

|||

|

|||

|

|||

|

|||

|

|||

|

|||

|

|||

|

||

|

||

|

||

|

||

vi

|

|||

|

|||

|

|||

|

|||

20.1.4 |

|

20.2.1 Memory Management for DataReaders Using Generated |

|

20.2.6 |

vii

Chapter 10 Reliable Communications

Connext uses

This chapter includes the following sections:

❏Sending Data Reliably (Section 10.1)

❏Overview of the Reliable Protocol (Section 10.2)

❏Using QosPolicies to Tune the Reliable Protocol (Section 10.3)

10.1Sending Data Reliably

The DCPS reliability model recognizes that the optimal balance between

The QosPolicies provide a way to customize the determinism/reliability

There are two delivery models:

❏

❏Reliable delivery model “Make sure all samples get there, in order.”

10.1.1

By default, Connext uses the

The

10.1.2Reliable Delivery Model

Reliable delivery means the samples are guaranteed to arrive, in the order published.

The DataWriter maintains a send queue with space to hold the last X number of samples sent. Similarly, a DataReader maintains a receive queue with space for consecutive X expected samples.

The send and receive queues are used to temporarily cache samples until Connext is sure the samples have been delivered and are not needed anymore. Connext removes samples from a publication’s send queue after the sample has been acknowledged by all reliable subscriptions. When positive acknowledgements are disabled (see DATA_WRITER_PROTOCOL QosPolicy (DDS Extension) (Section 6.5.3) and DATA_READER_PROTOCOL QosPolicy (DDS Extension) (Section 7.6.1)), samples are removed from the send queue after the corresponding keep- duration has elapsed (see Table 6.36, “DDS_RtpsReliableWriterProtocol_t,” on page

If an

DataReader.

DataWriters can be set up to wait for available queue space when sending samples. This will cause the sending thread to block until there is space in the send queue. (Or, you can decide to sacrifice sending samples reliably so that the sending rate is not compromised.) If the DataWriter is set up to ignore the full queue and sends anyway, then older cached samples will be pushed out of the queue before all DataReaders have received them. In this case, the DataReader (or its Subscriber) is notified of the missing samples through its Listener and/or Conditions.

Connext automatically sends acknowledgments (ACKNACKs) as necessary to maintain reliable communications. The DataWriter may choose to block for a specified duration to wait for these acknowledgments (see Waiting for Acknowledgments in a DataWriter (Section 6.3.11)).

Connext establishes a virtual reliable channel between the matching DataWriter and all DataReaders. This mechanism isolates DataReaders from each other, allows the application to control memory usage, and provides mechanisms for the DataWriter to balance reliability and determinism. Moreover, the use of send and receive queues allows Connext to be implemented efficiently without introducing unnecessary delays in the stream.

Note that a successful return code (DDS_RETCODE_OK) from write() does not necessarily mean that all DataReaders have received the data. It only means that the sample has been added to the DataWriter’s queue. To see if all DataReaders have received the data, look at the RELIABLE_WRITER_CACHE_CHANGED Status (DDS Extension) (Section 6.3.6.7) to see if any samples are unacknowledged.

Suppose DataWriter A reliably publishes a Topic to which DataReaders B and C reliably subscribe. B has space in its queue, but C does not. Will DataWriter A be notified? Will DataReader C receive any error messages or callbacks? The exact behavior depends on the QoS settings:

❏If HISTORY_KEEP_ALL is specified for C, C will reject samples that cannot be put into the queue and request A to resend missing samples. The Listener is notified with the on_sample_rejected() callback (see SAMPLE_REJECTED Status (Section 7.3.7.8)). If A has a queue large enough, or A is no longer writing new samples, A won’t notice unless it checks the RELIABLE_WRITER_CACHE_CHANGED Status (DDS Extension) (Section 6.3.6.7).

❏If HISTORY_KEEP_LAST is specified for C, C will drop old samples and accept new ones. The Listener is notified with the on_sample_lost() callback (see SAMPLE_LOST Status (Section 7.3.7.7)). To A, it is as if all samples have been received by C (that is, they have all been acknowledged).

10.2Overview of the Reliable Protocol

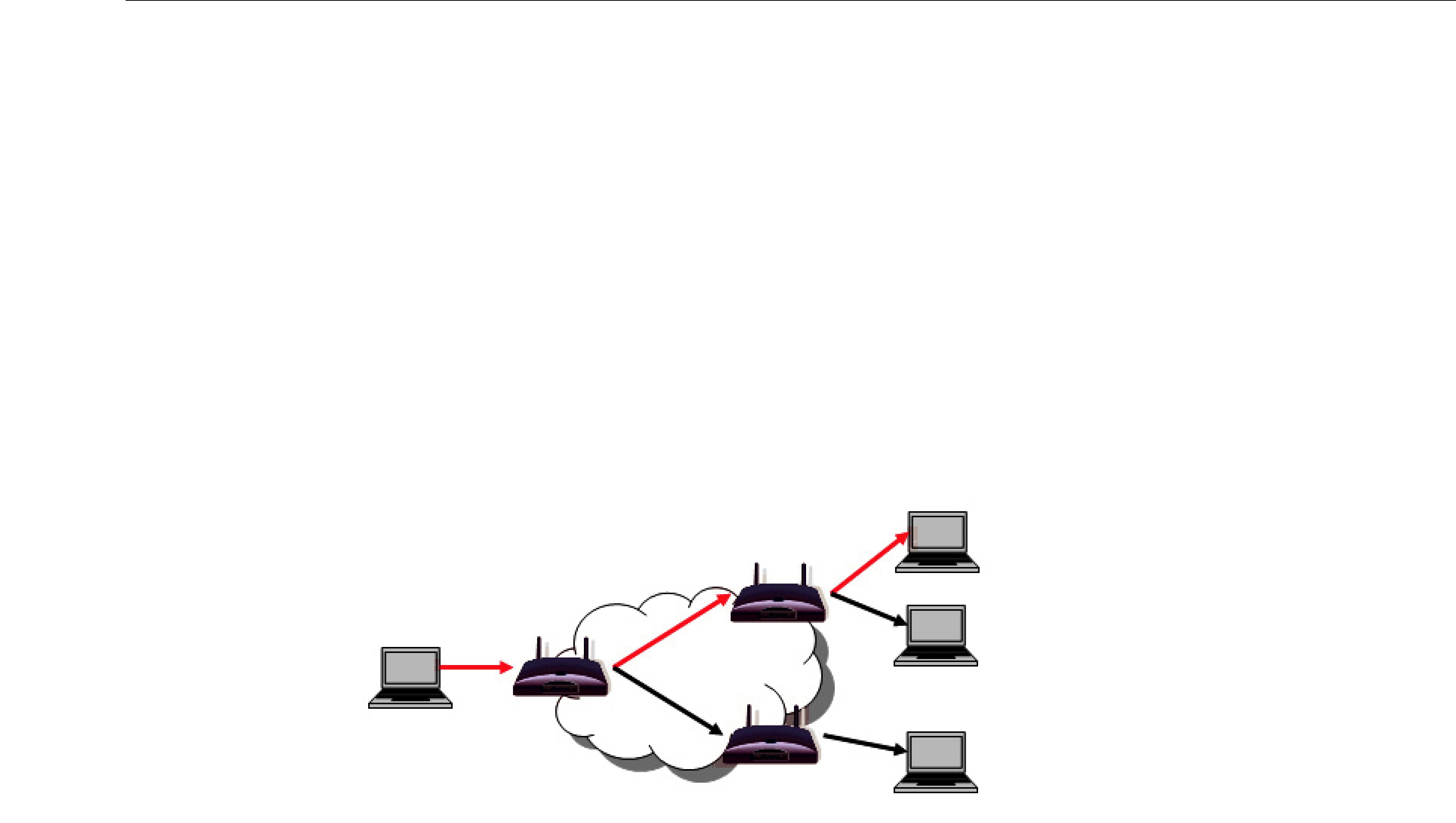

An important advantage of Connext is that it can offer the reliability and other QoS guarantees mandated by DDS on top of a very wide variety of transports, including

In order to work in this wide range of environments, the reliable protocol defined by RTPS is highly configurable with a set of parameters that let the application

The most important features of the RTPS protocol are:

❏Support for both push and pull operating modes

❏Support for both positive and negative acknowledgments

❏Support for high

❏Support for multicast DataReaders

❏Support for

In order to support these features, RTPS uses several types of messages: Data messages (DATA), acknowledgments (ACKNACKs), and heartbeats (HBs).

❏DATA messages contain snapshots of the value of

❏HB messages announce to the DataReader that it should have received all snapshots up to the one tagged with a range of sequence numbers and can also request the DataReader to send an acknowledgement back. For example,

❏ACKNACK messages communicate to the DataWriter that particular snapshots have been successfully stored in the DataReader’s history. ACKNACKs also tell the DataWriter which snapshots are missing on the DataReader side. The ACKNACK message includes a set of sequence numbers represented as a bit map. The sequence numbers indicate which ones the DataReader is missing. (The bit map contains the base sequence number that has not been received, followed by the number of bits in bit map and the optional bit map.

The maximum size of the bit map is 256.) All numbers up to (not including) those in the set are considered positively acknowledged. They are represented in Figure 10.1 through Figure 10.7 as

1. For a link to the RTPS specification, see the RTI website, www.rti.com.

missing>). For example, ACKNACK(4) indicates that the snapshots with sequence numbers 1, 2, and 3 have been successfully stored in the DataReader history, and that 4 has not been received.

It is important to note that Connext can bundle multiple of the above messages within a single network packet. This ‘submessage bundling’ provides for higher performance communications.

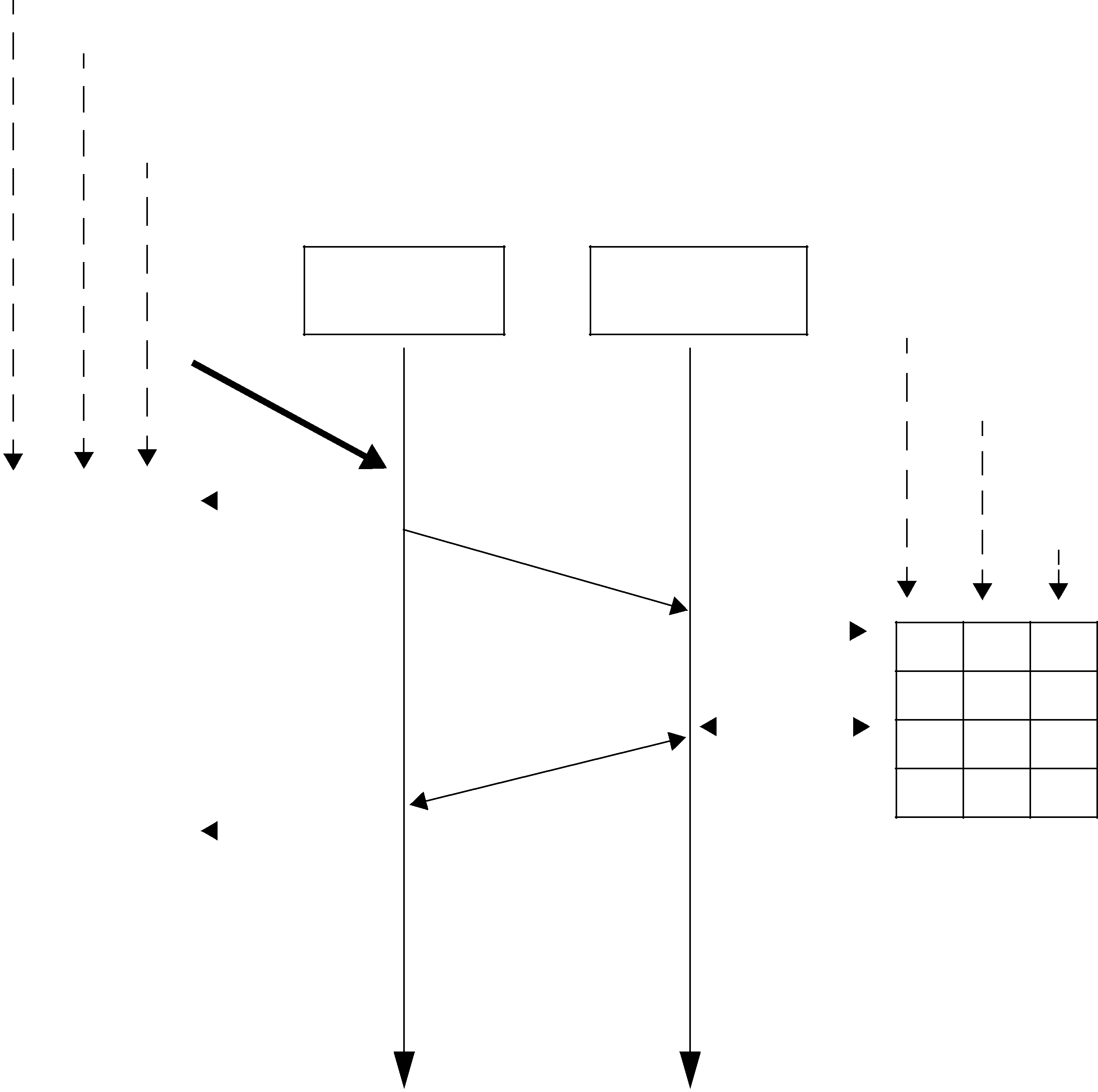

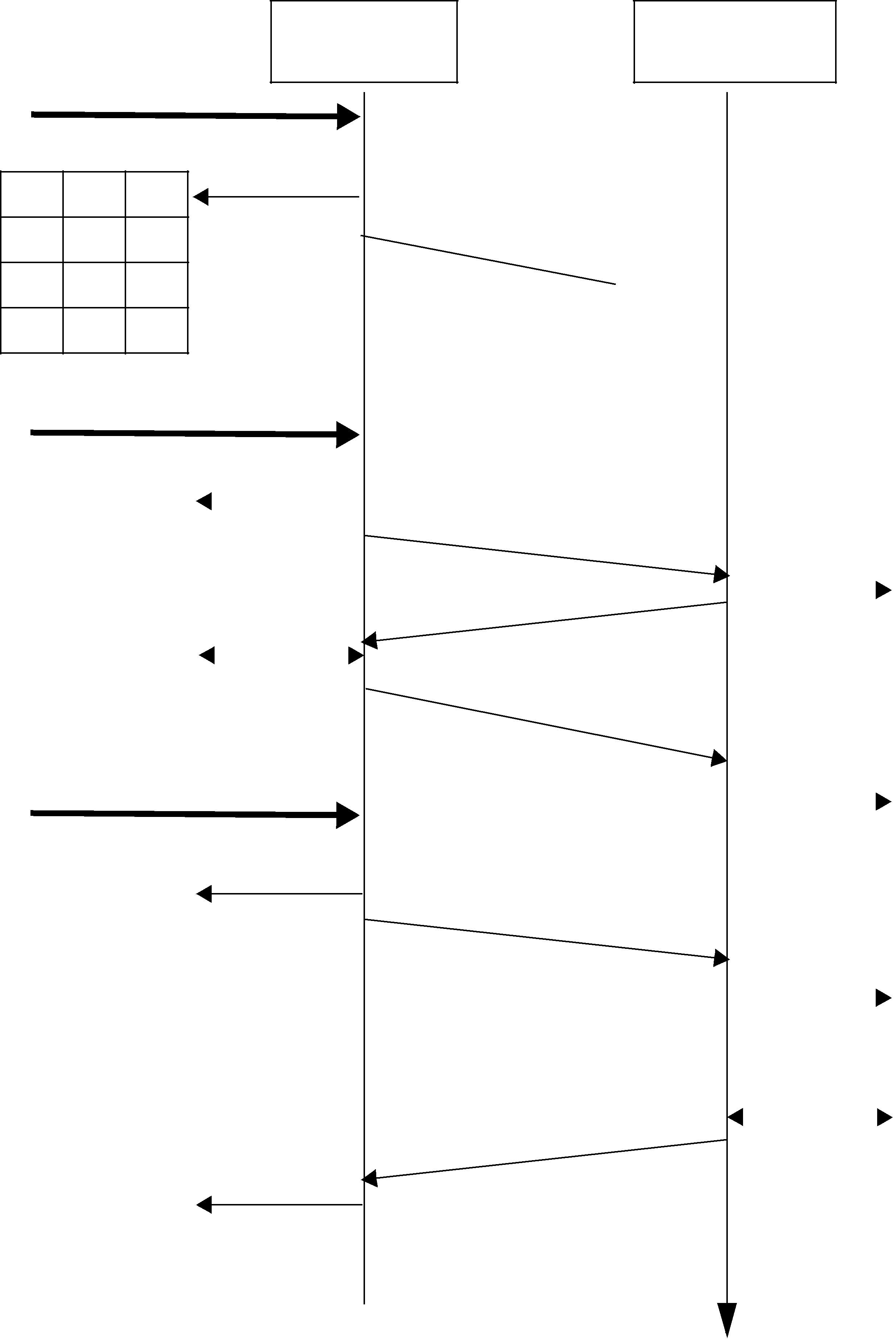

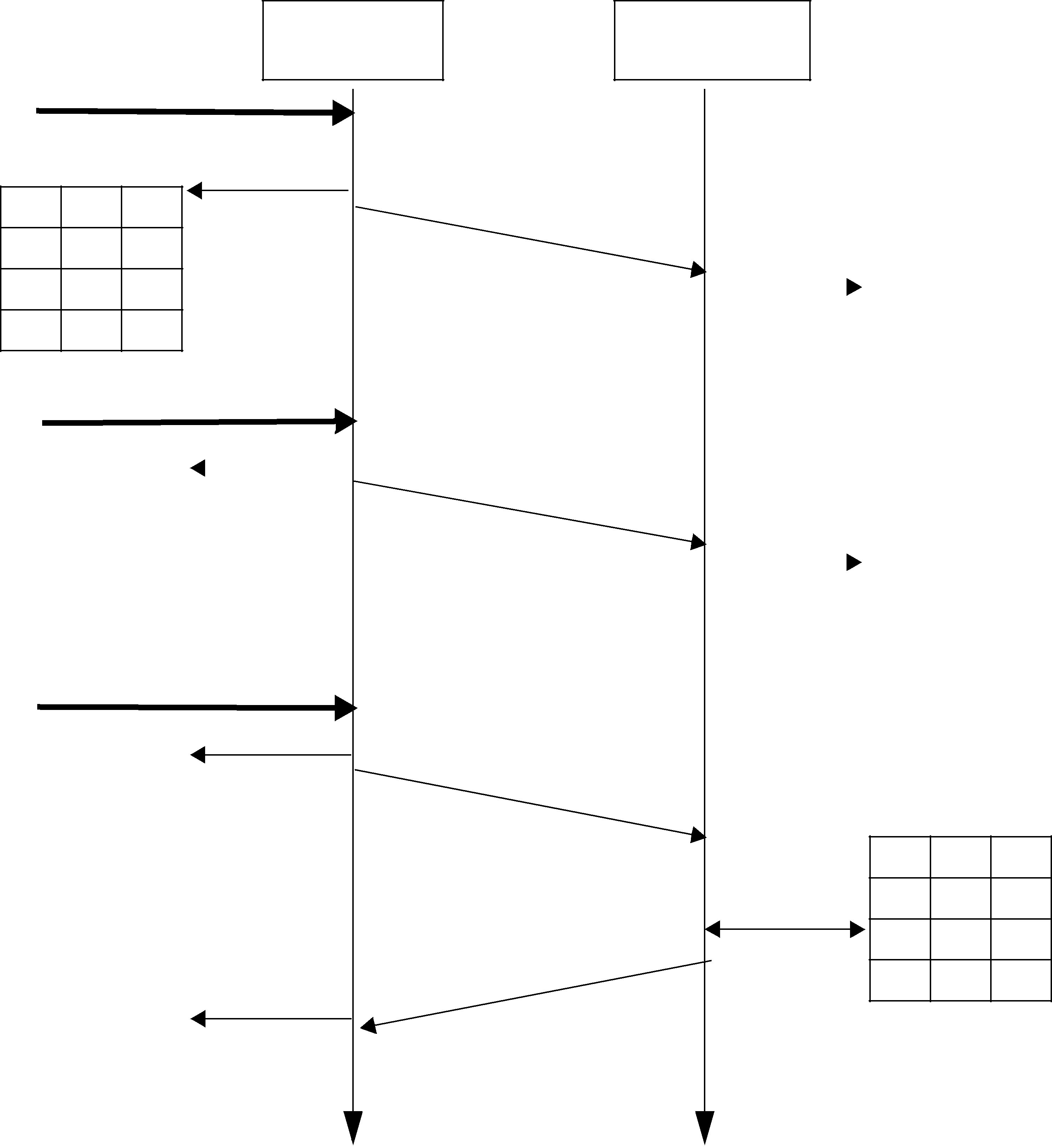

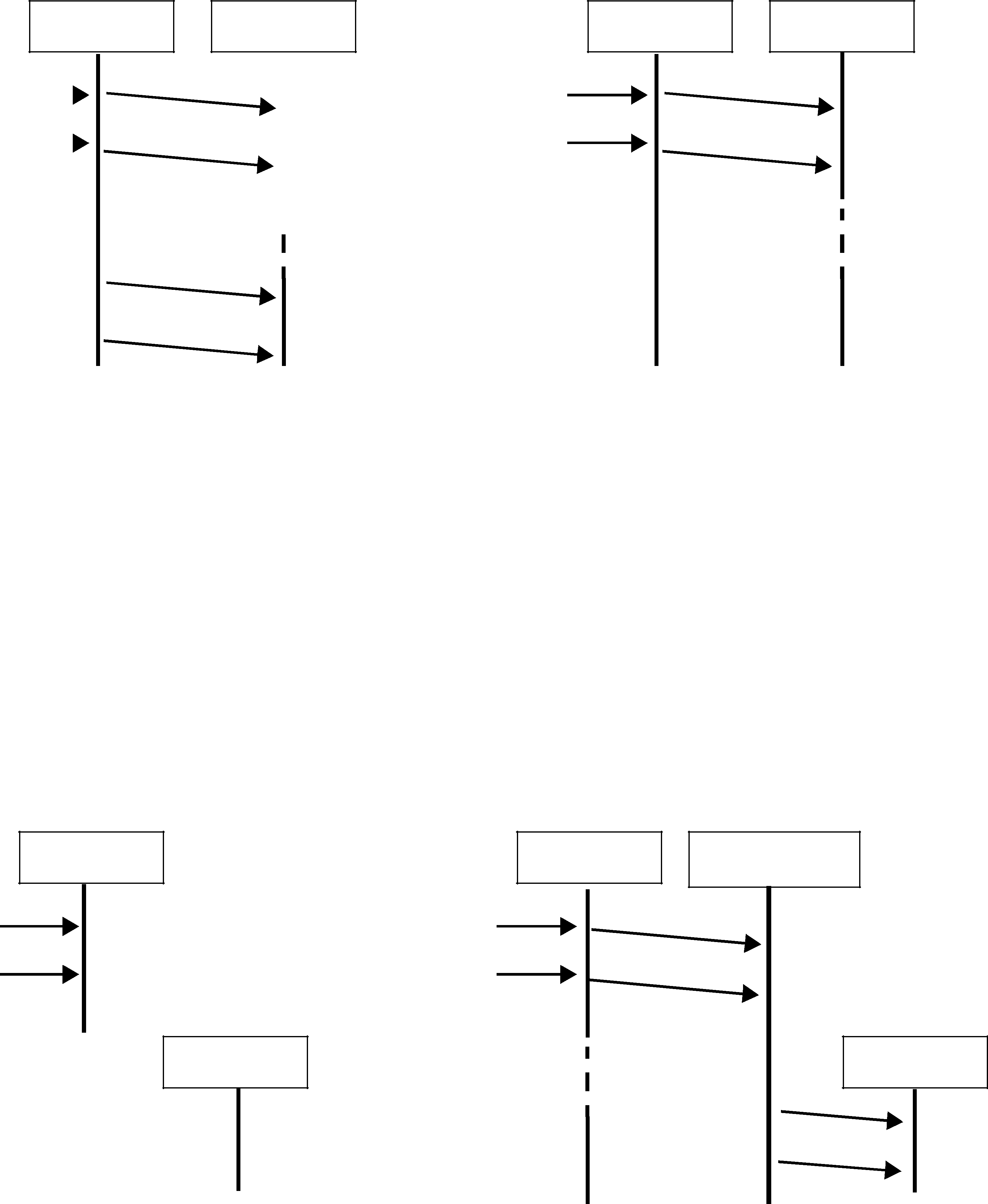

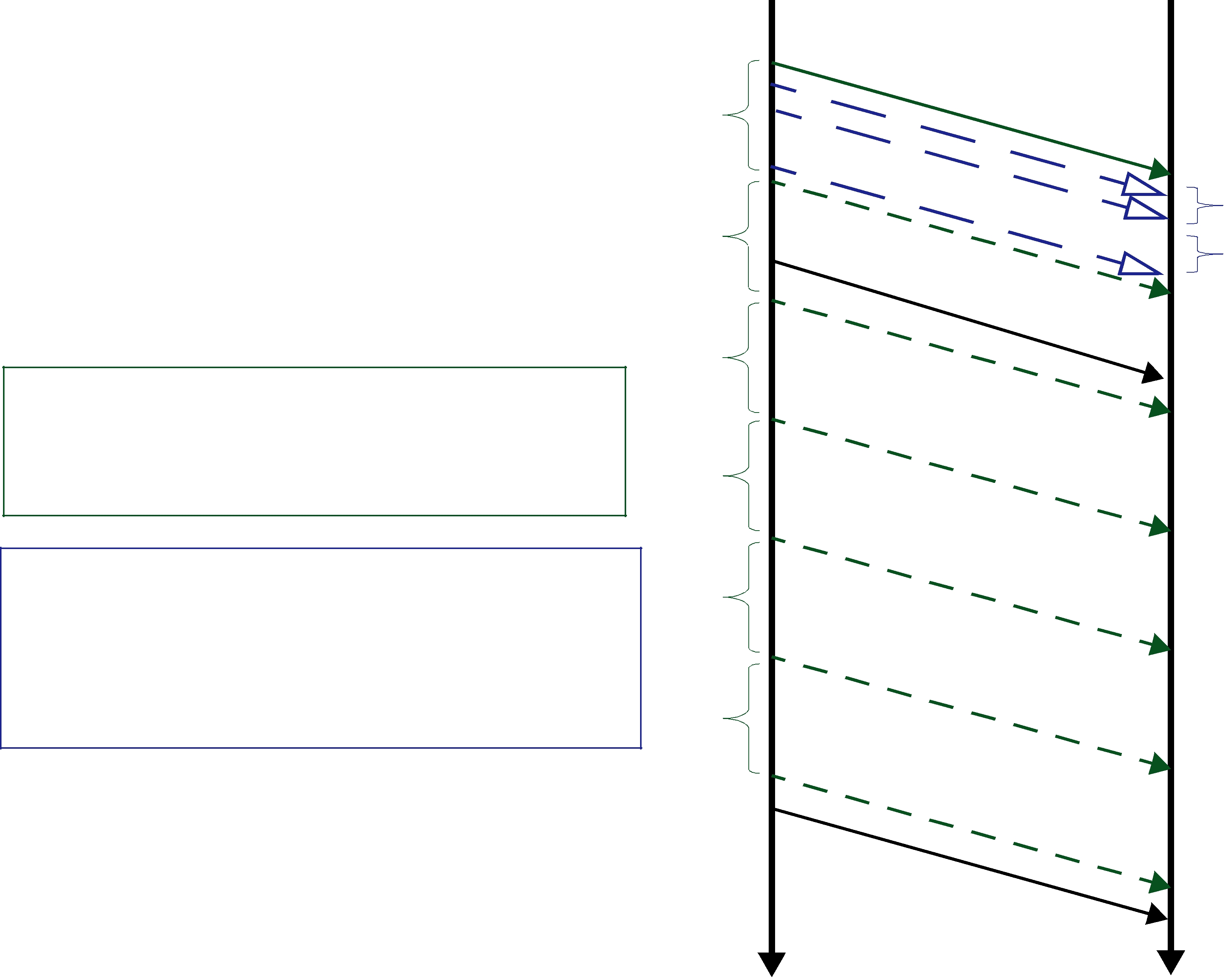

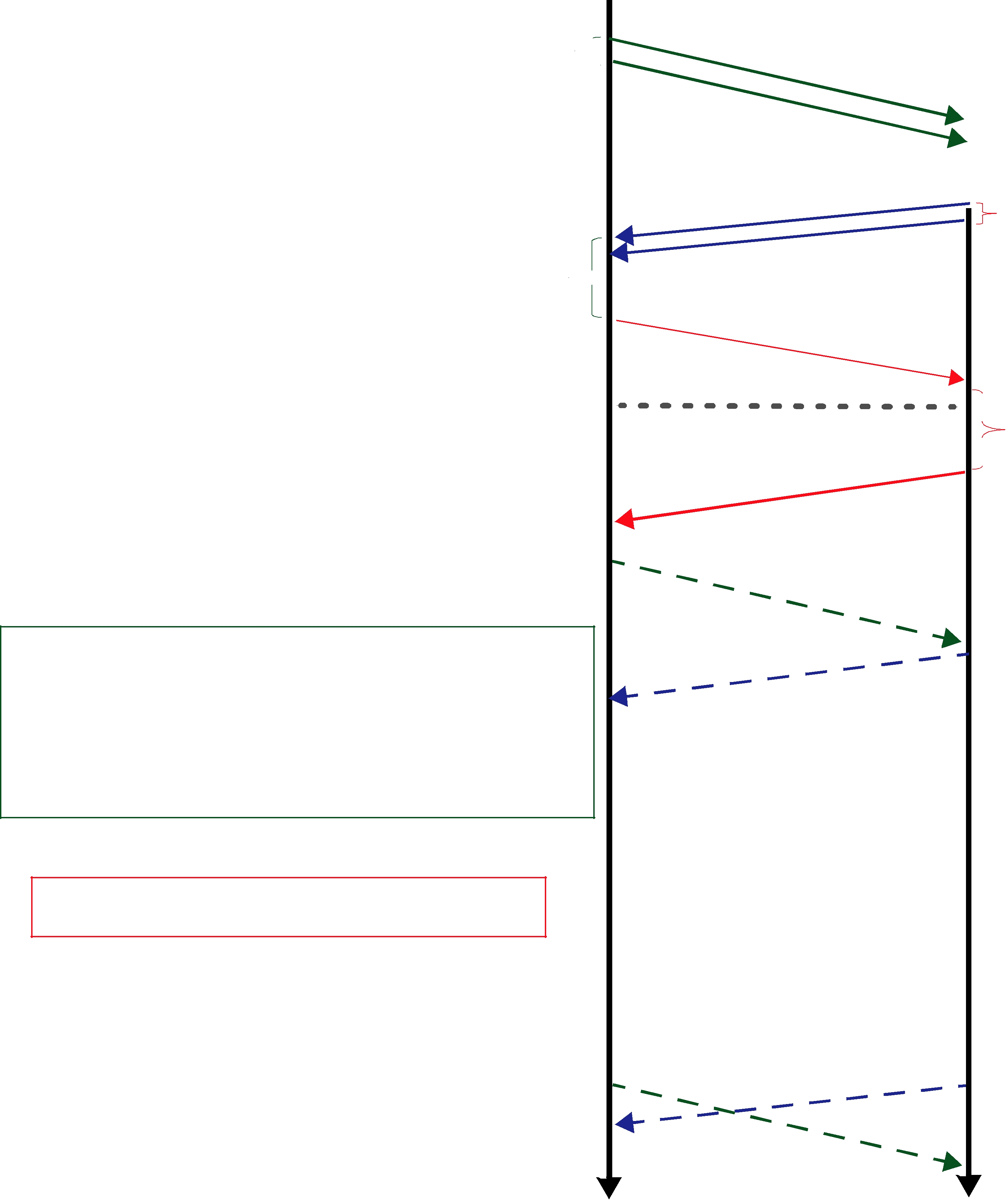

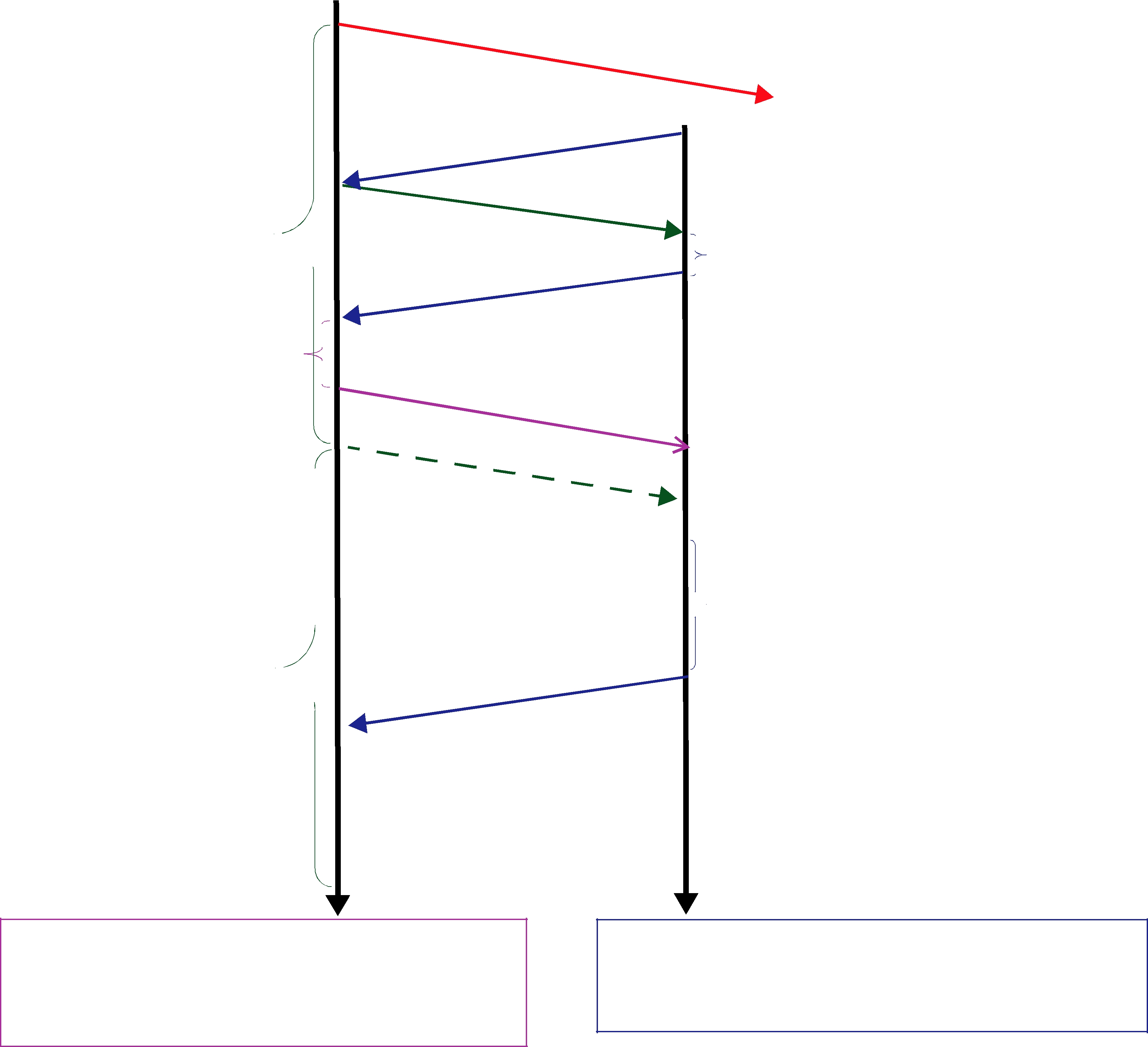

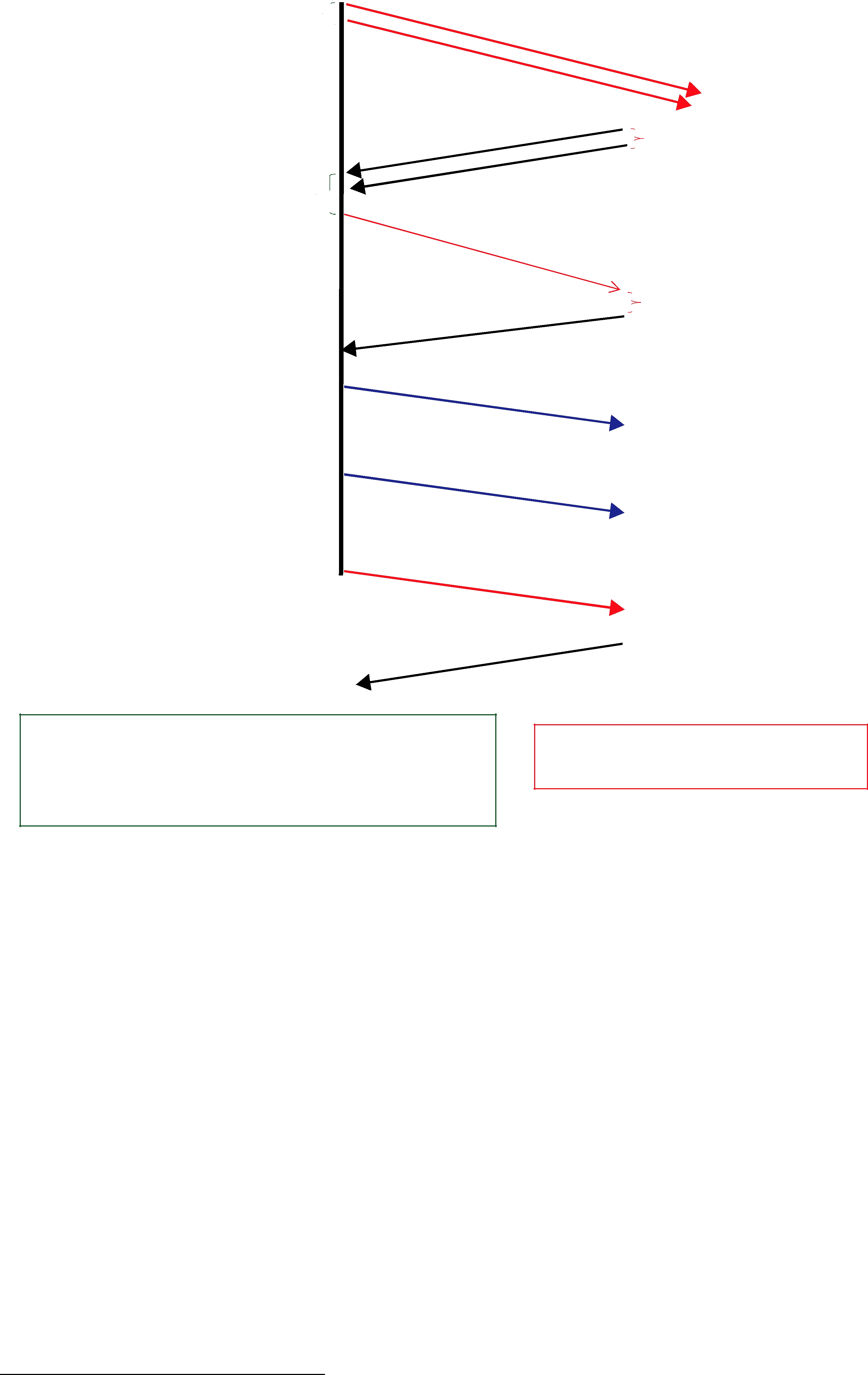

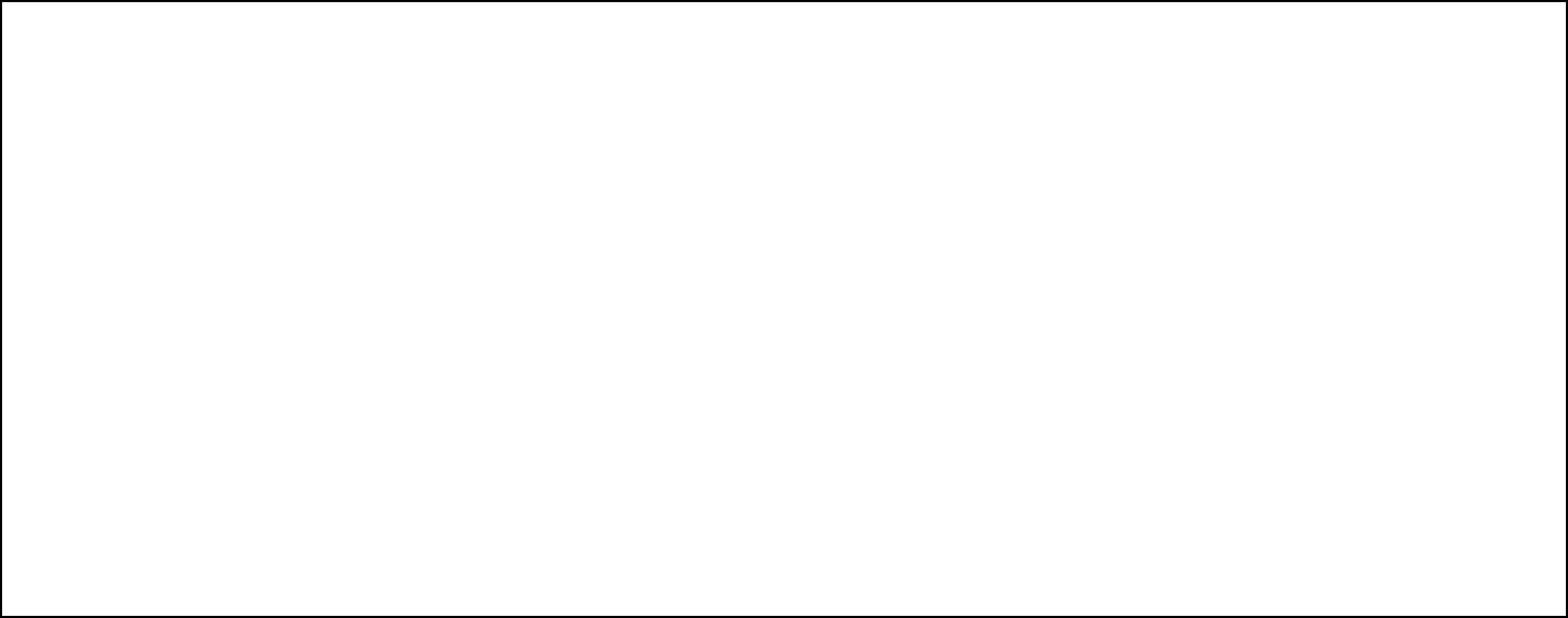

Figure 10.1 Basic RTPS Reliable Protocol

Assigned sequence number

History of send data values

Whether or not the sample has been delivered to the reader history

DataWriter DataReader

write

(A)

1 |

A |

X |

|

|

DATA (A,1); |

|

||

|

cache |

HB (1) |

|

|||||

|

|

|

|

|

||||

|

|

|

|

|

|

|

|

|

|

|

|

|

(A, 1) |

|

|

cache |

|

|

|

|

|

|

|

|

(A, 1) |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

check

(1)

|

|

|

|

|

|

ACKNACK |

1 |

A |

4 |

|

|

|

(2) |

|

acked |

|||||

|

|

|

|

|

||

|

|

|

|

|||

|

|

|

(1) |

|

|

|

|

|

|

|

|

time |

time |

|

|

|

|

|

||

|

|

|

|

|

||

|

|

|

||||

Assigned sequence number

DataReader history

Whether or not the sample is available for the application to read/take

1 A 4

Figure 10.1 illustrates the basic behavior of the protocol when an application calls the write() operation on a DataWriter that is associated with a DataReader. As mentioned, the RTPS protocol can bundle multiple submessages into a single network packet. In Figure 10.1 this feature is used to piggyback a HB message to the DATA message. Note that before the message is sent, the data is given a sequence number (1 in this case) which is stored in the DataWriter’s send queue. As soon as the message is received by the DataReader, it places it into the DataReader’s receive queue. From the sequence number the DataReader can tell that it has not missed any messages and therefore it can make the data available immediately to the user (and call the DataReaderListener). This is indicated by the “✔” symbol. The reception of the HB(1) causes the DataReader to check that it has indeed received all updates up to and including the one with sequenceNumber=1. Since this is true, it replies with an ACKNACK(2) to positively acknowledge all messages up to (but not including) sequence number 2. The DataWriter notes that the update has been acknowledged, so it no longer needs to be retained in its send queue. This is indicated by the “✔” symbol.

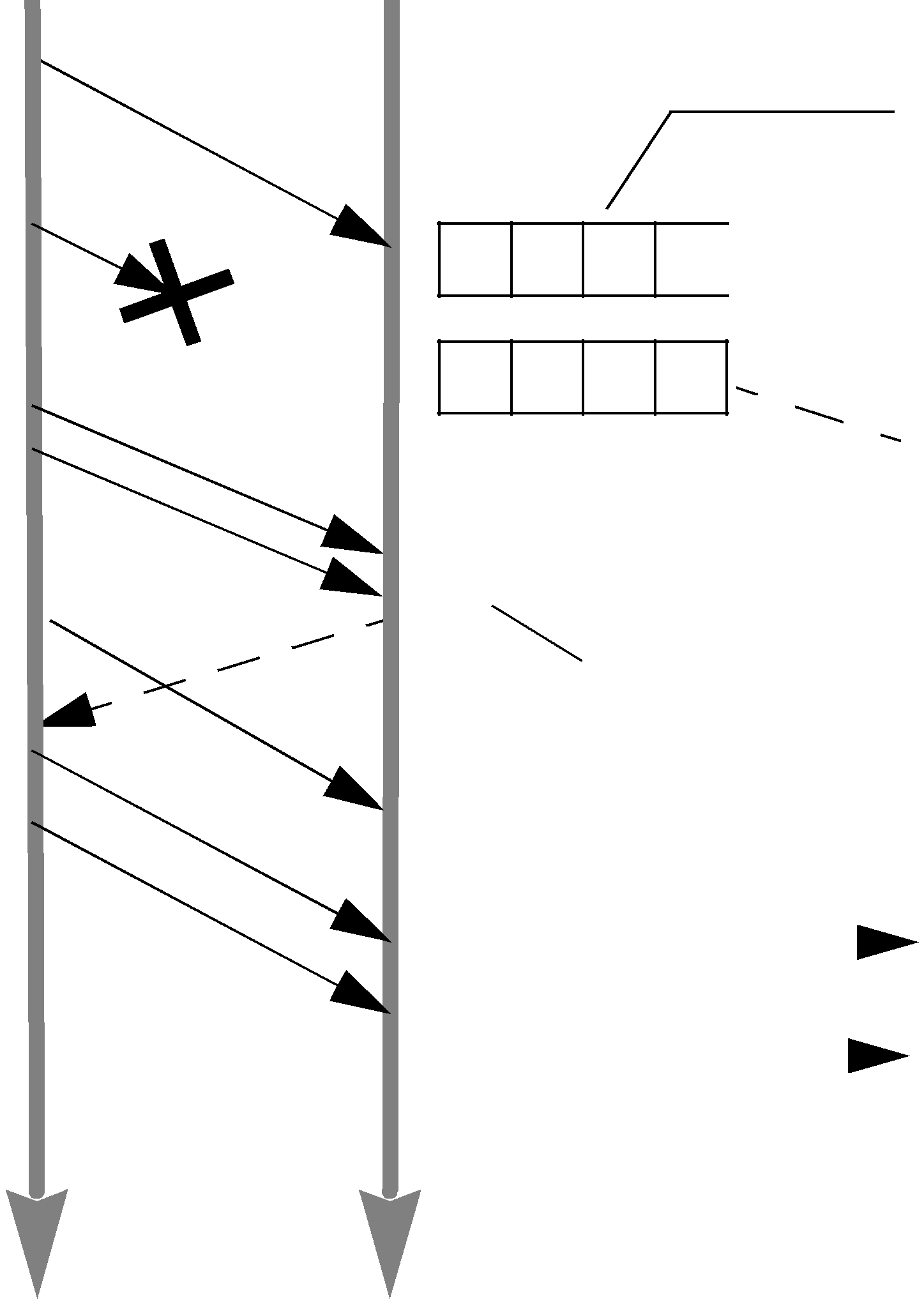

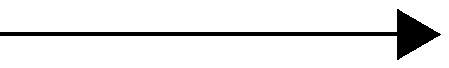

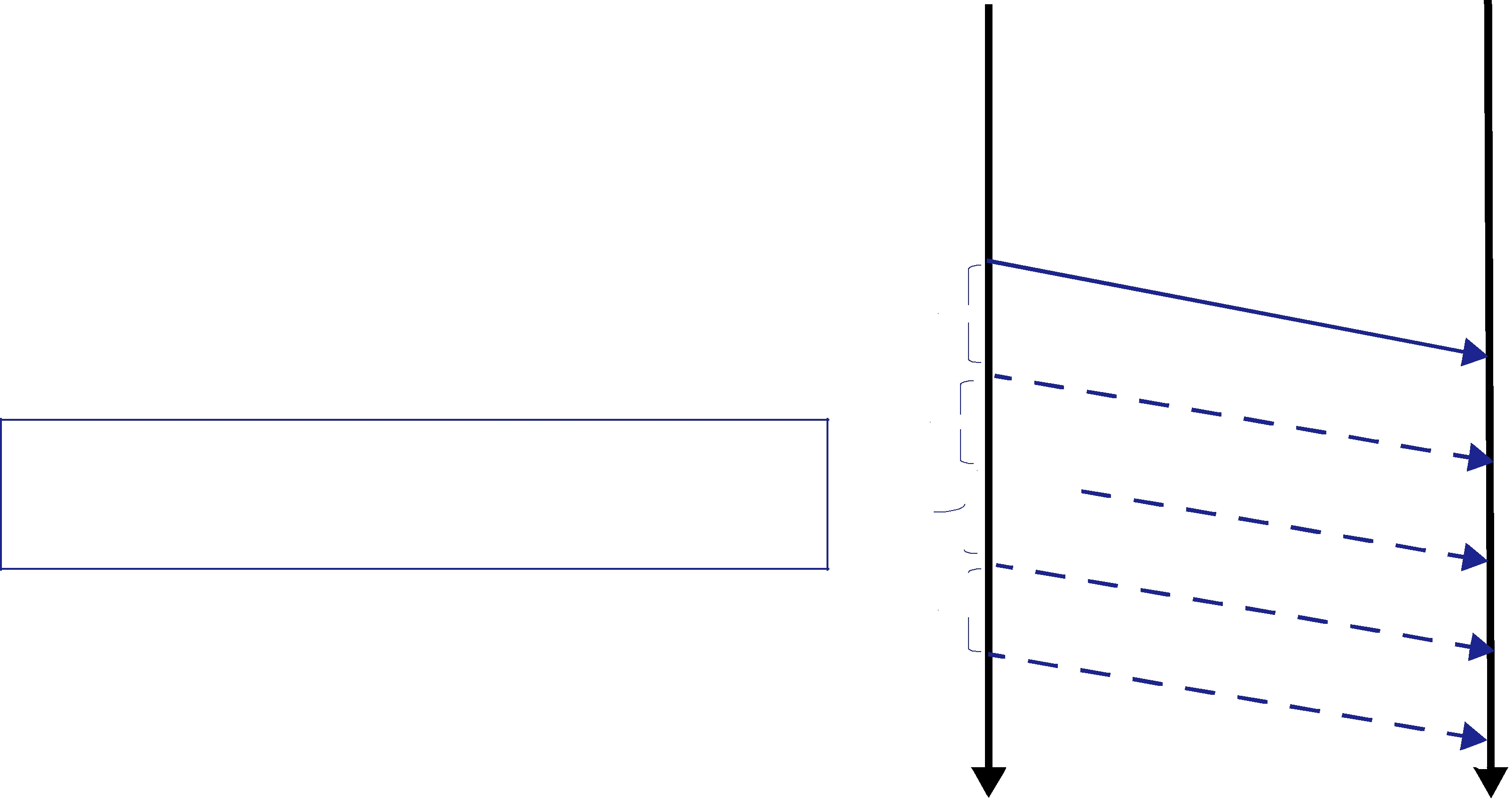

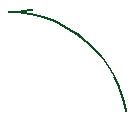

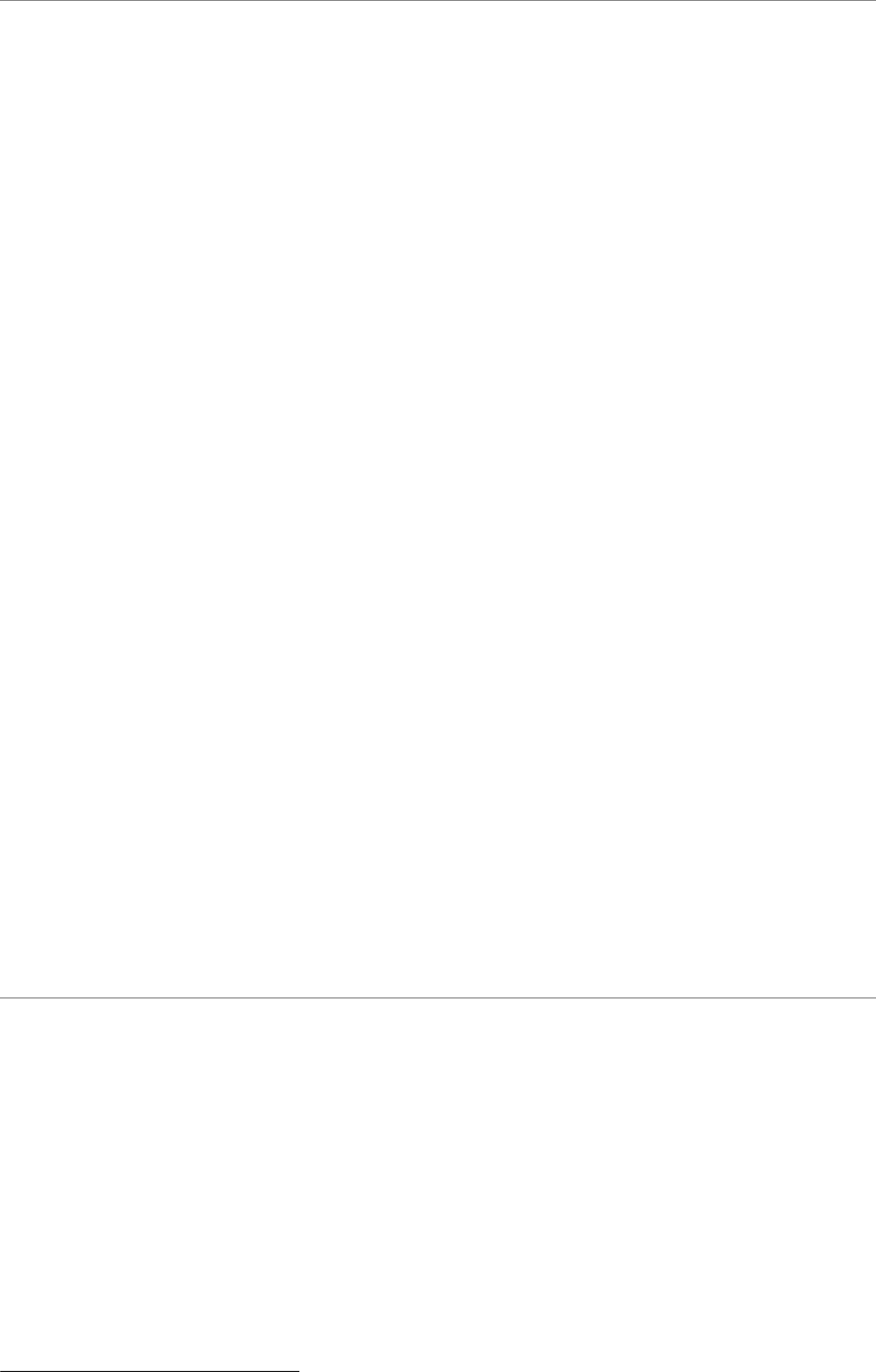

Figure 10.2 illustrates the behavior of the protocol in the presence of lost messages. Assume that the message containing DATA(A,1) is dropped by the network. When the DataReader receives

Figure 10.2 RTPS Reliable Protocol in the Presence of Message Loss

DataWriter

write(S01)

1 A X

cache (A, 1)

D |

|

AT |

|

A ( |

|

A, |

|

1); |

|

|

HB (1) |

DataReader

r

r

write(S02)

1 |

A |

X |

|

|

|

|

|

cache(B,2) |

|||||||

|

|

|

|||||

2 |

B |

X |

|||||

|

|

|

|||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

get(1)

write(S03)

DATA |

|

|

|

(B,2); |

|

|

|

HB |

|

K(1) |

|

KNAC |

|

|

AC |

|

|

D |

|

|

AT |

|

|

|

A ( |

|

|

A,1) |

|

1 |

A |

X |

cache(C,3) |

|

|

|

|

||

2 |

B |

X |

||

|

||||

|

|

|

|

|

3 |

C |

X |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

1 |

A |

4 |

|

|

|

|

|

|

|

2 |

B |

4 |

|

|

|

|

|

||

3 |

C |

4 |

||

|

||||

|

|

|

|

|

|

|

|

|

DATA (C,3); HB

|

K(4) |

KNAC |

|

AC |

|

time

time

|

|

|

|

1 |

|

X |

|

cache (B,2) |

|

||||||

|

|

|

|||||

2 |

B |

X |

|||||

|

|

|

|||||

|

|

|

|

|

|

||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|||

|

|

|

|

1 |

A |

4 |

|

cache (A,1) |

|||||||

|

|

|

|||||

2 |

B |

4 |

|||||

|

|

|

|||||

|

|

|

|

|

|

||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|||

|

|

|

|

1 |

A |

4 |

|

cache (C,3) |

|||||||

|

|

|

|||||

2 |

B |

4 |

|||||

|

|

|

|||||

|

|

|

|

|

|

||

|

|

|

|

3 |

C |

4 |

|

|

|

|

|

|

|

||

|

|

|

|

|

|

||

|

|

|

|||||

time |

See Figure 10.1 for |

|

meaning of table columns. |

||

|

the next message (DATA(B,2);

1.The data associated with sequence number 2 (B) is tagged with ‘X’ to indicate that it is not deliverable to the application (that is, it should not be made available to the application, because the application needs to receive the data associated with sample 1

(A) first).

2.An ACKNACK(1) is sent to the DataWriter to request that the data tagged with sequence number 1 be resent.

Reception of the ACKNACK(1) causes the DataWriter to resend DATA(A,1). Once the DataReader receives it, it can ‘commit’ both A and B such that the application can now access both (indicated

by the “✔”) and call the DataReaderListener. From there on, the protocol proceeds as before for the next data message (C) and so forth.

A subtle but important feature of the RTPS protocol is that ACKNACK messages are only sent as a direct response to HB messages. This allows the DataWriter to better control the overhead of these ‘administrative’ messages. For example, if the DataWriter knows that it is about to send a chain of DATA messages, it can bundle them all and include a single HB at the end, which minimizes ACKNACK traffic.

10.3Using QosPolicies to Tune the Reliable Protocol

Reliability is controlled by the QosPolicies in Table 10.1. To enable reliable delivery, read the following sections to learn how to change the QoS for the DataWriter and DataReader:

❏Enabling Reliability (Section 10.3.1)

❏Tuning Queue Sizes and Other Resource Limits (Section 10.3.2)

❏Controlling Heartbeats and Retries with DataWriterProtocol QosPolicy (Section 10.3.4)

❏Avoiding Message Storms with DataReaderProtocol QosPolicy (Section 10.3.5)

❏Resending Samples to

Then see this section to explore example use cases:

Table 10.1 QosPolicies for Reliable Communications

QosPolicy |

Description |

Related |

Reference |

|

Entitiesa |

||||

|

|

|

||

|

|

|

|

|

|

To establish reliable communication, this QoS must be |

|

||

Reliability |

set to DDS_RELIABLE_RELIABILITY_QOS for the |

DW, DR |

||

|

DataWriter and its DataReaders. |

|

||

|

|

|

||

|

|

|

|

|

|

This QoS determines the amount of resources each side |

|

|

|

|

can use to manage instances and samples of instances. |

|

|

|

|

Therefore it controls the size of the DataWriter’s send |

|

||

ResourceLimits |

queue and the DataReader’s receive queue. The send |

DW, DR |

||

|

queue stores samples until they have been ACKed by |

|

||

|

|

|

||

|

all DataReaders. The DataReader’s receive queue stores |

|

|

|

|

samples for the user’s application to access. |

|

|

|

|

|

|

|

|

History |

This QoS affects how a DataWriter/DataReader behaves |

DW, DR |

||

|

when its send/receive queue fills up. |

|

||

DataWriterProtocol |

This QoS configures |

DW |

||

|

QoS can disable positive ACKs for its DataReaders. |

|

||

|

When a reliable DataReader receives a heartbeat from a |

|

|

|

|

DataWriter and needs to return an ACKNACK, the |

|

||

DataReaderProtocol |

DataReader can choose to delay a while. This QoS sets |

DR |

||

|

the minimum and maximum delay. It can also disable |

|

||

|

|

|

||

|

positive ACKs for the DataReader. |

|

|

|

|

|

|

|

Table 10.1 QosPolicies for Reliable Communications

QosPolicy |

Description |

Related |

Reference |

|

Entitiesa |

||||

|

|

|

||

|

|

|

|

|

|

This QoS determines additional amounts of resources |

|

|

|

|

that the DataReader can use to manage samples |

|

|

|

DataReaderResource- |

(namely, the size of the DataReader’s internal queues, |

DR |

||

Limits |

which cache samples until they are ordered for reliabil- |

|

||

|

ity and can be moved to the DataReader’s receive queue |

|

|

|

|

for access by the user’s application). |

|

|

|

|

|

|

|

|

Durability |

This QoS affects whether |

DW, DR |

||

|

receive all |

|

a.DW = DataWriter, DR = DataReader

10.3.1Enabling Reliability

You must modify the RELIABILITY QosPolicy (Section 6.5.19) of the DataWriter and each of its reliable DataReaders. Set the kind field to DDS_RELIABLE_RELIABILITY_QOS:

❏ DataWriter

writer_qos.reliability.kind = DDS_RELIABLE_RELIABILITY_QOS;

❏ DataReader

reader_qos.reliability.kind = DDS_RELIABLE_RELIABILITY_QOS;

10.3.1.1Blocking until the Send Queue Has Space Available

The max_blocking_time property in the RELIABILITY QosPolicy (Section 6.5.19) indicates how long a DataWriter can be blocked during a write().

If max_blocking_time is

If the number of unacknowledged samples in the reliability send queue drops below max_samples (set in the RESOURCE_LIMITS QosPolicy (Section 6.5.20)) before max_blocking_time, the sample is sent and write() returns DDS_RETCODE_OK.

If max_blocking_time is zero and the reliability send queue is full, write() returns DDS_RETCODE_TIMEOUT and the sample is not sent.

10.3.2Tuning Queue Sizes and Other Resource Limits

Set the HISTORY QosPolicy (Section 6.5.10) appropriately to accommodate however many samples should be saved in the DataWriter’s send queue or the DataReader’s receive queue. The defaults may suit your needs; if so, you do not have to modify this QosPolicy.

Set the DDS_RtpsReliableWriterProtocol_t in the DATA_WRITER_PROTOCOL QosPolicy (DDS Extension) (Section 6.5.3) appropriately to accommodate the number of unacknowledged samples that can be

For more information, see the following sections:

❏Understanding the Send Queue and Setting its Size (Section 10.3.2.1)

❏Understanding the Receive Queue and Setting Its Size (Section 10.3.2.2)

Note: The HistoryQosPolicy’s depth must be less than or equal to the ResourceLimitsQosPolicy’s max_samples_per_instance; max_samples_per_instance must be less than or equal to the ResourceLimitsQosPolicy’s max_samples (see RESOURCE_LIMITS QosPolicy (Section 6.5.20)), and max_samples_per_remote_writer (see

DATA_READER_RESOURCE_LIMITS QosPolicy (DDS Extension) (Section 7.6.2)) must be less than or equal to max_samples.

❏depth <= max_samples_per_instance <= max_samples

❏max_samples_per_remote_writer <= max_samples

Examples:

❏ DataWriter

writer_qos.resource_limits.initial_instances = 10; writer_qos.resource_limits.initial_samples = 200; writer_qos.resource_limits.max_instances = 100; writer_qos.resource_limits.max_samples = 2000; writer_qos.resource_limits.max_samples_per_instance = 20; writer_qos.history.depth = 20;

❏ DataReader

reader_qos.resource_limits.initial_instances = 10; reader_qos.resource_limits.initial_samples = 200; reader_qos.resource_limits.max_instances = 100; reader_qos.resource_limits.max_samples = 2000; reader_qos.resource_limits.max_samples_per_instance = 20; reader_qos.history.depth = 20; reader_qos.reader_resource_limits.max_samples_per_remote_writer = 20;

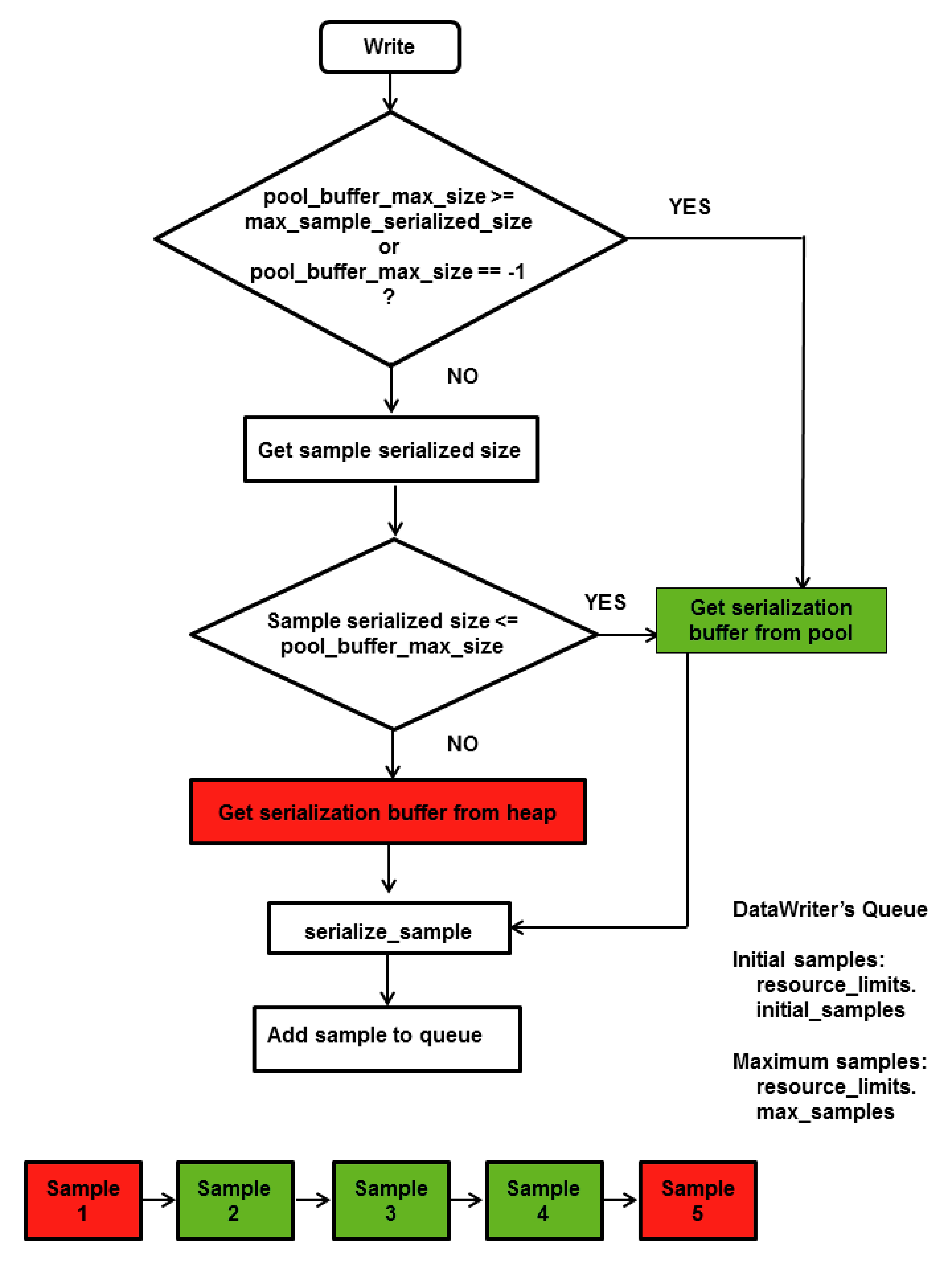

10.3.2.1Understanding the Send Queue and Setting its Size

A DataWriter’s send queue is used to store each sample it writes. A sample will be removed from the send queue after it has been acknowledged (through an ACKNACK) by all the reliable DataReaders. A DataReader can request that the DataWriter resend a missing sample (through an ACKNACK). If that sample is still available in the send queue, it will be resent. To elicit timely ACKNACKs, the DataWriter will regularly send heartbeats to its reliable DataReaders.

A DataWriter’s send queue size is determined by its RESOURCE_LIMITS QosPolicy (Section 6.5.20), specifically the max_samples field. The appropriate value depends on application parameters such as how fast the publication calls write().

A DataWriter has a "send window" that is the maximum number of unacknowledged samples allowed in the send queue at a time. The send window enables configuration of the number of samples queued for reliability to be done independently from the number of samples queued for history. This is of great benefit when the size of the history queue is much different than the size of the reliability queue. For example, you may want to resend a large history to

The send window is determined by the DataWriterProtocolQosPolicy, specifically the fields min_send_window_size and max_send_window_size within the rtps_reliable_writer field of type DDS_RtpsReliableWriterProtocol_t. Other fields control a dynamic send window, where the send window size changes in response to network congestion to maximize the effective send rate. Like for max_samples, the appropriate values depend on application parameters.

Strict reliability: If a DataWriter does not receive ACKNACKs from one or more reliable DataReaders, it is possible for the reliability send

reliability queue before writing any more samples. Connext provides two mechanisms to do this:

❏Allow the write() operation to block until there is space in the reliability queue again to store the sample. The maximum time this call blocks is determined by the max_blocking_time field in the RELIABILITY QosPolicy (Section 6.5.19) (also discussed in Section 10.3.1.1).

❏Use the DataWriter’s Listener to be notified when the reliability queue fills up or empties again.

When the HISTORY QosPolicy (Section 6.5.10) on the DataWriter is set to KEEP_LAST, strict reliability is not guaranteed. When there are depth number of samples in the queue (set in the HISTORY QosPolicy (Section 6.5.10), see Section 10.3.3) the oldest sample will be dropped from the queue when a new sample is written. Note that in such a reliable mode, when the send window is larger than max_samples, the DataWriter will never block, but strict reliability is no longer guaranteed.

If there is a request for the purged sample from any DataReaders, the DataWriter will send a heartbeat that no longer contains the sequence number of the dropped sample (it will not be able to send the sample).

Alternatively, a DataWriter with KEEP_LAST may block on write() when its send window is smaller than its send queue. The DataWriter will block when its send window is full. Only after the blocking time has elapsed, the DataWriter will purge a sample, and then strict reliability is no longer guaranteed.

The send queue size is set in the max_samples field of the RESOURCE_LIMITS QosPolicy (Section 6.5.20). The appropriate size for the send queue depends on application parameters (such as the send rate), channel parameters (such as

The DataReader’s receive queue size should generally be larger than the DataWriter’s send queue size. Receive queue size is discussed in Section 10.3.2.2.

A good rule of thumb, based on a simple model that assumes individual packet drops are not correlated and

Figure 10.3 Calculating Minimum Send Queue Size for a Desired Level of Reliability

NRTlog ( 1 – Q)

=2

log ( p)

Simple formula for determining the minimum size of the send queue required for strict reliability.

In the above equation, R is the rate of sending samples, T is the

Table 10.2 gives the required size of the send queue for several common scenarios.

Table 10.2 Required Size of the Send Queue for Different Network Parameters

Qa |

pb |

Tc |

|

Rd |

Ne |

|

|

|

|

|

|

99% |

1% |

0.001f sec |

100 Hz |

|

1 |

99% |

1% |

0.001 sec |

2000 Hz |

|

2 |

|

|

|

|

|

|

99% |

5% |

0.001 sec |

100 Hz |

|

1 |

|

|

|

|

|

|

99% |

5% |

0.001 sec |

2000 Hz |

|

4 |

|

|

|

|

|

|

99.99% |

1% |

0.001 sec |

100 Hz |

|

1 |

|

|

|

|

|

|

Table 10.2 Required Size of the Send Queue for Different Network Parameters

Qa |

pb |

|

Tc |

|

Rd |

Ne |

|

|

|

|

|

|

|

99.99% |

1% |

0.001 sec |

|

2000 Hz |

|

6 |

|

|

|

|

|

|

|

99.99% |

5% |

0.001 sec |

|

100 Hz |

|

1 |

|

|

|

|

|

|

|

99.99% |

5% |

0.001 sec |

|

2000 Hz |

|

8 |

|

|

|

|

|

|

|

a."Q" is the desired level of reliability measured as the probability that any data update will eventually be delivered successfully. In other words, percentage of samples that will be successfully delivered.

b."p" is the probability that any single packet gets lost in the network.

c."T" is the

d."R" is the rate at which the publisher is sending updates.

e."N" is the minimum required size of the send queue to accomplish the desired level of reliability "Q".

f.The typical

Note: Packet loss on a network frequently happens in bursts, and the packet loss events are correlated. This means that the probability of a packet being lost is much higher if the previous packet was lost because it indicates a congested network or busy receiver. For this situation, it may be better to use a queue size that can accommodate the longest period of network congestion, as illustrated in Figure 10.4.

Figure 10.4 Calculating Minimum Send Queue Size for Networks with Dropouts

N = RD( Q)

Send queue size as a function of send rate "R" and maximum dropout time D.

In the above equation R is the rate of sending samples, D(Q) is a time such that Q percent of the dropouts are of equal or lesser length, and Q is the required probability that a sample is eventually successfully delivered. The problem with the above formula is that it is hard to determine the value of D(Q) for different values of Q.

For example, if we want to ensure that 99.9% of the samples are eventually delivered successfully, and we know that the 99.9% of the network dropouts are shorter than 0.1 seconds, then we would use N = 0.1*R. So for a rate of 100Hz, we would use a send queue of N = 10; for a rate of 2000Hz, we would use N = 200.

10.3.2.2Understanding the Receive Queue and Setting Its Size

Samples are stored in the DataReader’s receive queue, which is accessible to the user’s application.

A sample is removed from the receive queue after it has been accessed by take(), as described in Accessing Data Samples with Read or Take (Section 7.4.3). Note that read() does not remove samples from the queue.

A DataReader's receive queue size is limited by its RESOURCE_LIMITS QosPolicy (Section 6.5.20), specifically the max_samples field. The storage of

A DataReader can maintain reliable communications with multiple DataWriters (e.g., in the case of the OWNERSHIP_STRENGTH QosPolicy (Section 6.5.16) setting of SHARED). The maximum number of

The DataReader will cache samples that arrive out of order while waiting for missing samples to be resent. (Up to 256 samples can be resent; this limitation is imposed by the wire protocol.) If there is no room, the DataReader has to reject

The appropriate size of the receive queue depends on application parameters, such as the DataWriter’s sending rate and the probability of a dropped sample. However, the receive queue size should generally be larger than the send queue size. Send queue size is discussed in Section 10.3.2.1.

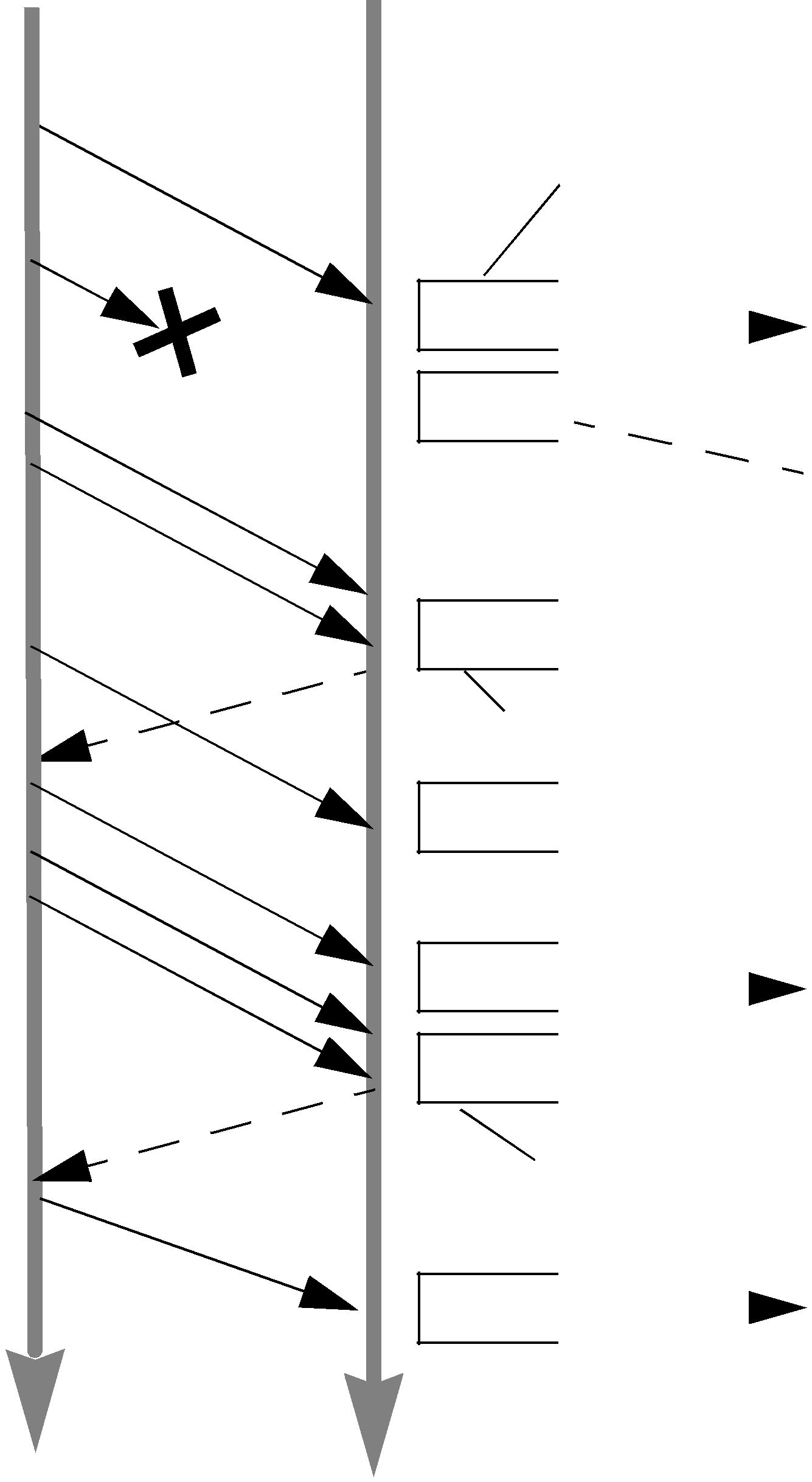

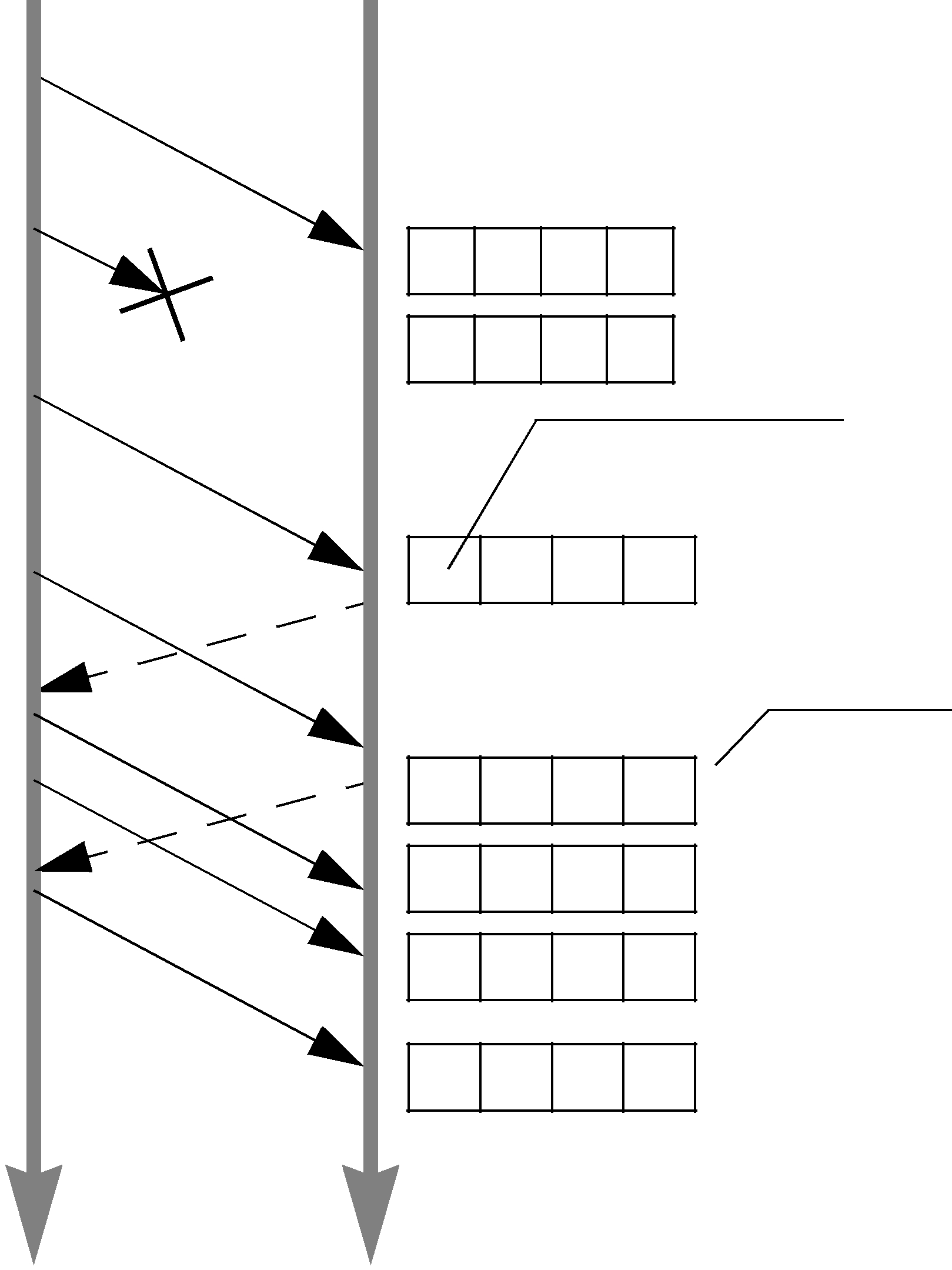

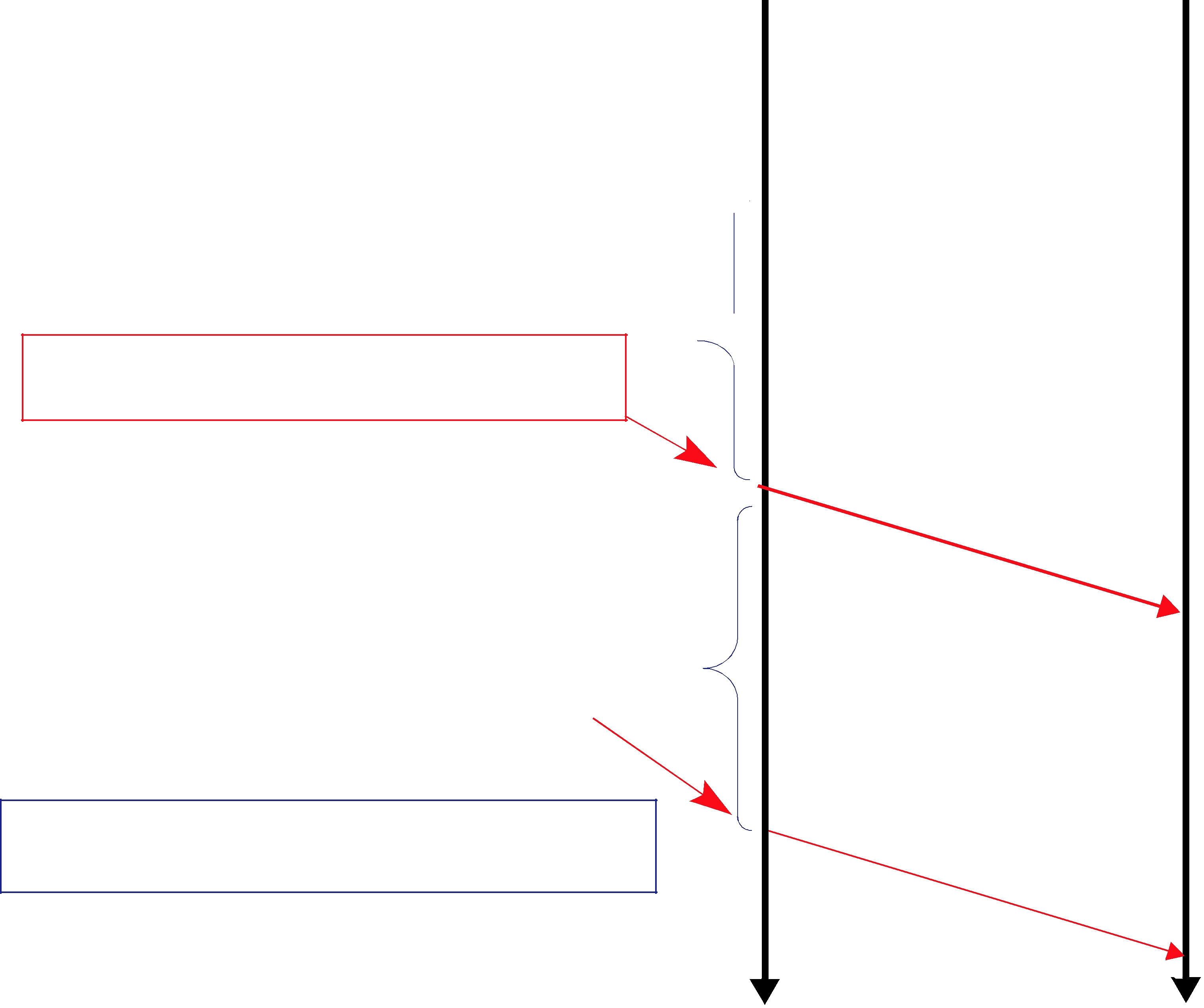

Figure 10.5 and Figure 10.6 compare two hypothetical DataReaders, both interacting with the same DataWriter. The queue on the left represents an ordering cache, allocated from receive

In Figure 10.6 on page

Figure 10.5 Effect of

DataWriter DataReader

Send Sample “1”

Send Sample “2”

1

Sample

Sample

Send Sample “3” “2” lost. Send HeartBeat

max_samples is 4. This also limits the number of unordered samples that can be cached.

Sample 1 is taken

Sample 1 is taken

Note: no unordered samples cached

Note: no unordered samples cached

Send Sample “4”

C |

||||

A |

||||

|

|

|

||

Send Sample “5” |

|

|||

|

|

|

|

|

( |

|

|

|

|

|

|

3 |

|

|

|

|

|

|

|

|

|

1- |

|

|

|

|

|

|

|

|

|

|

|

|

|

|||

|

3 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

) |

“ |

” |

|

|

|

|

|

|

|

|

|

Space reserved for missing sample “2”. |

||

|

|

K |

|

|

|

|

|

|

|

|

|

|||||

|

|

|

|

|

|

|

|

|

|

|

|

|

||||

|

C |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

A |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Samples “3” and “4” are cached |

|

|

|

|

|

|

|

3 |

4 |

|

|

|

|

|

|

while waiting for missing sample “2”. |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

2 |

3 |

4 |

|

|

|

|

|

|

Samples |

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

5 |

|

|

|

|

|

|

|

|

|

Sample 5 is taken |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Figure 10.6 Effect of Receive Queue Size on Performance: Small Queue Size

DataWriter DataReader

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Send Sample “1” |

|

|

|

|

|

|

|

|

|

|

|

||

Send Sample “2” |

|

|

|

|

|

|

|

|

|

|

|

||

Send Sample “3” |

|

|

|

Sample |

|

|

|||||||

|

|

|

“2” lost |

|

|

|

|||||||

Send Heartbeat |

|

|

|

|

|

|

|||||||

|

|

|

|

|

|

|

|

|

|

|

|||

|

|

|

H |

|

|

|

|

|

|

|

|

|

|

|

|

|

B ( |

|

|

|

|

|

|

|

|

||

|

|

|

|

|

1 |

|

|

|

|

|

|

|

|

|

|

|

|

|

- |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

3 |

|

|

|

|

|

|

|

Send Sample “4” |

|

|

|

|

) |

|

|

|

|

|

|

||

|

|

|

|

|

|

|

|

2 |

) |

||||

|

|

|

|

|

|

|

|

|

|

|

|||

|

|

|

|

|

|

|

|

|

|

( |

|

|

|

|

|

|

|

|

|

|

|

|

K |

|

|

|

|

|

|

|

|

|

|

|

|

C |

|

|

|

|

|

|

|

|

C |

|

A |

|

|

|

|

|

|||

A |

|

|

|

|

|

|

|

|

|

|

|||

|

|

|

|

|

|

|

|

|

|

|

|||

Send Sample “5” |

|

|

|

|

|

|

|

|

|

|

|

||

Send Heartbeat |

|

|

|

|

|

|

|

|

|

|

|

||

|

|

H |

|

|

|

|

|

|

|

|

|

|

|

|

|

B ( |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

1 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

- |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

5 |

|

|

|

|

|

|

|

|

|

|

|

|

|

) |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

4 |

) |

|

|

|

|

|

|

|

|

|

|

|

( |

|

|

|

|

|

|

|

|

|

|

|

|

K |

|

|

|

|

|

|

|

|

|

|

|

|

C |

|

|

|

|

|

|

|

|

|

|

|

|

A |

|

|

|

|

|

|

|

|

N |

|

|

|

|

|

|

|||||

CK |

|

|

|

|

|

|

|

|

|||||

|

|

|

A |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

max_samples is 2. This also limits the |

|

|

|

|

|

|

|

|

|

|

|

number of unordered samples that |

|

|

|

|

|

|

|

|

|

|

|

can be cached. |

1 |

|

|

|

|

|

|

|

|

|

|

Move sample 1 to receive queue. |

|

|

|

|

|

|

|

|

|

|

||

|

|

|

|

|

|

|

|

|

|

||

|

|

|

|

|

|

||||||

|

|

|

|

|

|

|

|

|

|

|

Note: no unordered samples cached |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Space reserved for missing sample “2”. |

||

|

|

3 |

|

|

|

|

|

||||

|

|

|

|

|

|

|

|

|

|

||

|

|

3 |

|

|

|

|

|

Sample “4” must be dropped |

|||

|

|

|

|

|

|

|

|||||

|

|

|

|

|

|

|

|||||

2 |

|

|

|

|

|

|

|

|

|

because it does not fit in the queue. |

|

|

|

|

|

|

|

|

|

|

Move samples 2 and 3 to receive queue. |

||

|

3 |

|

|

|

|

|

|

||||

|

|

||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

5 |

|

|

|

|

|

|

|

||

|

|

|

|

|

|

|

|

|

|

Space reserved for missing sample “4”. |

|

4 |

|

|

|

|

|

|

|

|

Move samples 4 and 5 to receive queue. |

||

|

5 |

|

|

|

|

|

|

||||

|

|

||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

10.3.3Controlling Queue Depth with the History QosPolicy

If you want to achieve strict reliability, set the kind field in the HISTORY QosPolicy (Section 6.5.10) for both the DataReader and DataWriter to KEEP_ALL; in this case, the depth does not matter.

Or, for

The depth field in the HISTORY QosPolicy (Section 6.5.10) controls how many samples Connext will attempt to keep on the DataWriter’s send queue or the DataReader’s receive queue. For reliable communications, depth should be >= 1. The depth can be set to 1, but cannot be more than the max_samples_per_instance in RESOURCE_LIMITS QosPolicy (Section 6.5.20).

Example:

❏ DataWriter

writer_qos.history.depth = <number of samples to keep in send queue>;

❏ DataReader

reader_qos.history.depth = <number of samples to keep in receive queue>;

10.3.4Controlling Heartbeats and Retries with DataWriterProtocol QosPolicy

In the Connext reliability model, the DataWriter sends data samples and heartbeats to reliable DataReaders. A DataReader responds to a heartbeat by sending an ACKNACK, which tells the DataWriter what the DataReader has received so far.

In addition, the DataReader can request missing samples (by sending an ACKNACK) and the DataWriter will respond by resending the missing samples. This section describes some advanced timing parameters that control the behavior of this mechanism. Many applications do not need to change these settings. These parameters are contained in the

DATA_WRITER_PROTOCOL QosPolicy (DDS Extension) (Section 6.5.3).

The protocol described in Overview of the Reliable Protocol (Section 10.2) uses very simple rules such as piggybacking HB messages to each DATA message and responding immediately to ACKNACKs with the requested repair messages. While correct, this protocol would not be capable of accommodating optimum performance in more advanced use cases.

This section describes some of the parameters configurable by means of the rtps_reliable_writer structure in the DATA_WRITER_PROTOCOL QosPolicy (DDS Extension) (Section 6.5.3) and how they affect the behavior of the RTPS protocol.

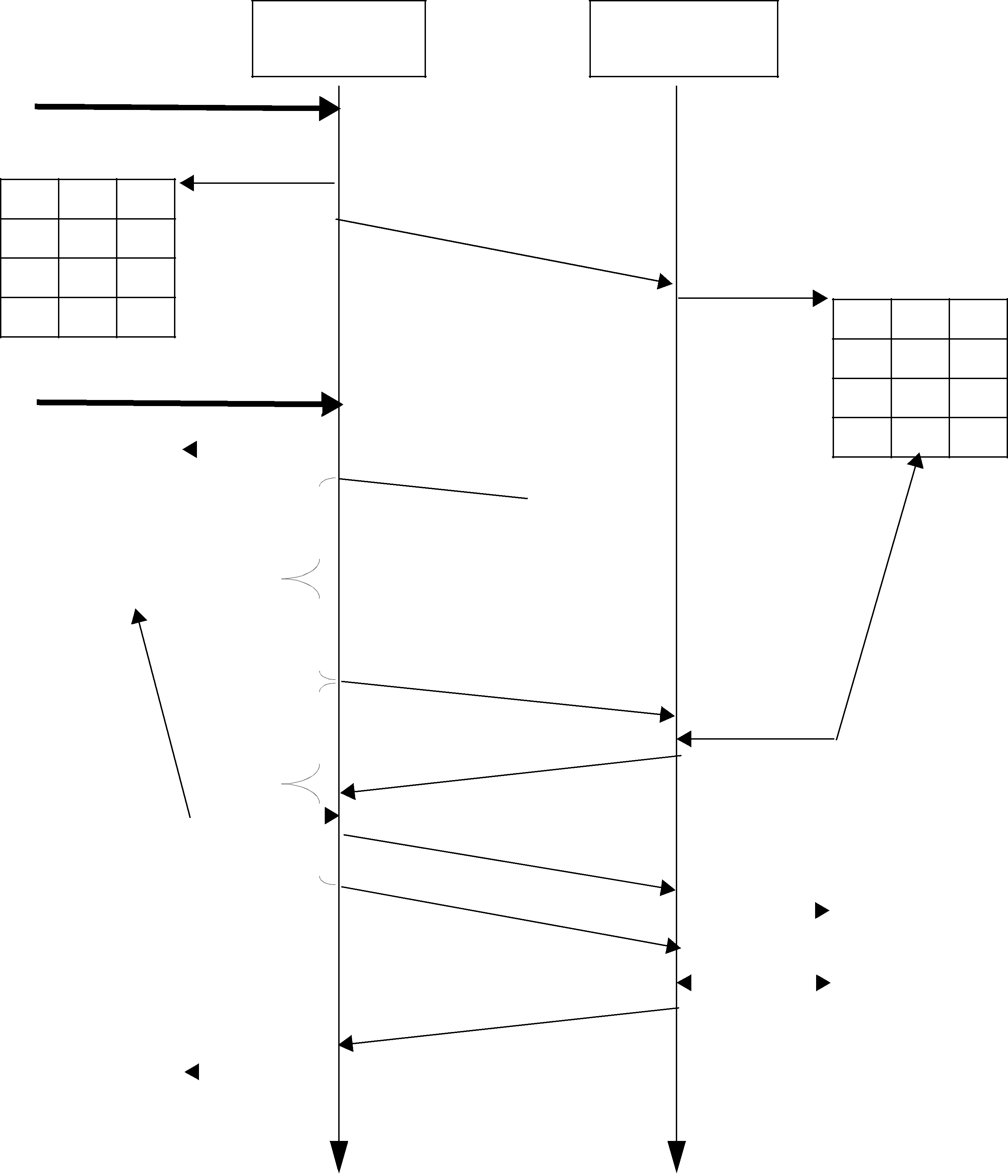

10.3.4.1How Often Heartbeats are Resent (heartbeat_period)

If a DataReader does not acknowledge a sample that has been sent, the DataWriter resends the heartbeat. These heartbeats are resent at the rate set in the DATA_WRITER_PROTOCOL QosPolicy (DDS Extension) (Section 6.5.3), specifically its heartbeat_period field.

For example, a heartbeat_period of 3 seconds means that if a DataReader does not receive the latest sample (for example, it gets dropped by the network), it might take up to 3 seconds before the DataReader realizes it is missing data. The application can lower this value when it is important that recovery from packet loss is very fast.

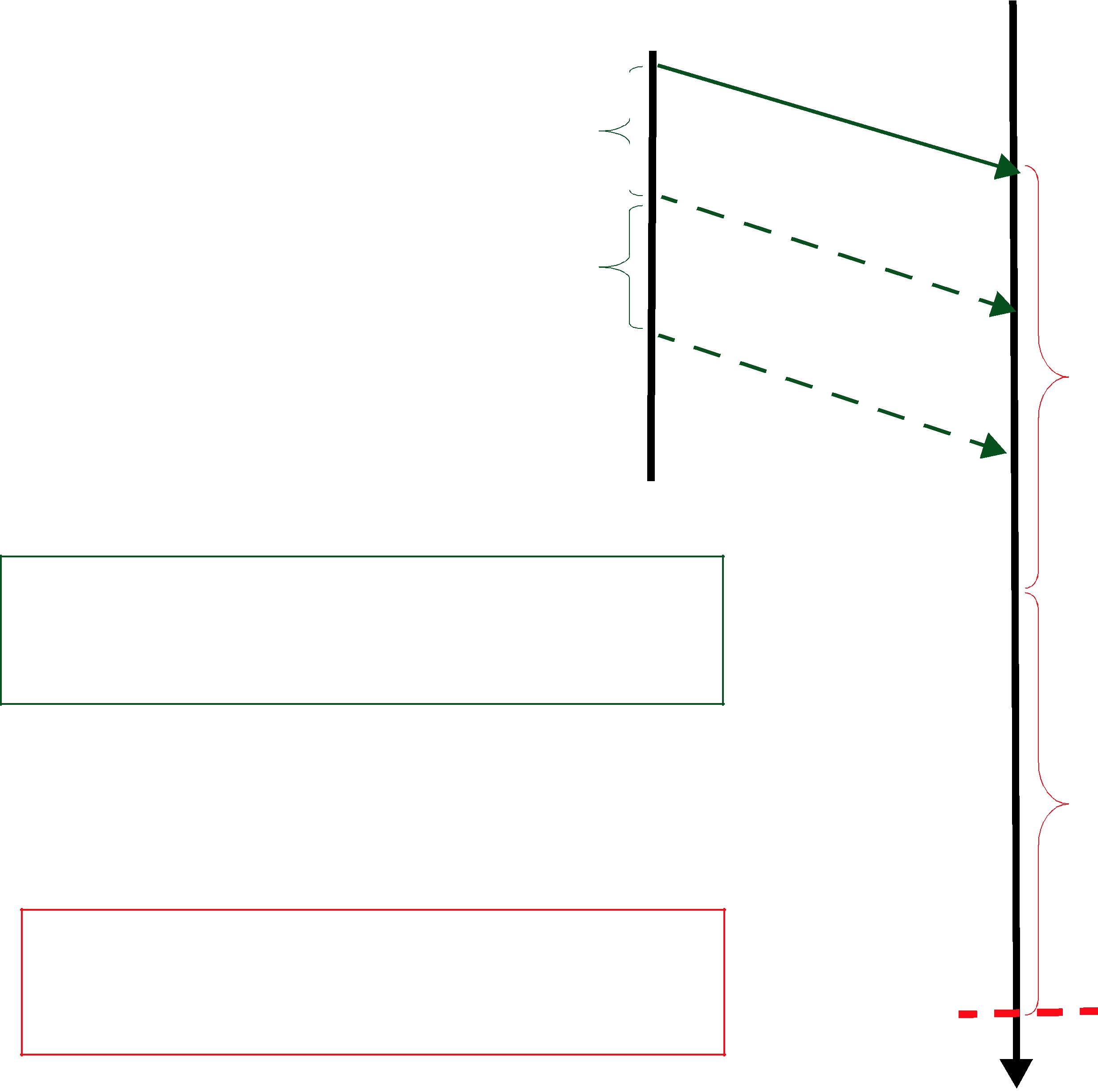

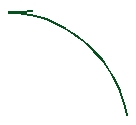

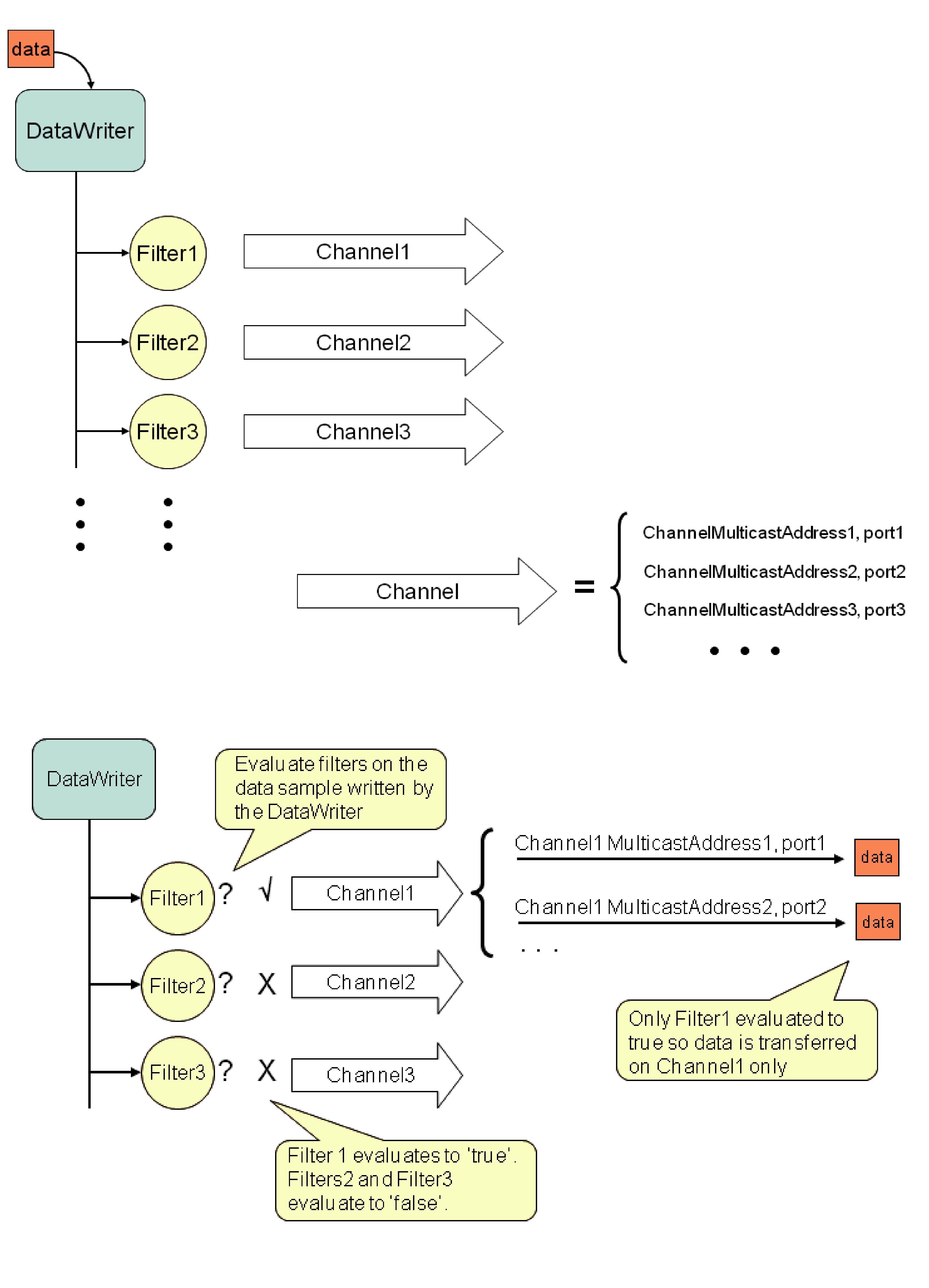

The basic approach of sending HB messages as a piggyback to DATA messages has the advantage of minimizing network traffic. However, there is a situation where this approach, by itself, may result in large latencies. Suppose there is a DataWriter that writes bursts of data, separated by relatively long periods of silence. Furthermore assume that the last message in one of the bursts is lost by the network. This is the case shown for message DATA(B, 2) in Figure 10.7. If HBs were only sent piggybacked to DATA messages, the DataReader would not realize it missed the ‘B’ DATA message with sequence number ‘2’ until the DataWriter wrote the next message. This may be a long time if data is written sporadically. To avoid this situation,

Connext can be configured so that HBs are sent periodically as long as there are samples that have not been acknowledged even if no data is being sent. The period at which these HBs are sent is configurable by setting the rtps_reliable_writer.heartbeat_period field in the DATA_WRITER_PROTOCOL QosPolicy (DDS Extension) (Section 6.5.3).

Note that a small value for the heartbeat_period will result in a small

Also note that the heartbeat_period should not be less than the rtps_reliable_reader.heartbeat_suppression_duration in the DATA_READER_PROTOCOL QosPolicy (DDS Extension) (Section 7.6.1); otherwise those HBs will be lost.

Figure 10.7 Use of heartbeat_period

DataWriter |

DataReader |

write(A)

1 A X

cache (A, 1)

DATA (A,1)

cache (A,1) 1 |

A 4 |

write(B)

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

cache(B,2) |

|

|

|

|

||||||

|

1 |

|

A |

X |

|

|

|

|

|||||||

|

|

|

|

|

|

|

|

|

|

|

DATA (B,2) |

|

|

||

|

2 |

|

B |

X |

|

heartbeat peri |

|

|

|

||||||

|

|

|

|

|

|

|

|

|

|

|

|

||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||

|

|

|

|

|

|

|

|

|

|||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||

|

|

|

|

|

|

|

|

|

|||||||

|

|

|

|

|

|

|

|

|

|

|

|||||

|

|

acked(1) |

|

|

|

|

|||||||||

|

|

|

|

|

|

|

|

||||||||

|

|

|

|

|

|

|

|

|

|||||||

|

|

get(2) |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

K(2) |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

KNAC |

|||

|

|

|

|

|

|

|

|

|

|

|

AC |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

D |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

AT |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

A( |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

B, |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

2) |

|

|

|

|

|

|

|

|

|

|

|

|

H |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

B( |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

1- |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

2) |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

1 |

|

A |

4 |

|

|

|

|

|

|

|

|

|

|

K(3) |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

KNAC |

||||

|

2 |

|

B |

4 |

|

|

|

|

|

|

AC |

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||||

|

|

|

|

|

|

|

|

|

|||||||

|

|

|

|

|

|

|

|

|

|

|

time |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

r

r

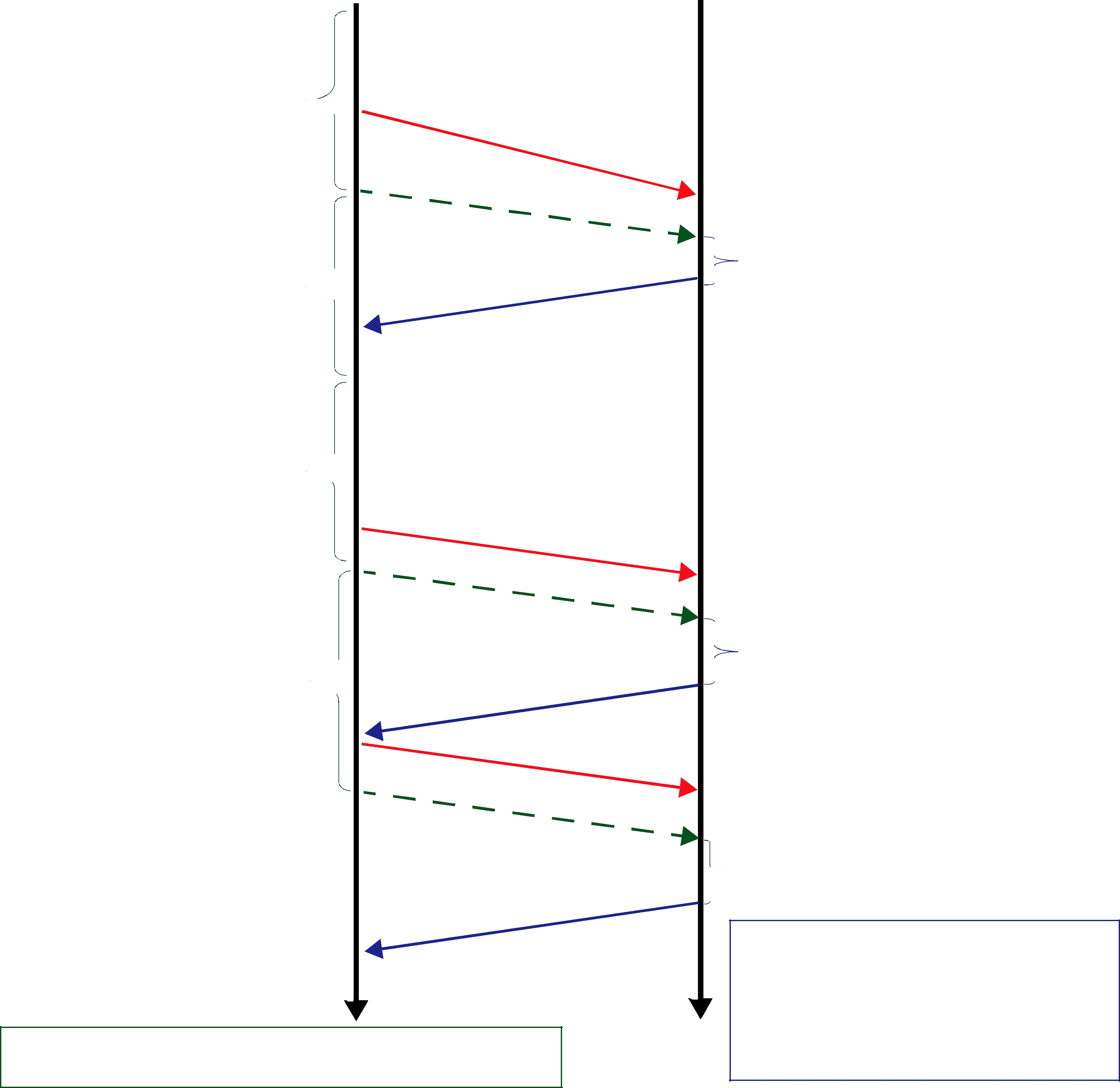

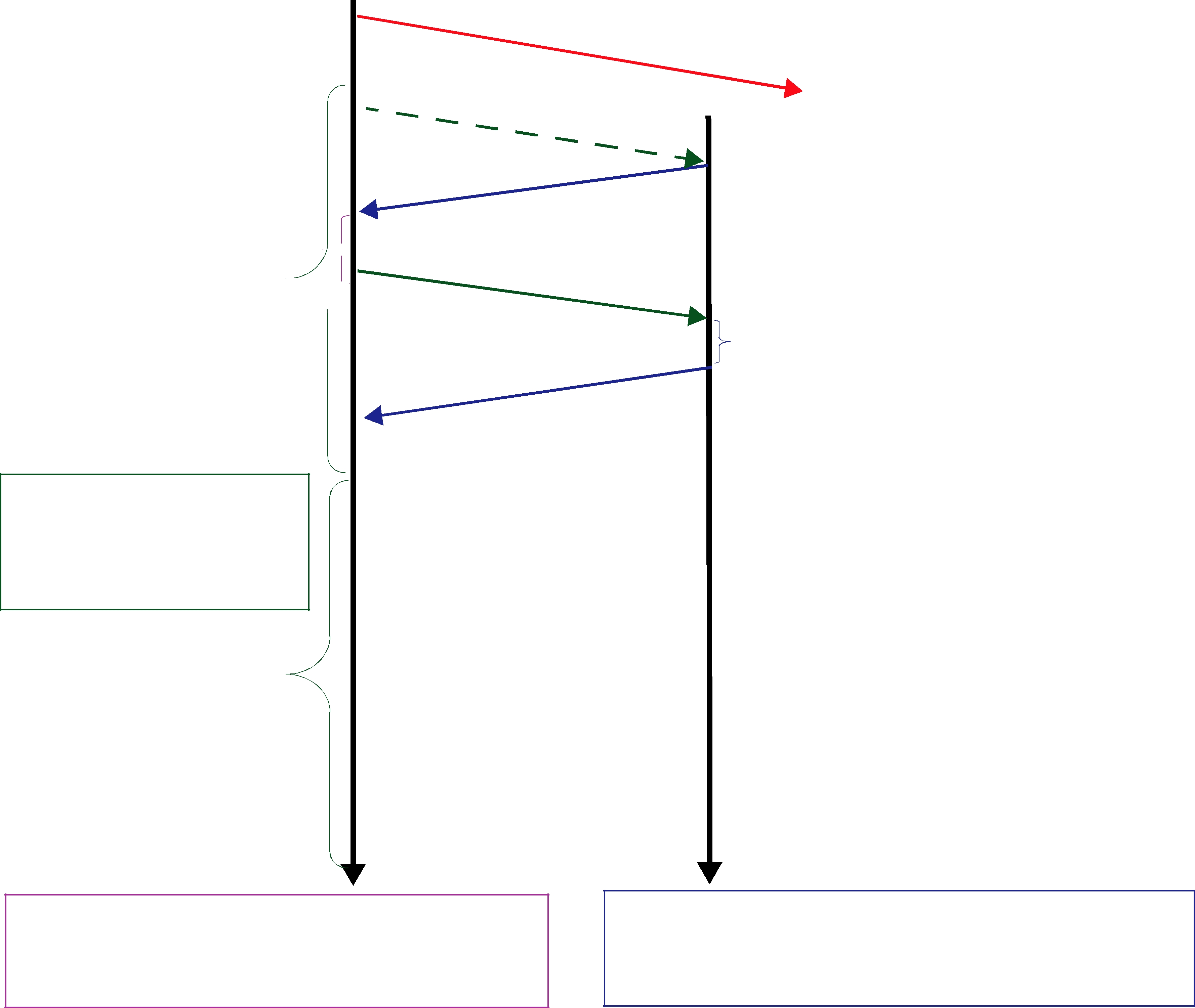

10.3.4.2How Often Piggyback Heartbeats are Sent (heartbeats_per_max_samples)

A DataWriter will automatically send heartbeats with new samples to request regular ACKNACKs from the DataReader. These are called “piggyback” heartbeats.

If batching is disabled1: one piggyback heartbeat will be sent every [max_samples2/ heartbeats_per_max_samples] number of samples.

If batching is enabled: one piggyback heartbeat will be sent every [max_batches3/ heartbeats_per_max_samples] number of samples.

Furthermore, one piggyback heartbeat will be sent per send window. If the above calculation is greater than the send window size, then the DataWriter will send a piggyback heartbeat for every [send window size] number of samples.

The heartbeats_per_max_samples field is part of the rtps_reliable_writer structure in the

DATA_WRITER_PROTOCOL QosPolicy (DDS Extension) (Section 6.5.3). If heartbeats_per_max_samples is set equal to max_samples, this means that a heartbeat will be sent with each sample. A value of 8 means that a heartbeat will be sent with every 'max_samples/ 8' samples. Say max_samples is set to 1024, then a heartbeat will be sent once every 128 samples. If you set this to zero, samples are sent without any piggyback heartbeat. The max_samples field is part of the RESOURCE_LIMITS QosPolicy (Section 6.5.20).

Figure 10.1 on page

There are two reasons to send a HB:

❏To request that a DataReader confirm the receipt of data via an ACKNACK, so that the

DataWriter can remove it from its send queue and therefore prevent the DataWriter’s history from filling up (which could cause the write() operation to temporarily block4).

❏To inform the DataReader of what data it should have received, so that the DataReader can send a request for missing data via an ACKNACK.

The DataWriter’s send queue can buffer many

A HB is used to get confirmation from DataReaders so that the DataWriter can remove acknowledged samples from the queue to make space for new samples. Therefore, if the queue size is large, or new samples are added slowly, HBs can be sent less frequently.

In Figure 10.8 on page

10.3.4.3Controlling Packet Size for Resent Samples (max_bytes_per_nack_response)

A repair packet is the maximum amount of data that a DataWriter will resend at a time. For example, if the DataReader requests 20 samples, each 10K, and the max_bytes_per_nack_response is set to 100K, the DataWriter will only send the first 10 samples. The DataReader will have to ACKNACK again to receive the next 10 samples.

1.Batching is enabled with the BATCH QosPolicy (DDS Extension) (Section 6.5.2).

2.max_samples is set in the RESOURCE_LIMITS QosPolicy (Section 6.5.20)

3.max_batches is set in the DATA_WRITER_RESOURCE_LIMITS QosPolicy (DDS Extension) (Section 6.5.4)

4.Note that data could also be removed from the DataWriter’s send queue if it is no longer relevant due to some other QoS such a HISTORY KEEP_LAST (Section 6.5.10) or LIFESPAN (Section 6.5.12).

Figure 10.8 Use of heartbeats_per_max_samples

DataWriter |

DataReader |

write(A)

1 A X  cache (A, 1)

cache (A, 1)

write(B)

DATA (A,1)

cache (A,1) |

|

1 |

A |

4 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

cache(B,2) |

|

|

|

1 |

A |

X |

|

|

||

|

|

|

|

|

|

|

2 |

B |

X |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

write(C)

DATA(B,2)

cache (B,2) |

|

1 |

A |

4 |

|

|

|

|

|

|

|

2 |

B |

4 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

1 |

A |

X |

|

|

|

2 |

B |

X |

|

|

|

3 |

C |

X |

|

|

|

|

|

|

1 |

A |

4 |

|

|

|

2 |

B |

4 |

|

|

|

3 |

C |

4 |

|

|

|

|

|

|

cache(C,3)

D |

|

AT |

|

A( |

|

C,3);H |

|

|

|

1 A 4

1 A 4

cache (C,3)

2 B 4

|

|

|

|

4) |

|

|

|

K( |

|

|

|

AC |

|

|

|

KN |

|

|

|

|

AC |

|

|

|

time |

|

|

|

time |

See Figure 10.1 for meaning of table columns.

A DataWriter may resend multiple missed samples in the same packet. The max_bytes_per_nack_response field in the DATA_WRITER_PROTOCOL QosPolicy (DDS Extension) (Section 6.5.3) limits the size of this ‘repair’ packet.

10.3.4.4Controlling How Many Times Heartbeats are Resent (max_heartbeat_retries)

If a DataReader does not respond within max_heartbeat_retries number of heartbeats, it will be dropped by the DataWriter and the reliable DataWriter’s Listener will be called with a

RELIABLE_READER_ACTIVITY_CHANGED Status (DDS Extension) (Section 6.3.6.8).

If the dropped DataReader becomes available again (perhaps its network connection was down temporarily), it will be added back to the DataWriter the next time the DataWriter receives some message (ACKNACK) from the DataReader.

When a DataReader is ‘dropped’ by a DataWriter, the DataWriter will not wait for the DataReader to send an ACKNACK before any samples are removed. However, the DataWriter will still send data and HBs to this DataReader as normal.

The max_heartbeat_retries field is part of the DATA_WRITER_PROTOCOL QosPolicy (DDS Extension) (Section 6.5.3).

10.3.4.5Treating

In addition to max_heartbeat_retries, if inactivate_nonprogressing_readers is set, then not only are

One example for which it could be useful to turn on inactivate_nonprogressing_readers is when a DataReader’s

10.3.4.6Coping with Redundant Requests for Missing Samples (max_nack_response_delay)

When a DataWriter receives a request for missing samples from a DataReader and responds by resending the requested samples, it will ignore additional requests for the same samples during the time period max_nack_response_delay.

The rtps_reliable_writer.max_nack_response_delay field is part of the DATA_WRITER_PROTOCOL QosPolicy (DDS Extension) (Section 6.5.3).

If your send period is smaller than the

While these redundant messages provide an extra cushion for the level of reliability desired, you can conserve the CPU and network bandwidth usage by limiting how often the same ACKNACK messages are sent; this is controlled by min_nack_response_delay.

Reliable subscriptions are prevented from resending an ACKNACK within min_nack_response_delay seconds from the last time an ACKNACK was sent for the same sample. Our testing shows that the default min_nack_response_delay of 0 seconds achieves an optimal balance for most applications on typical Ethernet LANs.

However, if your system has very slow computers and/or a slow network, you may want to consider increasing min_nack_response_delay. Sending an ACKNACK and resending a missing sample inherently takes a long time in this system. So you should allow a longer time for recovery of the lost sample before sending another ACKNACK. In this situation, you should increase min_nack_response_delay.

If your system consists of a fast network or computers, and the receive queue size is very small, then you should keep min_nack_response_delay very small (such as the default value of 0). If the queue size is small, recovering a missing sample is more important than conserving CPU and network bandwidth (new samples that are too far ahead of the missing sample are thrown away). A fast system can cope with a smaller min_nack_response_delay value, and the reliable sample stream can normalize more quickly.

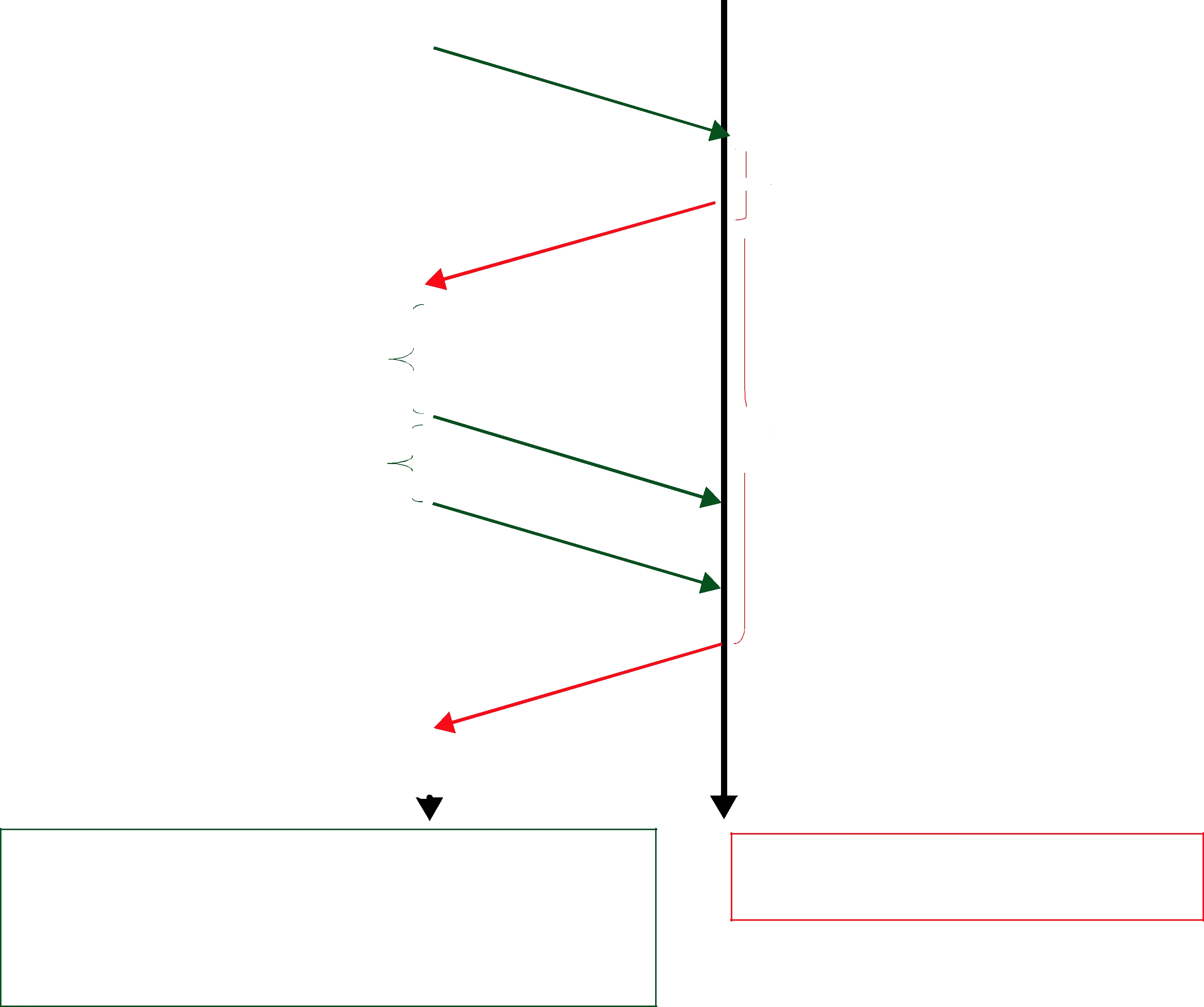

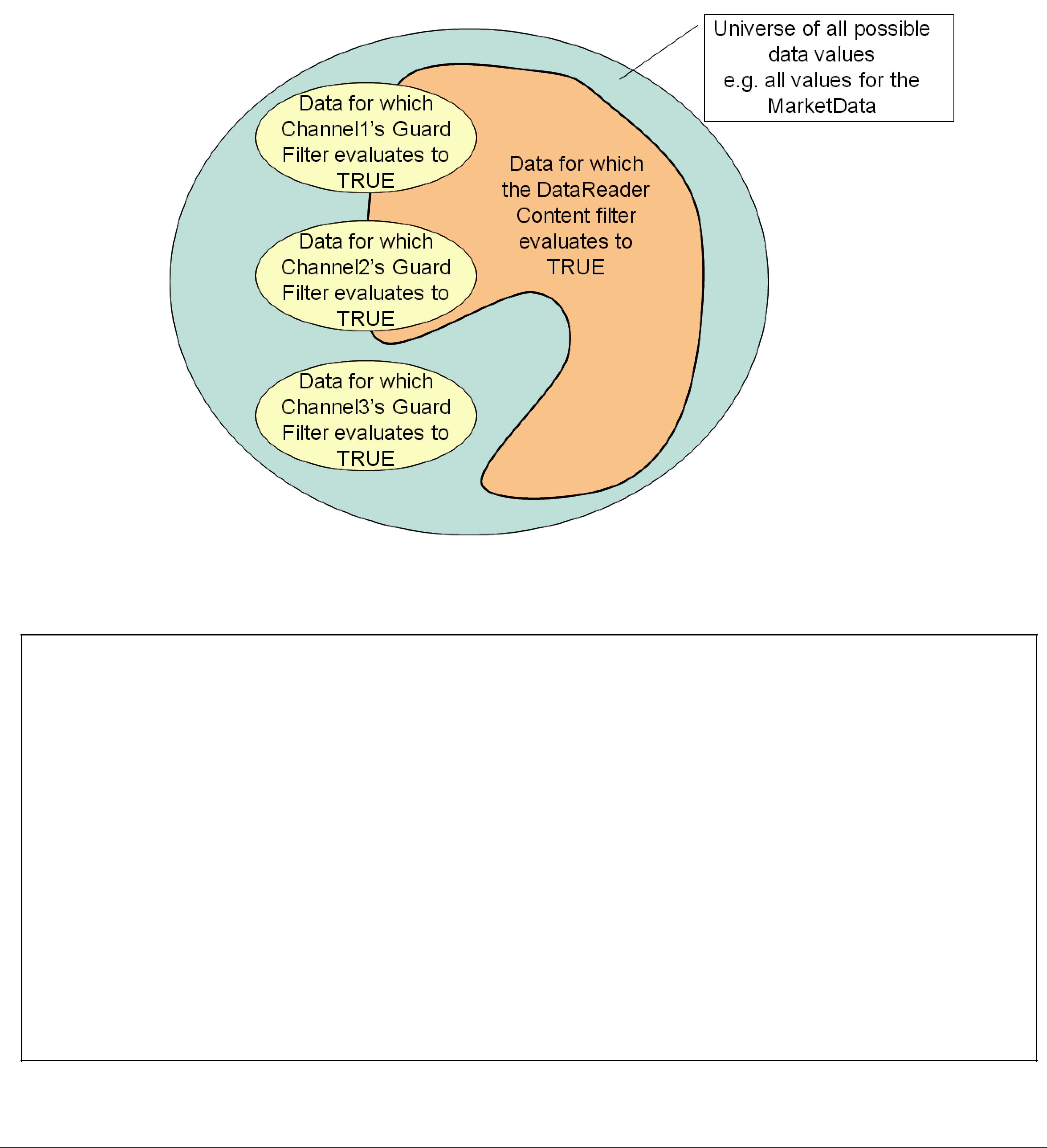

Figure 10.9 Resending Missing Samples due to Duplicate ACKNACKs

DataWriter DataReader

Send Sample “1”

Send Sample “2”

1

Send Sample “3”

Send Sample “4”

Resend Sample “2”  Send Sample “5”

Send Sample “5”

Resend Sample “2”

|

|

|

|

|

|

|

|

) |

||

|

|

|

|

|

|

|

2 |

|

|

|

|

|

|

|

|

|

( |

|

|

|

|

|

|

|

|

|

K |

|

|

|

|

|

|

|

|

|

C |

|

|

|

|

|

|

|

|

|

A |

|

|

|

|

|

|

|

|

|

N |

|

|

|

|

|

|

|

|

|

K |

|

|

|

|

|

|

|

|

|

C |

|

|

|

|

|

|

|

|

|

|

A |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

) |

||

|

|

|

|

|

|

|

2 |

|

||

|

|

|

|

|

|

( |

|

|

|

|

|

|

|

|

|

K |

|

|

|

|

|

|

|

|

|

C |

|

|

|

|

|

|

|

|

|

A |

|

|

|

|

|

|

|

|

|

N |

|

|

|

|

|

|

|

|

|

K |

|

|

|

|

|

|

|

|

|

C |

|

|

|

|

|

|

|

|

|

|

A |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

3

3 4

2 3 4

5

Space must be reserved for missing sample “2”.

Samples “3” and “4” are cached while waiting for missing sample “2”.

Sample “2” is dropped since it is older than the last sample that has been handed to the application.

10.3.4.7Disabling Positive Acknowledgements (disable_postive_acks_min_sample_keep_duration)

When ACKNACK storms are a primary concern in a system, an alternative to tuning heartbeat and ACKNACK response delays is to disable positive acknowledgments (ACKs) and rely just on NACKs to maintain reliability. Systems with

Normally when ACKs are enabled, strict reliability is maintained by the DataWriter, guaranteeing that a sample stays in its send queue until all DataReaders have positively acknowledged it (aside from relevant DURABILITY, HISTORY, and LIFESPAN QoS policies). When ACKs are disabled, strict reliability is no longer guaranteed, but the DataWriter should still keep the sample for a sufficient duration for

The keep duration should be configured for the expected

If the peak send rate is known and writer resources are available, the writer queue can be sized so that writes will not block. For this case, the queue size must be greater than the send rate multiplied by the keep duration.

10.3.5Avoiding Message Storms with DataReaderProtocol QosPolicy

DataWriters send data samples and heartbeats to DataReaders. A DataReader responds to a heartbeat by sending an acknowledgement that tells the DataWriter what the DataReader has received so far and what it is missing. If there are many DataReaders, all sending ACKNACKs to the same DataWriter at the same time, a message storm can result. To prevent this, you can set a delay for each DataReader, so they don’t all send ACKNACKs at the same time. This delay is set in the DATA_READER_PROTOCOL QosPolicy (DDS Extension) (Section 7.6.1).

If you have several DataReaders per DataWriter, varying this delay for each one can avoid ACKNACK message storms to the DataWriter. If you are not concerned about message storms, you do not need to change this QosPolicy.

Example:

reader_qos.protocol.rtps_reliable_reader.min_heartbeat_response_delay.sec = 0; reader_qos.protocol.rtps_reliable_reader.min_heartbeat_response_delay.nanosec = 0; reader_qos.protocol.rtps_reliable_reader.max_heartbeat_response_delay.sec = 0; reader_qos.protocol.rtps_reliable_reader.max_heartbeat_response_delay.nanosec =

0.5 * 1000000000UL; // 0.5 sec

As the name suggests, the minimum and maximum response delay bounds the random wait time before the response. Setting both to zero will force immediate response, which may be necessary for the fastest recovery in case of lost samples.

10.3.6Resending Samples to

The DURABILITY QosPolicy (Section 6.5.7) is also somewhat related to Reliability. Connext requires a finite time to "discover" or match DataReaders to DataWriters. If an application attempts to send data before the DataReader and DataWriter "discover" one another, then the sample will not actually get sent. Whether or not samples are resent when the DataReader and DataWriter eventually "discover" one another depends on how the DURABILITY and HISTORY QoS are set. The default setting for the Durability QosPolicy is VOLATILE, which means that the DataWriter will not store samples for redelivery to

Connext also supports the TRANSIENT_LOCAL setting for the Durability, which means that the samples will be kept stored for redelivery to

See also: Waiting for Historical Data (Section 7.3.6).

10.3.7Use Cases

This section contains advanced material that discusses practical applications of the reliability related QoS.

10.3.7.1Importance of Relative Thread Priorities

For high throughput, the Connext Event thread’s priority must be sufficiently high on the sending application. Unlike an unreliable writer, a reliable writer relies on internal Connext threads: the Receive thread processes ACKNACKs from the DataReaders, and the Event thread schedules the events necessary to maintain reliable data flow.

❏When samples are sent to the same or another application on the same host, the Receive thread priority should be higher than the writing thread priority (priority of the thread calling write() on the DataWriter). This will allow the Receive thread to process the messages as they are sent by the writing thread. A sustained reliable flow requires the reader to be able to process the samples from the writer at a speed equal to or faster than the writer emits.

❏The default Event thread priority is low. This is adequate if your reliable transfer is not sustained; queued up events will eventually be processed when the writing thread yields the CPU. The Connext can automatically grow the event queue to store all pending events. But if the reliable communication is sustained, reliable events will continue to be scheduled, and the event queue will eventually reach its limit. The default Event thread priority is unsuitable for maintaining a fast and sustained reliable communication and should be increased through the participant_qos.event.thread.priority. This value maps directly to the OS thread priority, see EVENT QosPolicy (DDS Extension) (Section 8.5.5)).

The Event thread should also be increased to minimize the reliable latency. If events are processed at a higher priority, dropped packets will be resent sooner.

Now we consider some practical applications of the reliability related QoS:

❏Aperiodic Use Case:

❏Aperiodic, Bursty (Section 10.3.7.3)

10.3.7.2Aperiodic Use Case:

Suppose you have aperiodically generated data that needs to be delivered reliably, with minimum latency, such as a series of commands (“Ready,” “Aim,” “Fire”). If a writing thread may block between each sample to guarantee reception of the just sent sample on the reader’s middleware end, a smaller queue will provide a smaller upper bound on the sample delivery time. Adequate writer QoS for this use case are presented in Figure 10.10.

Figure 10.10 QoS for an Aperiodic,

1

2

3

5//use these hard coded value unless you use a key

6

7

8

9

10

12// want to piggyback HB w/ every sample.

13

14

15

16

17

18

19

20//consider making

21

22

24// should be faster than the send rate, but be mindful of OS resolution

25

26

27alertReaderWithinThisMs * 1000000;

29

30

32// essentially turn off slow HB period

33

Line 1 (Figure 10.10): This is the default setting for a writer, shown here strictly for clarity.

Line 2 (Figure 10.10): Setting the History kind to KEEP_ALL guarantees that no sample is ever lost.

Line 3 (Figure 10.10): This is the default setting for a writer, shown here strictly for clarity. ‘Push’ mode reliability will yield lower latency than ‘pull’ mode reliability in normal situations where there is no sample loss. (See DATA_WRITER_PROTOCOL QosPolicy (DDS Extension) (Section 6.5.3).) Furthermore, it does not matter that each packet sent in response to a command will be small, because our data sent with each command is likely to be small, so that maximizing throughput for this data is not a concern.

Line 5 - Line 10 (Figure 10.10): For this example, we assume a single writer is writing samples one at a time. If we are not using keys (see Section 2.2.2), there is no reason to use a queue with room for more than one sample, because we want to resolve a sample completely before moving on to the next. While this negatively impacts throughput, it minimizes memory usage. In this example, a written sample will remain in the queue until it is acknowledged by all active readers (only 1 for this example).

Line 12 - Line 14 (Figure 10.10): The fastest way for a writer to ensure that a reader is

Line

Line

Line

❏Suppose that the

end, and assuming that this retry succeeds, the time to recover the sample from the original publication time is: alertReaderWithinThisMs + 50 sec + 25 sec.

If the OS is capable of

❏What if two packets are dropped in a row? Then the recovery time would be

2 * alertReaderWithinThisMs + 2 * 50 sec + 25 sec. If alertReaderWithinThisMs is 100 ms, the recovery time now exceeds 200 ms, and can perhaps degrade user experience.

Line

So if we set blockingTime to about 80 ms, we will have given enough chance for recovery. Of course, in a dynamic system, a reader may drop out at any time, in which case max_heartbeat_retries will be exceeded, and the unresponsive reader will be dropped by the writer. In either case, the writer can continue writing. Inappropriate values will cause a writer to prematurely drop a temporarily unresponsive (but otherwise healthy) reader, or be stuck trying unsuccessfully to feed a crashed reader. In the unfortunate case where a reader becomes temporarily unresponsive for a duration exceeding (alertReaderWithinThisMs * max_heartbeat_retries), the writer may issue gaps to that reader when it becomes active again; the dropped samples are irrecoverable. So estimating the worst case unresponsive time of all potential readers is critical if sample drop is unacceptable.

Line

Figure 10.11 shows how to set the QoS for the reader side, followed by a

Figure 10.11 QoS for an Aperiodic,

1

2

3

4// 1 is ok for normal use. 2 allows fast infinite loop

5

6

7

9

10

11

12

Line

Line

1.The sender application writes sample 1 to the reader. The receiver application processes it and sends a

2.The sender application writes sample 2 to the receiving application in response to response 1. Because the reader’s queue is 2, it can accept sample 2 even though it may not yet have acknowledged sample 1. Otherwise, the reader may drop sample 2, and would have to recover it later.

3.At the same time, the receiver application acknowledges sample 1, and frees up one slot in the queue, so that it can accept sample 3, which it on its way.

The above steps can be repeated

Line 7 (Figure 10.11): Since we are not using keys, there is just one instance.

Line

10.3.7.3Aperiodic, Bursty

Suppose you have aperiodically generated bursts of data, as in the case of a new aircraft approaching an airport. The data may be the same or different, but if they are written by a single writer, the challenge to this writer is to feed all readers as quickly and efficiently as possible when this burst of hundreds or thousands of samples hits the system.

❏If you use an unreliable writer to push this burst of data, some of them may be dropped over an unreliable transport such as UDP.

❏If you try to shape the burst according to however much the slowest reader can process, the system throughput may suffer, and places an additional burden of queueing the samples on the sender application.

❏If you push the data reliably as fast they are generated, this may cost dearly in repair packets, especially to the slowest reader, which is already burdened with application chores.

Connext pull mode reliability offers an alternative in this case by letting each reader pace its own data stream. It works by notifying the reader what it is missing, then waiting for it to request only as much as it can handle. As in the aperiodic

Line 1 (Figure 10.12): This is the default setting for a writer, shown here strictly for clarity.

Line 2 (Figure 10.12): Since we do not want any data lost, we want the History kind set to KEEP_ALL.

Figure 10.12 QoS for an Aperiodic, Bursty Writer

1

2

3

5//use these hard coded value until you use key

6

7

8

9= worstBurstInSample;

10

11

12

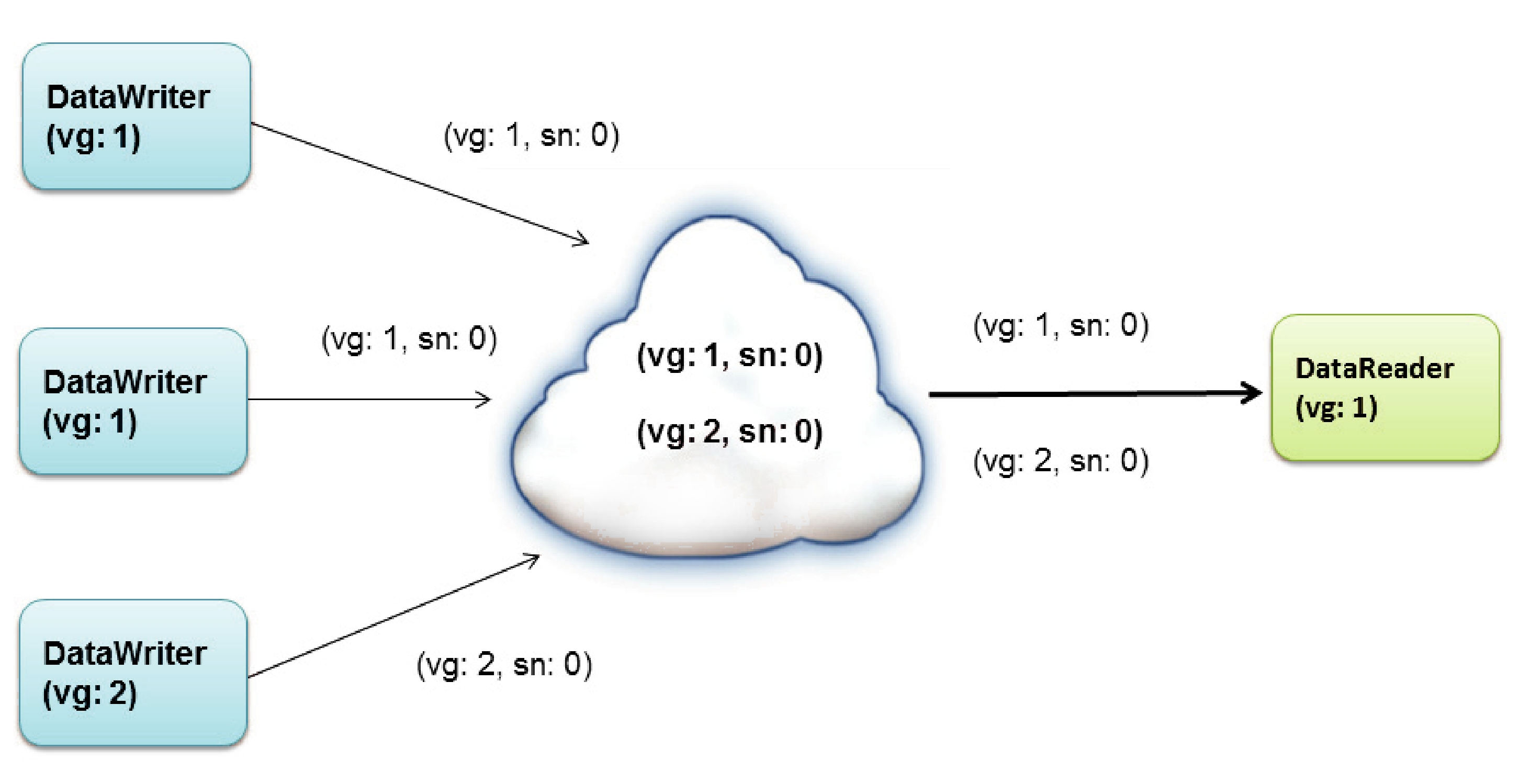

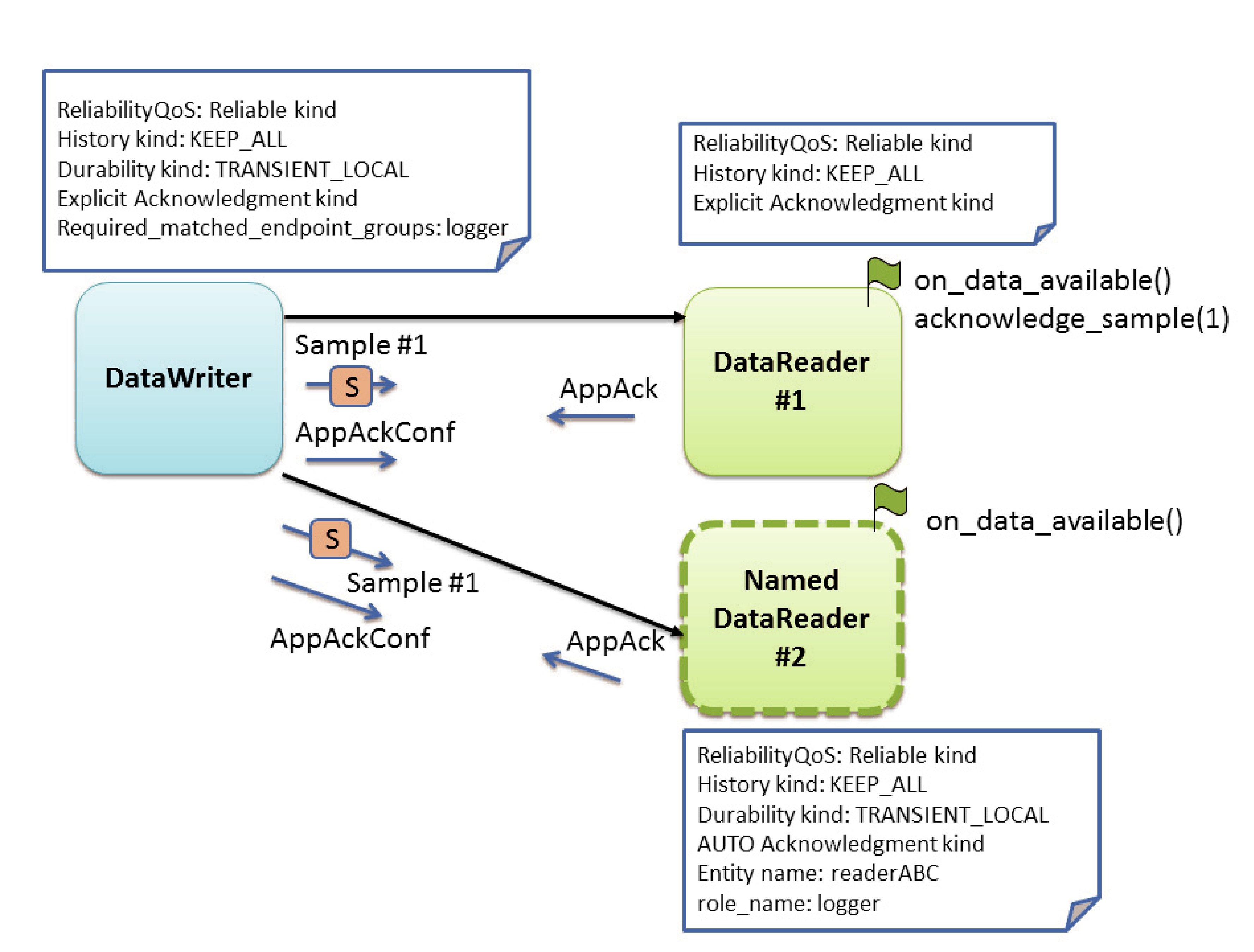

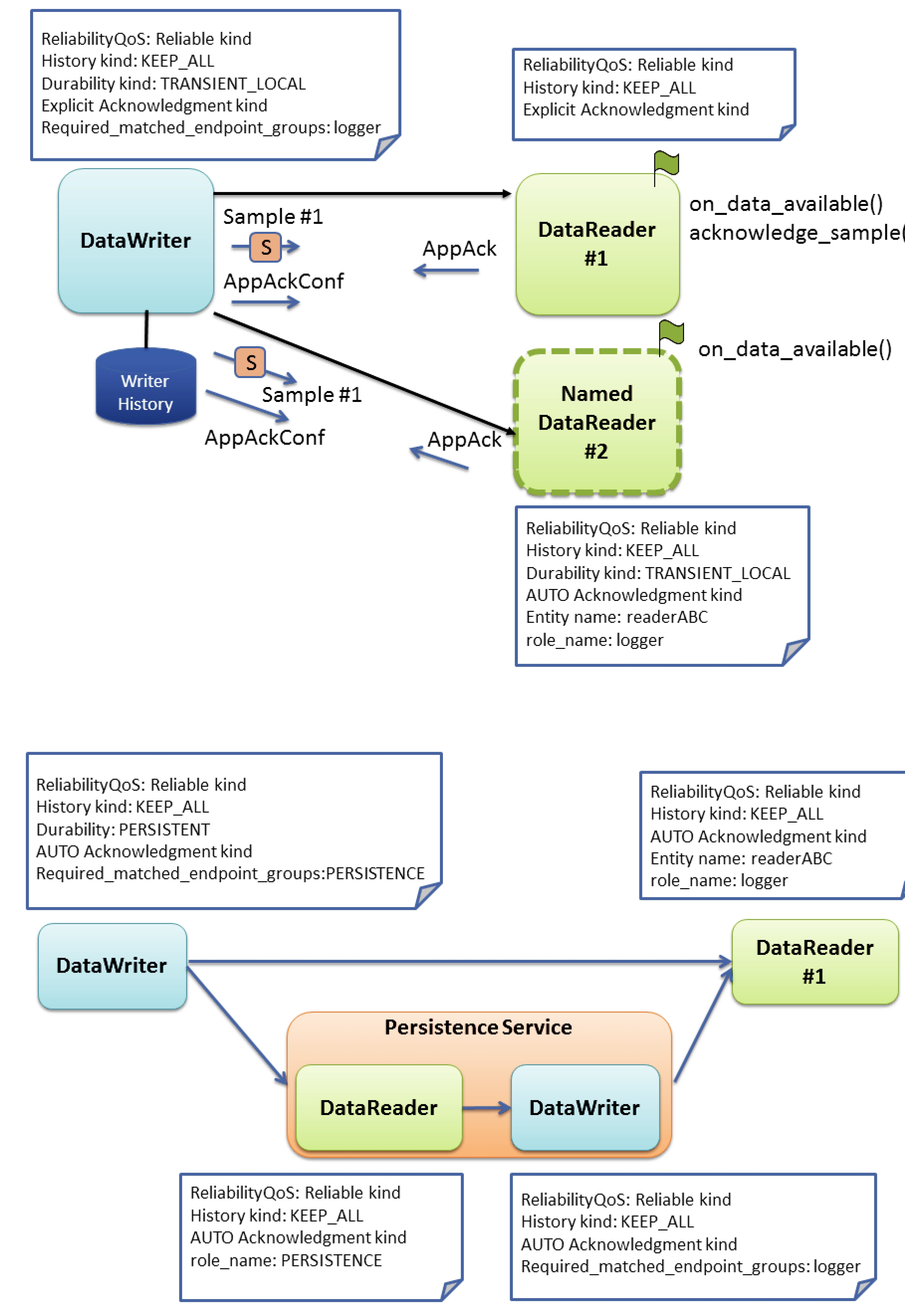

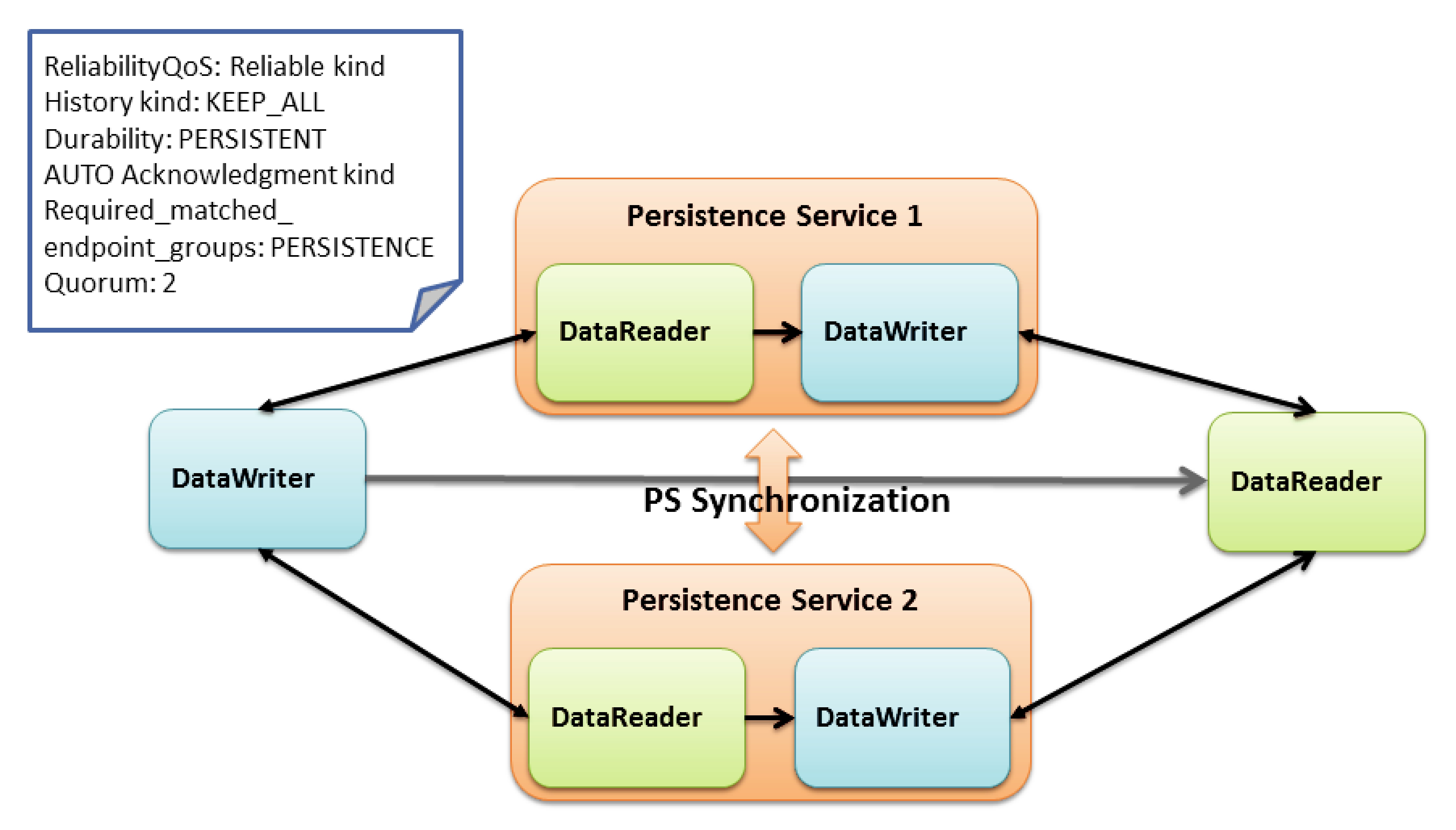

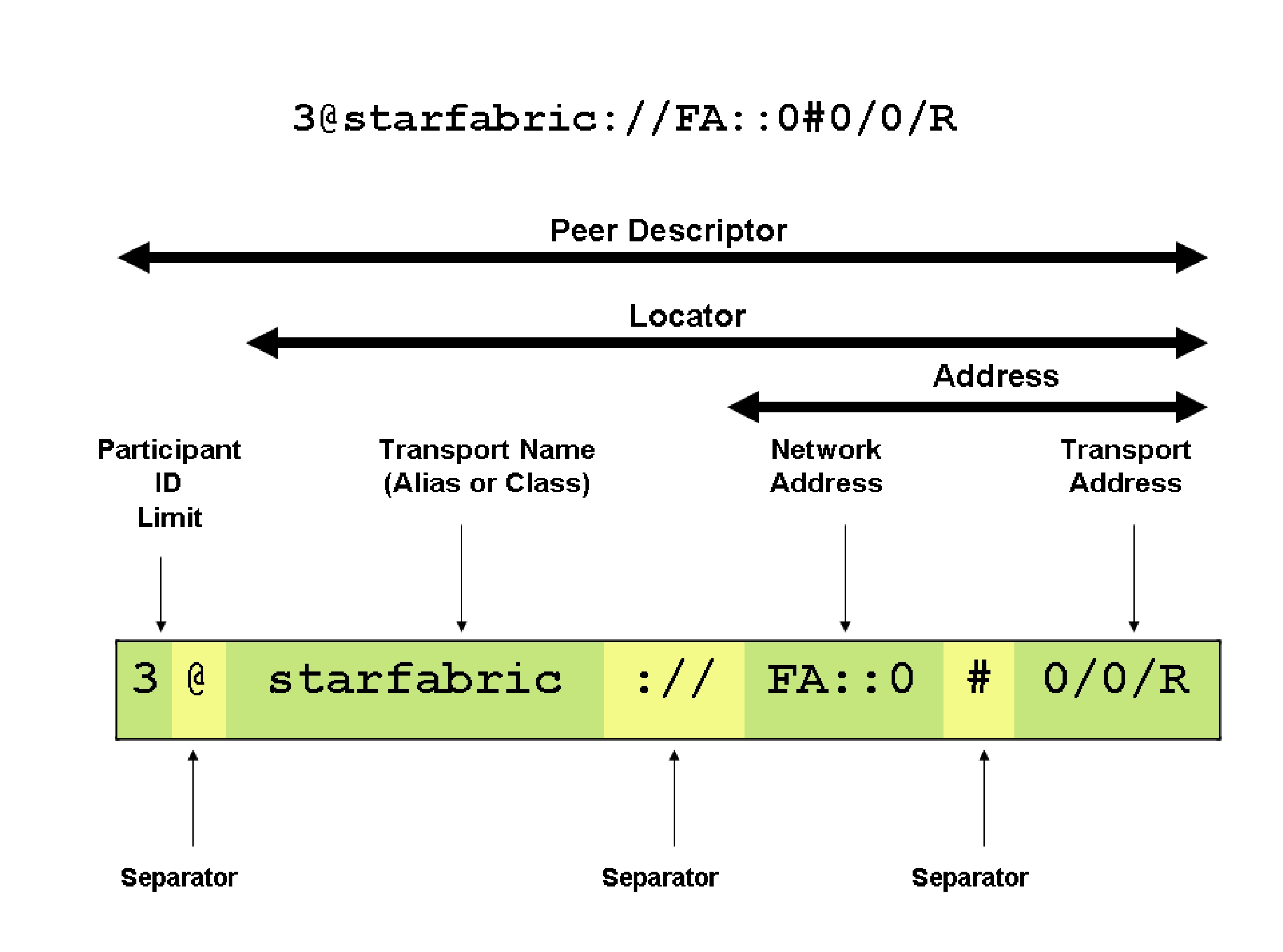

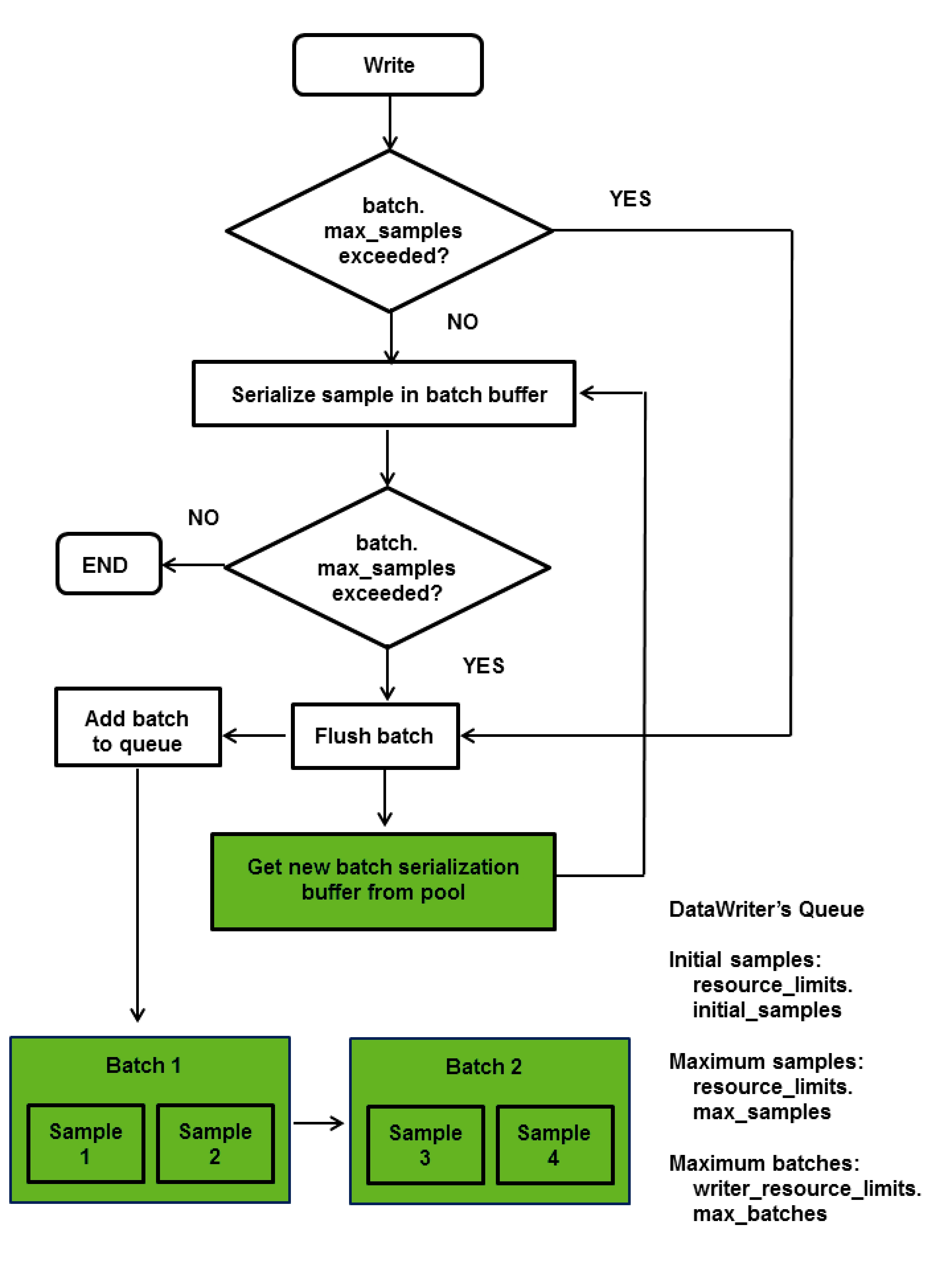

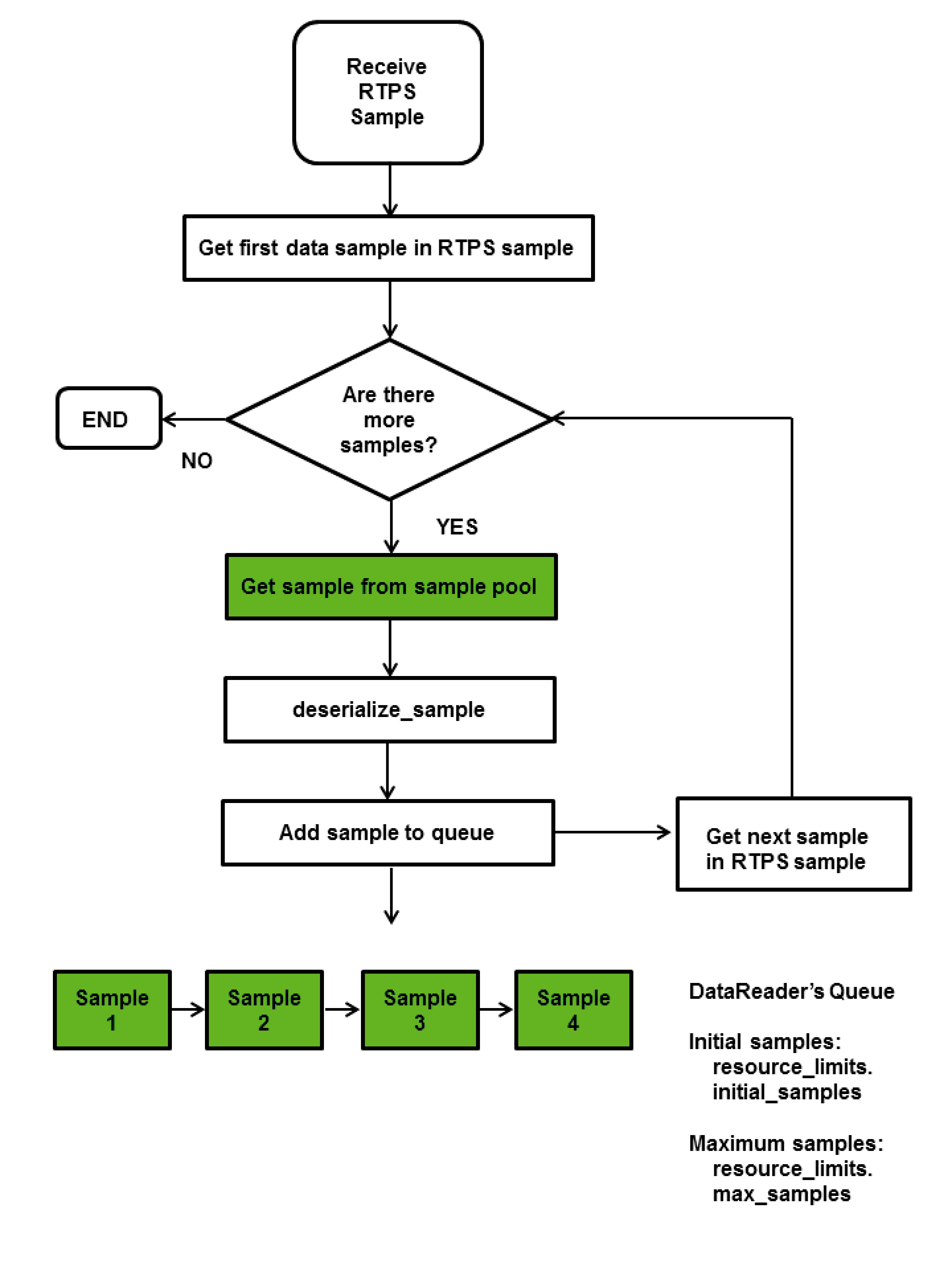

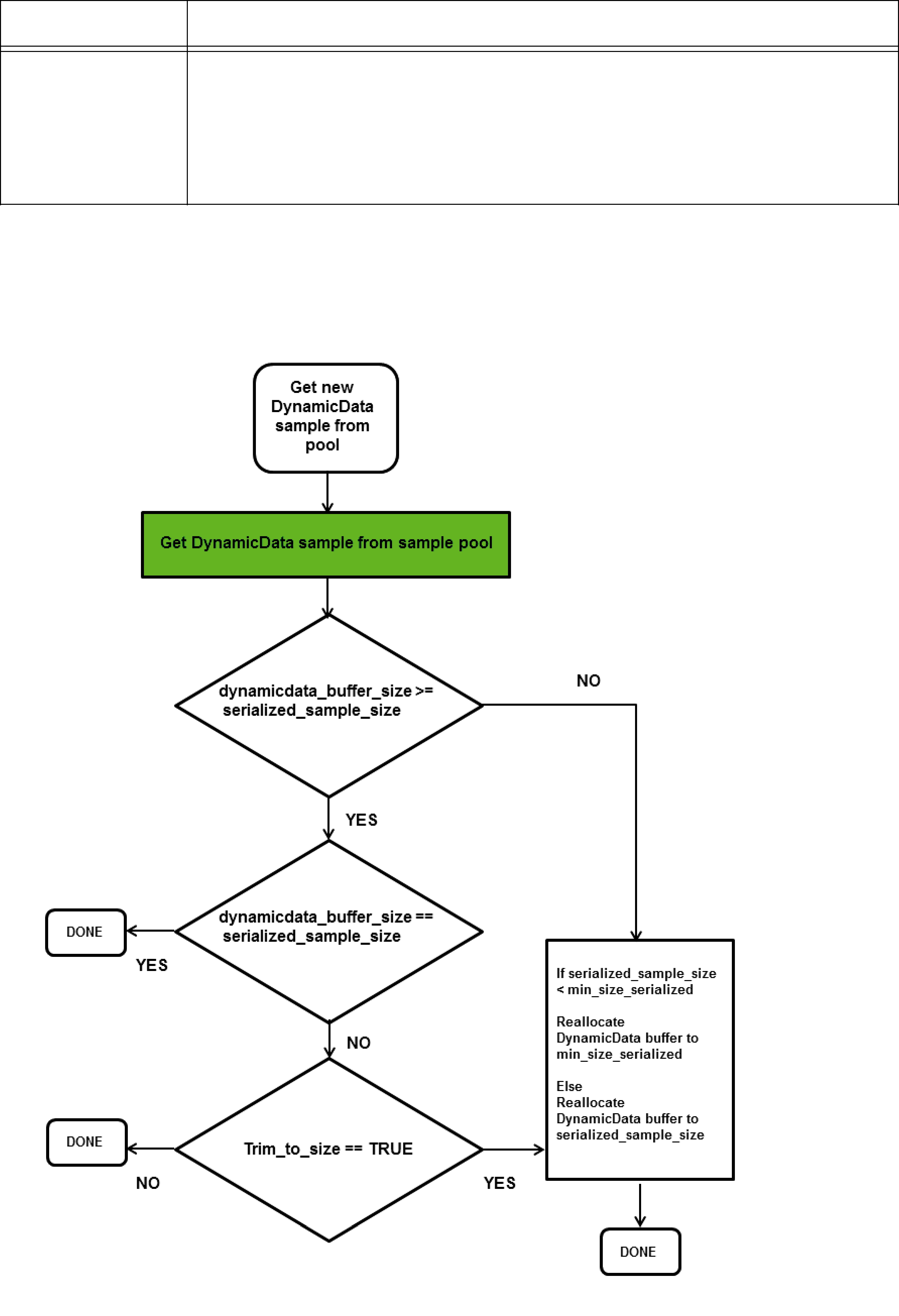

13// piggyback HB not used