Controlling Heartbeats and Retries with DataWriterProtocol QosPolicy

In the Connext DDS reliability model, the DataWriter sends DDS data samples and heartbeats to reliable DataReaders. A DataReader responds to a heartbeat by sending an ACKNACK, which tells the DataWriter what the DataReader has received so far.

In addition, the DataReader can request missing DDS samples (by sending an ACKNACK) and the DataWriter will respond by resending the missing DDS samples. This section describes some advanced timing parameters that control the behavior of this mechanism. Many applications do not need to change these settings. These parameters are contained in the DATA_WRITER_PROTOCOL QosPolicy (DDS Extension).

The protocol described in Overview of the Reliable Protocol uses very simple rules, such as piggybacking HB messages to each DATA message and responding immediately to ACKNACKs with the requested repair messages. While correct, this protocol cannot deliver optimum performance in more advanced use cases.

This section describes some of the parameters configurable by means of the rtps_reliable_writer structure in the DATA_WRITER_PROTOCOL QosPolicy (DDS Extension) and how they affect the behavior of the RTPS protocol.

How Often Heartbeats are Resent (heartbeat_period)

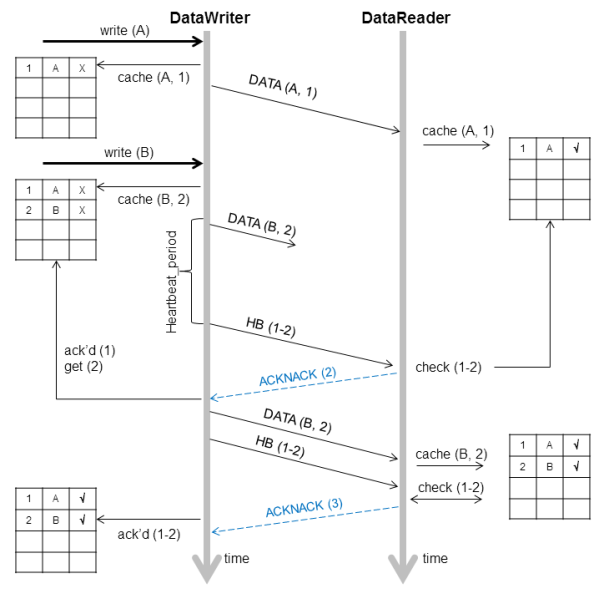

If a DataReader does not acknowledge a DDS sample that has been sent, the DataWriter resends the heartbeat. These heartbeats are resent at the rate set in the DATA_WRITER_PROTOCOL QosPolicy (DDS Extension), specifically its heartbeat_period field.

For example, a heartbeat_period of 3 seconds means that if a DataReader does not receive the latest DDS sample (for example, it gets dropped by the network), it might take up to 3 seconds before the DataReader realizes it is missing data. The application can lower this value when it is important that recovery from packet loss is very fast.

The basic approach of sending HB messages as a piggyback to DATA messages has the advantage of minimizing network traffic. However, there is a situation where this approach, by itself, may result in large latencies. Suppose there is a DataWriter that writes bursts of data, separated by relatively long periods of silence. Furthermore, assume that the last message in one of the bursts is lost by the network. This is the case shown for message DATA(B, 2) in Figure: Use of heartbeat_period. If HBs were only sent piggybacked to DATA messages, the DataReader would not realize it missed the ‘B’ DATA message with sequence number ‘2’ until the DataWriter wrote the next message. This may be a long time if data is written sporadically. To avoid this situation, Connext DDS can be configured so that HBs are sent periodically as long as there are DDS samples that have not been acknowledged, even if no data is being sent. The period at which these HBs are sent is configurable by setting the rtps_reliable_writer.heartbeat_period field in the DATA_WRITER_PROTOCOL QosPolicy (DDS Extension).

Note that a small value for the heartbeat_period will result in a small worst-case latency if the last message in a burst is lost. This comes at the expense of the higher overhead introduced by more frequent HB messages.
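The trade-off above can be illustrated with a small calculation. This is only a sketch, not part of the Connext DDS API: the function name is hypothetical, network latency is ignored, and it assumes the next DATA message written after the burst carries a piggyback HB.

```python
def worst_case_detection_delay(silence_after_burst_s, heartbeat_period_s=None):
    """Upper bound (seconds) on how long a DataReader may take to learn
    that the last sample of a burst was lost.

    Hypothetical helper for illustration only.
    heartbeat_period_s=None models a writer that only sends piggyback HBs.
    """
    if heartbeat_period_s is None:
        # Only the next DATA message (with its piggyback HB) reveals the gap.
        return silence_after_burst_s
    # A periodic HB arrives no later than one period after the burst,
    # unless the next write happens sooner.
    return min(silence_after_burst_s, heartbeat_period_s)


# A writer that stays silent for 60 s after each burst:
print(worst_case_detection_delay(60.0))        # piggyback HBs only
print(worst_case_detection_delay(60.0, 3.0))   # periodic HBs every 3 s
```

Lowering heartbeat_period shrinks this bound, at the cost of more HB (and ACKNACK) traffic while samples remain unacknowledged.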

Also note that the heartbeat_period should not be less than the rtps_reliable_reader.heartbeat_suppression_duration in the DATA_READER_PROTOCOL QosPolicy (DDS Extension); otherwise those HBs will be lost.

Figure: Use of heartbeat_period

How Often Piggyback Heartbeats are Sent (heartbeats_per_max_samples)

A DataWriter will automatically send heartbeats with new DDS samples to request regular ACKNACKs from the DataReader. These are called “piggyback” heartbeats.

A piggyback heartbeat is sent every [(current send-window size/heartbeats_per_max_samples)] number of DDS samples written.

The heartbeats_per_max_samples field is part of the rtps_reliable_writer structure in the DATA_WRITER_PROTOCOL QosPolicy (DDS Extension). If heartbeats_per_max_samples is set equal to max_send_window_size, a heartbeat will be sent with each DDS sample. A value of 8 means that a heartbeat will be sent with every 'current send-window size/8' DDS samples. For example, if the current send-window size is 1024, a heartbeat will be sent once every 128 DDS samples. If you set this to zero, DDS samples are sent without any piggyback heartbeat. The max_send_window_size field is part of the DATA_WRITER_PROTOCOL QosPolicy (DDS Extension).
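The arithmetic in this rule can be written out as a short sketch. The function name is hypothetical, and the use of integer division (with a floor of one sample) is an assumption made for illustration; the text above does not specify how non-integer ratios are rounded.

```python
def samples_per_piggyback_heartbeat(send_window_size, heartbeats_per_max_samples):
    """Number of DDS samples written between piggyback heartbeats.

    Hypothetical helper; returns None when piggyback heartbeats are disabled.
    Rounding via integer division is an assumption, not documented behavior.
    """
    if heartbeats_per_max_samples == 0:
        return None  # samples are sent without any piggyback heartbeat
    return max(1, send_window_size // heartbeats_per_max_samples)


print(samples_per_piggyback_heartbeat(1024, 8))     # 128
print(samples_per_piggyback_heartbeat(1024, 1024))  # 1 (a heartbeat with each sample)
print(samples_per_piggyback_heartbeat(1024, 0))     # None
```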

Figure: Basic RTPS Reliable Protocol and Figure: RTPS Reliable Protocol in the Presence of Message Loss seem to imply that a heartbeat (HB) is sent as a piggyback to each DATA message. However, in situations where data is sent continuously at high rates, piggybacking a HB to each message may result in too much overhead; not so much on the HB itself, but on the ACKNACKs that would be sent back as replies by the DataReader.

There are two reasons to send a HB:

- To request that a DataReader confirm the receipt of data via an ACKNACK, so that the DataWriter can remove it from its send queue and therefore prevent the DataWriter’s history from filling up (which could cause the write() operation to temporarily block). Note that data could also be removed from the DataWriter’s send queue if it is no longer relevant due to some other QoS, such as HISTORY KEEP_LAST (see "HISTORY QosPolicy") or LIFESPAN (see "LIFESPAN QoS Policy").

- To inform the DataReader of what data it should have received, so that the DataReader can send a request for missing data via an ACKNACK.

The DataWriter’s send queue can buffer many DDS data samples while it waits for ACKNACKs, and the DataReader’s receive queue can store out-of-order DDS samples while it waits for missing ones. So it is possible to send HB messages much less frequently than DATA messages. The ratio of piggyback HB messages to DATA messages is controlled by the rtps_reliable_writer.heartbeats_per_max_samples field in the DATA_WRITER_PROTOCOL QosPolicy (DDS Extension).

A HB is used to get confirmation from DataReaders so that the DataWriter can remove acknowledged DDS samples from the queue to make space for new DDS samples. Therefore, if the queue size is large, or new DDS samples are added slowly, HBs can be sent less frequently.

In Figure: Use of heartbeats_per_max_samples, the DataWriter sets heartbeats_per_max_samples to a value such that a piggyback HB is sent for every three DDS samples. The DataWriter first writes DDS samples A and B. The DataReader receives both. However, since no HB has been received, the DataReader won’t send back an ACKNACK. The DataWriter will still keep all the DDS samples in its queue. When the DataWriter sends DDS sample C, it will send a piggyback HB along with the DDS sample. Once the DataReader receives the HB, it will send back an ACKNACK for DDS samples up to sequence number 3, so that the DataWriter can remove all three DDS samples from its queue.

Figure: Use of heartbeats_per_max_samples
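The sequence in this figure can be reproduced with a toy model of the DataWriter's queue. This is a simplified sketch under stated assumptions: the function is hypothetical, the network is lossless, and the DataReader's ACKNACK is treated as arriving immediately after each piggyback HB.

```python
def simulate_piggyback(samples, samples_per_heartbeat):
    """Return the writer's queue contents after each write.

    Toy model: every `samples_per_heartbeat`-th sample carries a piggyback HB,
    the reader answers at once with an ACKNACK covering everything sent so far,
    and the writer then drops the acknowledged samples from its queue.
    """
    queue = []
    history = []
    for count, sample in enumerate(samples, start=1):
        queue.append(sample)
        if count % samples_per_heartbeat == 0:
            queue.clear()  # all queued samples were just acknowledged
        history.append(list(queue))
    return history


for step in simulate_piggyback(["A", "B", "C"], samples_per_heartbeat=3):
    print(step)
# ['A']
# ['A', 'B']
# []
```

The queue holds A and B until C is written, which matches the description: no ACKNACK is sent until the first HB arrives.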

Controlling Packet Size for Resent DDS Samples (max_bytes_per_nack_response)

A DataWriter may resend multiple missed DDS samples in the same packet. The max_bytes_per_nack_response field in the DATA_WRITER_PROTOCOL QosPolicy (DDS Extension) limits the size of this ‘repair’ packet. The reliable DataWriter will include at least one sample in the repair packet.

For example, if the DataReader requests 20 DDS samples, each 10K, and the max_bytes_per_nack_response is set to 100K, the DataWriter will only send the first 10 DDS samples at most. The DataReader will have to ACKNACK again to receive the other DDS samples.

Regardless of this setting, the maximum number of samples that can be part of a repair packet is limited to 32. This limit cannot be changed by configuration. In addition, the number of samples is limited by the value of NDDS_Transport_Property_t’s gather_send_buffer_count_max (see Setting the Maximum Gather-Send Buffer Count for UDPv4 and UDPv6).
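The limits described in this section can be combined into a rough estimate of how many samples one repair packet carries. This is a sketch, not the actual packing logic: the function name is hypothetical, all samples are assumed to have the same size, protocol headers are ignored, and the gather_send_buffer_count_max limit is not modeled.

```python
MAX_SAMPLES_PER_REPAIR_PACKET = 32  # fixed limit stated above


def samples_in_repair_packet(requested, sample_size_bytes, max_bytes_per_nack_response):
    """Estimate how many requested samples fit in one repair packet.

    Hypothetical helper; assumes uniform sample size and no header overhead.
    """
    if requested <= 0:
        return 0
    # At least one sample is always included, even if it exceeds the byte limit.
    fit_by_bytes = max(1, max_bytes_per_nack_response // sample_size_bytes)
    return min(requested, fit_by_bytes, MAX_SAMPLES_PER_REPAIR_PACKET)


K = 1024
print(samples_in_repair_packet(20, 10 * K, 100 * K))  # 10
print(samples_in_repair_packet(20, 10 * K, 1 * K))    # 1
print(samples_in_repair_packet(100, 100, 1_000_000))  # 32
```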

Controlling How Many Times Heartbeats are Resent (max_heartbeat_retries)

If a DataReader does not respond within max_heartbeat_retries number of heartbeats, it will be dropped by the DataWriter and the reliable DataWriter’s Listener will be called with a RELIABLE_READER_ACTIVITY_CHANGED Status (DDS Extension).

If the dropped DataReader becomes available again (perhaps its network connection was down temporarily), it will be added back to the DataWriter the next time the DataWriter receives some message (ACKNACK) from the DataReader.

When a DataReader is ‘dropped’ by a DataWriter, the DataWriter will not wait for the DataReader to send an ACKNACK before any DDS samples are removed. However, the DataWriter will still send data and HBs to this DataReader as normal.

The max_heartbeat_retries field is part of the DATA_WRITER_PROTOCOL QosPolicy (DDS Extension).

Treating Non-Progressing Readers as Inactive Readers (inactivate_nonprogressing_readers)

In addition to max_heartbeat_retries, if inactivate_nonprogressing_readers is set, then not only are non-responsive DataReaders considered inactive, but DataReaders sending non-progressing NACKs can also be considered inactive. A non-progressing NACK is one which requests the same oldest DDS sample as the previously received NACK. In this case, the DataWriter will not consider a non-progressing NACK as coming from an active reader, and hence will inactivate the DataReader if no new NACKs are received before max_heartbeat_retries number of heartbeat periods has passed.

One example for which it could be useful to turn on inactivate_nonprogressing_readers is when a DataReader’s (keep-all) queue is full of untaken historical DDS samples. Each subsequent heartbeat would trigger the same NACK, and nominally the DataReader would not be inactivated. A user not requiring strict-reliability could consider setting inactivate_nonprogressing_readers to allow the DataWriter to progress rather than being held up by this non-progressing DataReader.
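The interaction between max_heartbeat_retries and inactivate_nonprogressing_readers can be sketched as a small state machine. The class and method names below are hypothetical, and the model is deliberately simplified: each call represents one heartbeat and the DataReader's reply (if any) to it, and the exact point at which the real implementation counts a heartbeat as unanswered is an assumption.

```python
class ReaderActivityTracker:
    """Toy model of how a DataWriter might track one DataReader's activity."""

    def __init__(self, max_heartbeat_retries, inactivate_nonprogressing_readers=False):
        self.max_heartbeat_retries = max_heartbeat_retries
        self.inactivate_nonprogressing = inactivate_nonprogressing_readers
        self.unanswered = 0
        self.last_nack_oldest_sn = None
        self.active = True

    def heartbeat_round(self, nack_oldest_sn=None):
        """Send one heartbeat; nack_oldest_sn is the oldest sample requested
        in the reader's reply, or None if the reader stayed silent."""
        counts_as_activity = nack_oldest_sn is not None
        if counts_as_activity and self.inactivate_nonprogressing:
            # A NACK asking for the same oldest sample again is non-progressing.
            counts_as_activity = nack_oldest_sn != self.last_nack_oldest_sn
        if nack_oldest_sn is not None:
            self.last_nack_oldest_sn = nack_oldest_sn
        if counts_as_activity:
            self.unanswered = 0
            self.active = True  # a dropped reader is added back on activity
        else:
            self.unanswered += 1
            if self.unanswered >= self.max_heartbeat_retries:
                self.active = False
        return self.active


# A reader that keeps requesting sample 7 and never makes progress:
strict = ReaderActivityTracker(3, inactivate_nonprogressing_readers=False)
relaxed = ReaderActivityTracker(3, inactivate_nonprogressing_readers=True)
for _ in range(5):
    strict.heartbeat_round(nack_oldest_sn=7)
    relaxed.heartbeat_round(nack_oldest_sn=7)
print(strict.active, relaxed.active)  # True False
```

In this model the stuck reader holds up the writer indefinitely unless inactivate_nonprogressing_readers is set, which mirrors the keep-all example above.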

Coping with Redundant Requests for Missing DDS Samples (max_nack_response_delay)

When a DataWriter receives a request for missing DDS samples from a DataReader and responds by resending the requested DDS samples, it will ignore additional requests for the same DDS samples during the time period max_nack_response_delay.

The rtps_reliable_writer.max_nack_response_delay field is part of the DATA_WRITER_PROTOCOL QosPolicy (DDS Extension).

If your send period is smaller than the round-trip delay of a message, this can cause unnecessary DDS sample retransmissions due to redundant ACKNACKs. In this situation, an ACKNACK triggered by an out-of-order DDS sample is not received before the next DDS sample is sent. When a DataReader receives the next message, it will send another ACKNACK for the missing DDS sample. As illustrated in Figure: Resending Missing Samples due to Duplicate ACKNACKs, duplicate ACKNACK messages cause another resending of missing DDS sample “2” and lead to wasted CPU usage on both the publication and the subscription sides.

Figure: Resending Missing Samples due to Duplicate ACKNACKs

While these redundant messages provide an extra cushion for the level of reliability desired, you can conserve CPU and network bandwidth by limiting how often the same ACKNACK messages are sent; this is controlled by min_nack_response_delay.

Reliable subscriptions are prevented from resending an ACKNACK within min_nack_response_delay seconds from the last time an ACKNACK was sent for the same DDS sample. Our testing shows that the default min_nack_response_delay of 0 seconds achieves an optimal balance for most applications on typical Ethernet LANs.

However, if your system has very slow computers and/or a slow network, you may want to consider increasing min_nack_response_delay. Sending an ACKNACK and resending a missing DDS sample inherently takes a long time in this system. So you should allow a longer time for recovery of the lost DDS sample before sending another ACKNACK. In this situation, you should increase min_nack_response_delay.

If your system consists of a fast network or computers, and the receive queue size is very small, then you should keep min_nack_response_delay very small (such as the default value of 0). If the queue size is small, recovering a missing DDS sample is more important than conserving CPU and network bandwidth (new DDS samples that are too far ahead of the missing DDS sample are thrown away). A fast system can cope with a smaller min_nack_response_delay value, and the reliable DDS sample stream can normalize more quickly.
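The suppression rule described above (no repeated ACKNACK for the same DDS sample within the delay window) can be sketched as follows. This is an illustrative model only: the class and method names are hypothetical, timestamps are passed in explicitly rather than read from a clock, and the real middleware's bookkeeping may differ.

```python
class NackThrottle:
    """Toy model: suppress repeated requests for the same sample
    when they fall within `min_delay_s` of the previous request."""

    def __init__(self, min_delay_s):
        self.min_delay_s = min_delay_s
        self.last_sent_s = {}  # sequence number -> time of last request

    def should_send(self, sequence_number, now_s):
        last = self.last_sent_s.get(sequence_number)
        if last is not None and now_s - last < self.min_delay_s:
            return False  # too soon after the previous request
        self.last_sent_s[sequence_number] = now_s
        return True


throttle = NackThrottle(min_delay_s=0.010)
print(throttle.should_send(2, now_s=0.000))  # True: first request for sample 2
print(throttle.should_send(2, now_s=0.005))  # False: within the 10 ms window
print(throttle.should_send(2, now_s=0.020))  # True: window has elapsed
```

With a delay of 0, as in the default mentioned above, nothing is suppressed and every request goes out immediately.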

Disabling Positive Acknowledgements (disable_positive_acks_min_sample_keep_duration)

When ACKNACK storms are a primary concern in a system, an alternative to tuning heartbeat and ACKNACK response delays is to disable positive acknowledgments (ACKs) and rely just on NACKs to maintain reliability. Systems with non-strict reliability requirements can disable ACKs to reduce network traffic and directly solve the problem of ACK storms. ACKs can be disabled for the DataWriter and the DataReader; when disabled for the DataWriter, none of its DataReaders will send ACKs, whereas disabling it at the DataReader allows per-DataReader configuration.

Normally when ACKs are enabled, strict reliability is maintained by the DataWriter, guaranteeing that a DDS sample stays in its send queue until all DataReaders have positively acknowledged it (aside from relevant DURABILITY, HISTORY, and LIFESPAN QoS policies). When ACKs are disabled, strict reliability is no longer guaranteed, but the DataWriter should still keep the DDS sample for a sufficient duration for ACK-disabled DataReaders to have a chance to NACK it. Thus, a configurable “keep-duration” (disable_positive_acks_min_sample_keep_duration) applies for DDS samples written for ACK-disabled DataReaders, where DDS samples are kept in the queue for at least that keep-duration. After the keep-duration has elapsed for a DDS sample, the DDS sample is considered to be “acknowledged” by its ACK-disabled DataReaders.

The keep duration should be configured for the expected worst-case time from when the DDS sample is written to when a NACK for the DDS sample could be received. If set too short, the DDS sample may no longer be queued when a NACK requests it, which is the cost of not enforcing strict reliability.

If the peak send rate is known and writer resources are available, the writer queue can be sized so that writes will not block. For this case, the queue size must be greater than the send rate multiplied by the keep duration.
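As a worked example of this sizing rule, the sketch below computes the smallest queue size that is strictly greater than the send rate multiplied by the keep duration. The function name is hypothetical, and the calculation assumes a constant peak rate; it is not a Connext DDS API.

```python
import math


def min_queue_size(peak_send_rate_hz, keep_duration_s):
    """Smallest whole number of samples strictly greater than
    peak_send_rate_hz * keep_duration_s (hypothetical helper)."""
    return math.floor(peak_send_rate_hz * keep_duration_s) + 1


# 1000 samples/s with a 0.5 s keep duration: up to 500 samples may still be
# inside their keep duration, so the queue needs room for at least 501.
print(min_queue_size(1000, 0.5))  # 501
print(min_queue_size(100, 2))     # 201
```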

© 2018 RTI