5. Basic QoS

| Prerequisites |

|

| Time to complete | 1 hour |

| Concepts covered in this module |

|

Connext DDS provides a variety of configuration options to change how your data is delivered and to fine-tune the performance of your system. These configuration options are called Quality of Service, or QoS. QoS settings are configured on DataWriters and DataReaders (and on other DDS objects, such as Publishers and Subscribers).

Some of the basic QoS policies configured on DataWriters and DataReaders include the following:

- Reliability QoS Policy: Should the arrival of each sample be guaranteed, or is best-effort enough and the risk of missing a sample acceptable?

- History QoS Policy: How much data should be stored for reliability and durability purposes?

- Resource Limits QoS Policy: What is the maximum allowed size of a DataWriter’s or DataReader’s queue due to memory constraints?

- Durability QoS Policy: Should data be stored and automatically sent to new DataReaders as they start up?

- Deadline QoS Policy: How do we detect that streaming data is being sent at an acceptable rate?

There are many more QoS policies that control discovery, fault-tolerance, and more. We will focus on just a few in this module. For a broader look at the QoS policies available, see the QoS Reference Guide. Although we will be focusing on QoS policies that are set on DataWriters and DataReaders, you can set QoS policies on other DDS objects; in Hands-On 1: Update One QoS Profile in the Monitoring/Control Application, we will set a QoS policy on a DomainParticipant.

5.1. Request-Offered QoS Policies

Some QoS policies are “Request-Offered,” meaning that a DataWriter offers a level of service, and a DataReader requests a level of service. If the DataWriter offers a level of service that’s the same as or higher than the DataReader requests, the QoS policies are matching. If the DataReader requests a higher level of service than the DataWriter is offering, the QoS policies are considered “incompatible,” and the DataWriter will not send data to the DataReader. Request-Offered semantics are abbreviated with the term “RxO.”

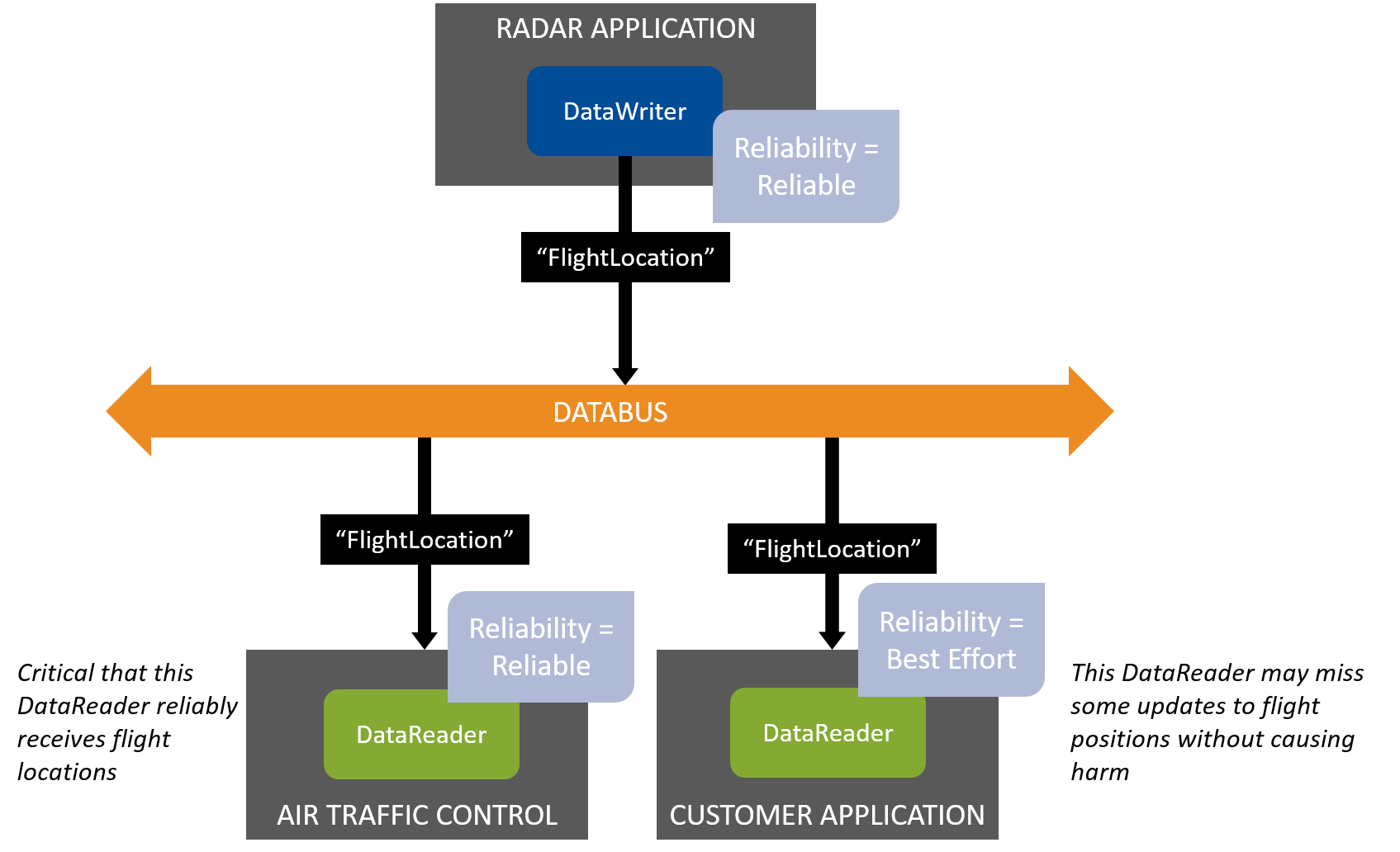

One example of a Request-Offered QoS policy is Reliability. Reliable delivery is considered a higher level of service than best-effort delivery. Although a DataWriter may offer reliable delivery, not every DataReader it’s communicating with needs reliability. Let’s look at an example of a system that tracks aircraft. Some DataReaders—such as those in the air traffic control application—need the aircraft locations reliably, meaning they cannot miss an update. Other DataReaders—such as those in an application that updates flight times for customers to view departures and arrivals—do not need to receive every flight position update. The radar application DataWriter will send aircraft positions to all the DataReaders that need it, but not all of those DataReaders need it reliably.

Figure 5.1 The Customer Application’s DataReader does not need flight location data reliably. The Radar Application’s DataWriter can send to both DataReaders because its Reliability QoS Policy is the same as or higher than theirs.

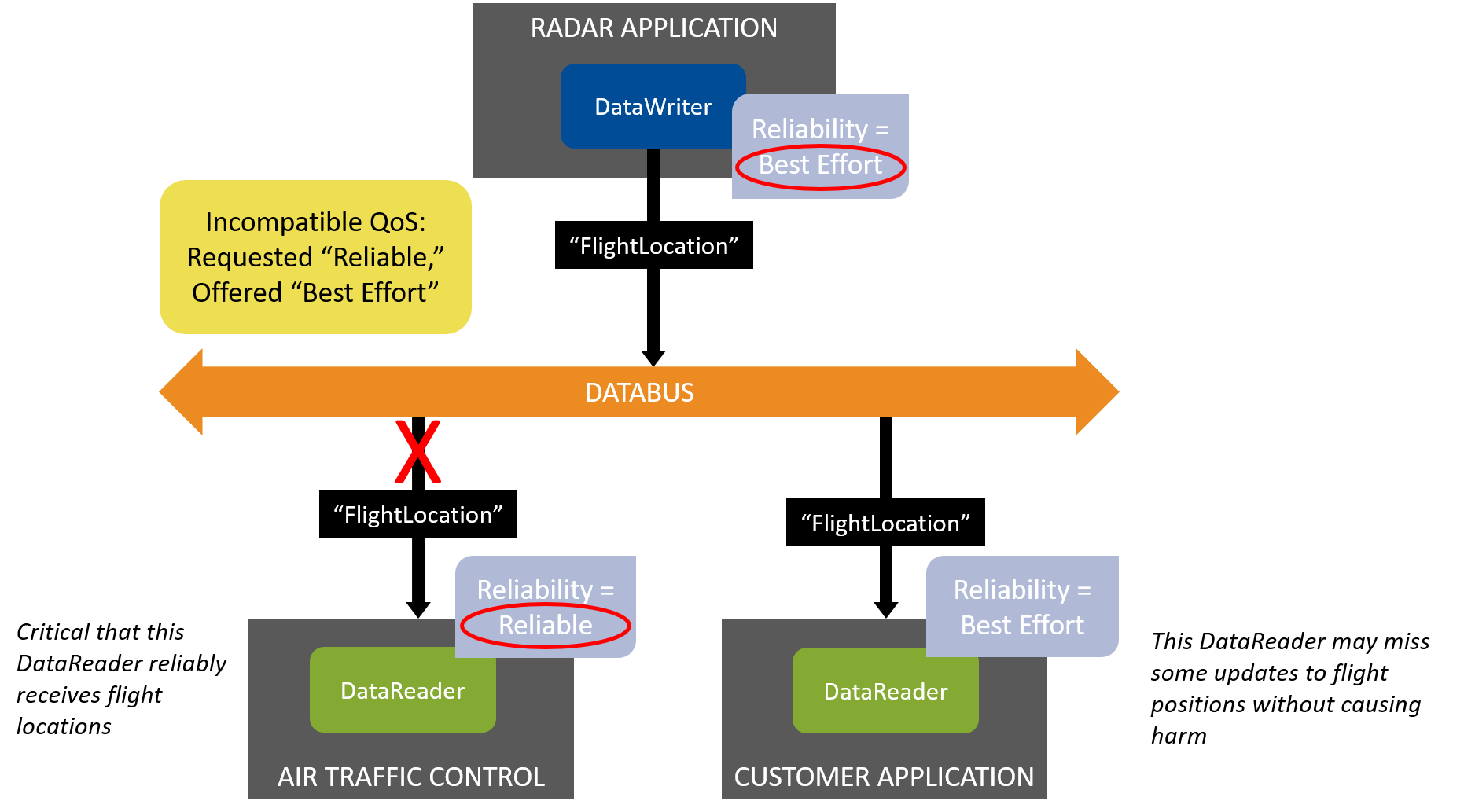

Now imagine that the system in Figure 5.1 is misconfigured, so the DataWriter offers Best Effort data, which is a lower level of service. In this situation, the Air Traffic Control Application can’t receive data reliably, even though it is critical that it receive every update. This is an error in the configuration of the system, and Connext DDS treats it that way: the DataWriter and DataReader of these applications will not communicate, and the DataReader and DataWriter will instead be notified that they have incompatible QoS policies.

Figure 5.2 The DataWriter is misconfigured to offer only Best Effort reliability, and now it does not match with the Reliable DataReader. The DataWriter is still compatible with the Customer Application’s DataReader.
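The Request-Offered rule from the aircraft example can be sketched in a few lines of standard C++. This is a conceptual model only, not the Connext DDS API: the names `ReliabilityKind`, `is_compatible`, `ReaderRequest`, and `matched_readers` are hypothetical and exist purely for illustration.

```cpp
#include <string>
#include <vector>

// Conceptual model only (not the Connext DDS API): reliability kinds
// ordered from the lowest to the highest level of service.
enum class ReliabilityKind { BestEffort = 0, Reliable = 1 };

// Request-Offered rule: the pair is compatible when the offered level
// is the same as or higher than the requested level.
bool is_compatible(ReliabilityKind offered, ReliabilityKind requested)
{
    return static_cast<int>(offered) >= static_cast<int>(requested);
}

// Hypothetical helper type: a named DataReader and the level it requests.
struct ReaderRequest {
    std::string name;
    ReliabilityKind requested;
};

// Returns the names of the readers that a writer offering `offered`
// would match under the Request-Offered rule.
std::vector<std::string> matched_readers(
        ReliabilityKind offered,
        const std::vector<ReaderRequest>& readers)
{
    std::vector<std::string> matched;
    for (const auto& reader : readers) {
        if (is_compatible(offered, reader.requested)) {
            matched.push_back(reader.name);
        }
    }
    return matched;
}
```

With a Reliable air traffic control reader and a Best Effort customer reader, a writer offering `Reliable` matches both, while a writer offering `BestEffort` matches only the customer reader, as in Figure 5.2.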

In previous modules, our applications have only been notified of data being available (see Details of Receiving Data in Section 2). In Hands-On 3: Incompatible QoS Notification, we will update one of the applications to receive incompatible QoS notifications in addition to Data Available notifications.

Not all Quality of Service policies have Request-Offered semantics. For example, the History QoS Policy that we will discuss below is not request-offered: DataWriters and DataReaders can have their own history settings, independent of each other; therefore, their History QoS policies do not need to match. You can check which QoS policies do and do not have request-offered semantics by looking at the RxO column in the QoS Reference Guide.

5.2. Some Basic QoS Policies

5.2.1. Reliability and History QoS Policies

The Reliability QoS Policy and History QoS Policy work together to determine how reliably data gets sent.

5.2.1.1. “Best Effort” Reliability

In Introduction to Data Types, we introduced one data flow pattern where the QoS setting isn’t Reliable, called “Streaming Sensor Data.” Remember that Streaming Sensor Data has these characteristics:

- Usually sent rapidly

- Usually sent periodically

- When data is lost over the network, it is more important to get the next update than to wait for retransmission of the lost update

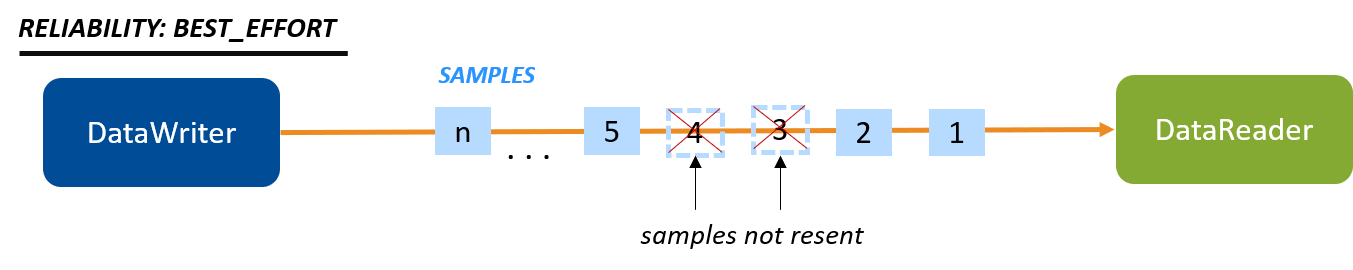

Because of these characteristics, streaming sensor data is generally not configured to be reliable. It is configured with “Best Effort” reliability. It is one end of the spectrum of how reliably your data can be sent.

Figure 5.3 Best-Effort Reliability: Samples that the DataReader did not receive are not resent.

| Reliability (RxO) | Level (Lowest to Highest) | Definition |
|---|---|---|
| kind | BEST_EFFORT | Do not send data reliably. If samples are lost, they are not resent. |
| | RELIABLE | Send data reliably. Resend lost samples, depending on the History QoS Policy and Resource Limits QoS Policy. |

5.2.1.2. “Reliable” Reliability + “Keep All” History

The other end of the spectrum is a pattern called “Event and Alarm Data.” The typical characteristics of event and alarm data are:

- May be sent rapidly

- Sent intermittently

- Important to see every update for events and alarms that occur

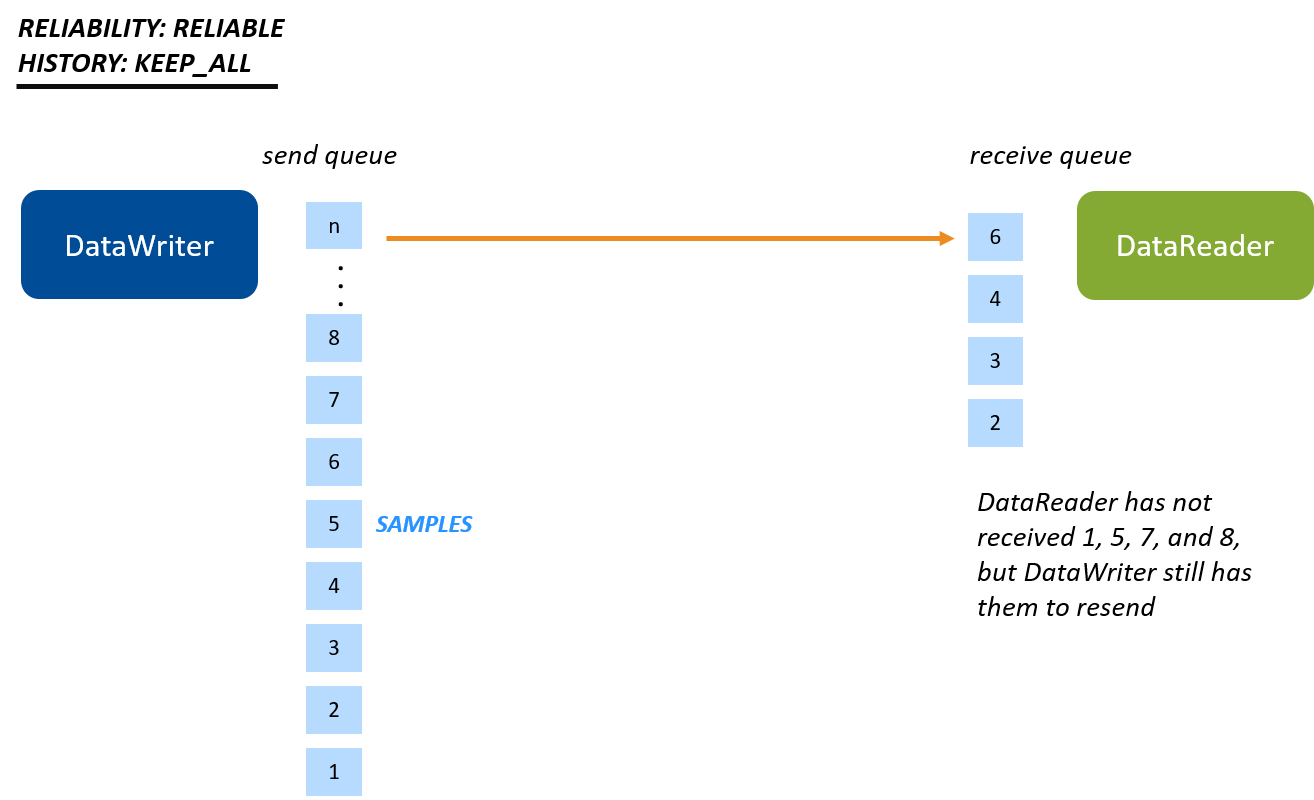

Event and Alarm Data requires a level of reliability where all data is kept for reliable DataReaders. This means that Connext DDS will try to resend all lost data, and it will maintain a queue of data that has not been delivered to the existing DataReaders. It also means that a DataWriter will not overwrite data in its queue until all DataReaders have received the previously sent data (or have gone offline). This level of reliability is set up using:

- Reliability kind = RELIABLE

- History kind = KEEP_ALL

Figure 5.4 Keep-All Reliability: Now there is a queue, and all samples are kept in the queue. Samples cannot be overwritten until they are received. (More details and caveats are explained in Reliable Communications, in the RTI Connext DDS Core Libraries User’s Manual.)

5.2.1.3. “Reliable” Reliability + “Keep Last” History

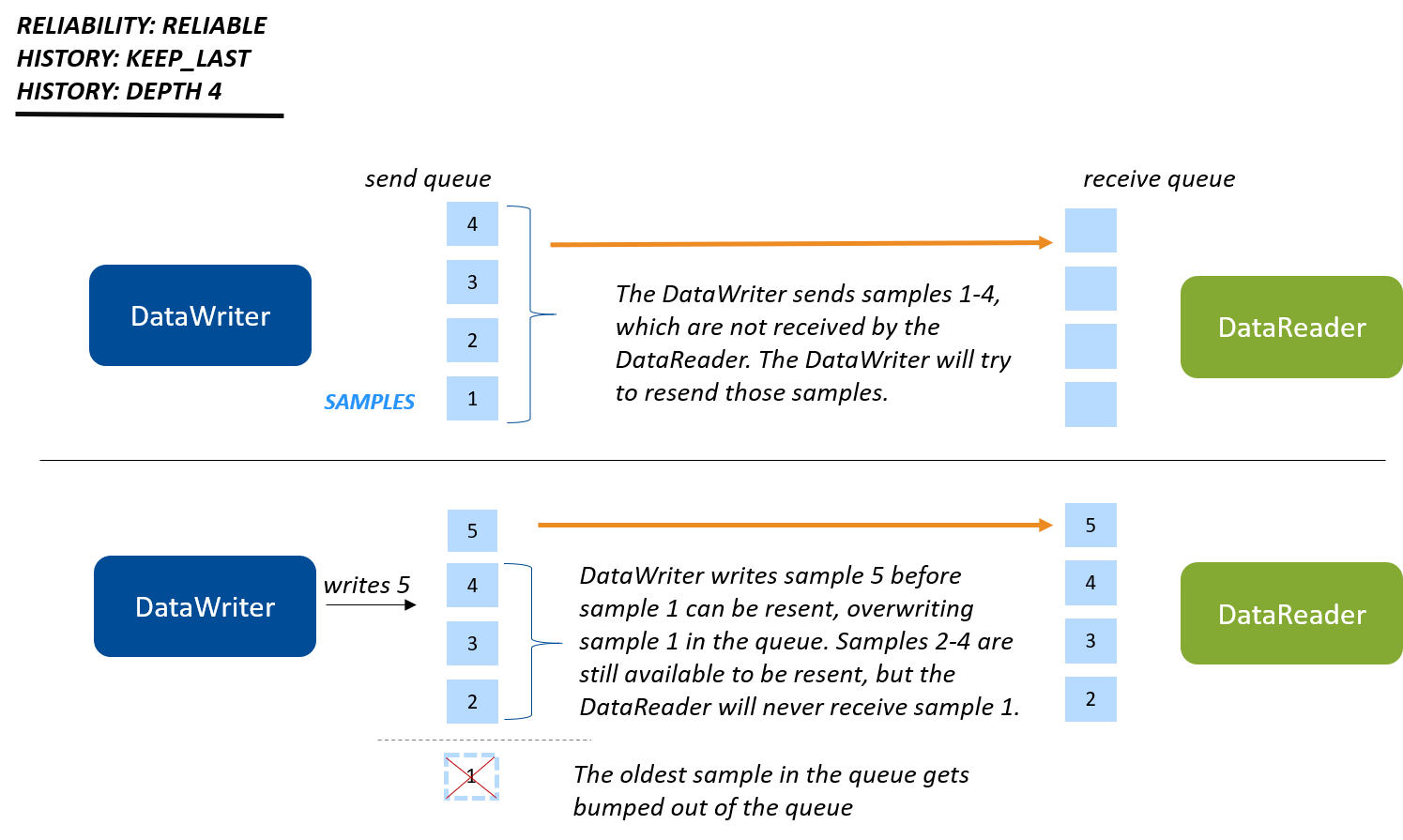

There is one more reliability configuration between “Best Effort” and “Keep-All,” called Keep-Last Reliability.

Keep-Last Reliability is a configuration where the last N samples sent are reliably delivered. This allows the DataWriter to overwrite older samples with newer samples, even if some DataReaders have not received those older samples. This configuration is set up using:

- Reliability kind = RELIABLE

- History kind = KEEP_LAST

- History depth = Number of samples to keep for each instance

Figure 5.5 Keep-Last Reliability (unkeyed or with a single instance): In this example, you have four slots open on the writer side to keep samples that have not yet been received by the reader.

Imagine that the DataWriter in Figure 5.5 rapidly writes 10 samples. Four of those samples will overwrite the four samples that are kept in the queue; the next four will overwrite those four, and so on. The DataReader might accept those samples as rapidly as the DataWriter writes them—if not, some of them might be lost.

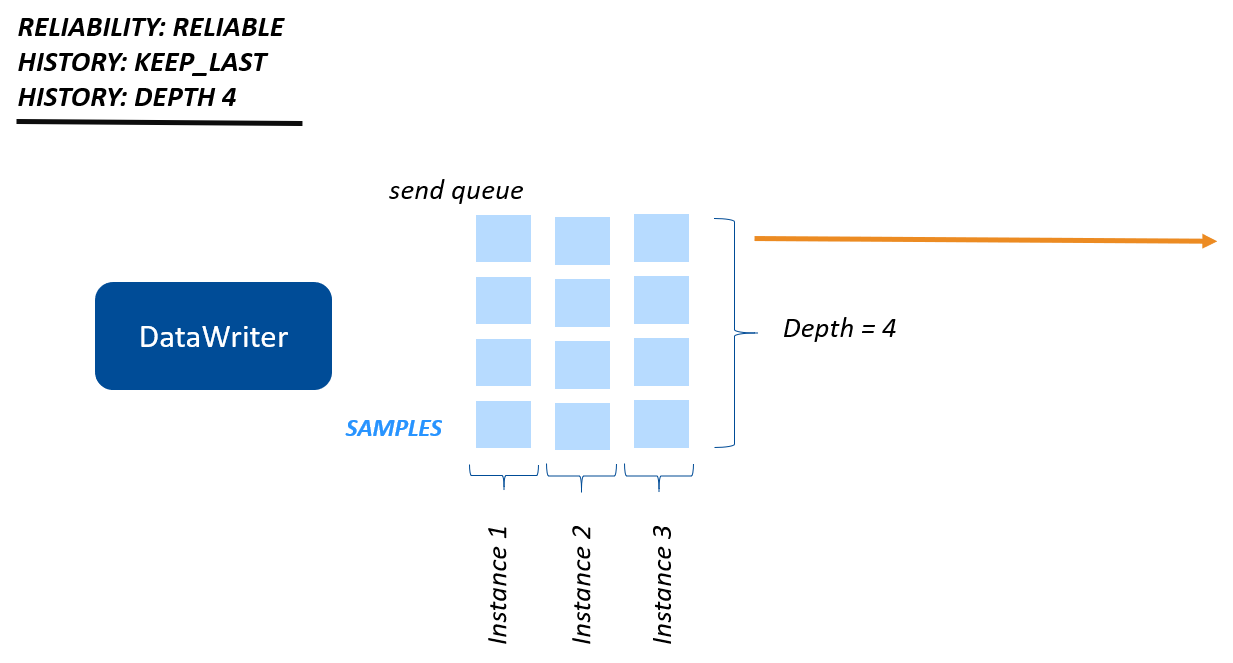

There is one important feature of how the History depth works that makes it important for many design patterns: the History depth QoS setting is applied per instance. This means that you specify a History depth of N samples, and Connext DDS will reliably deliver the last N samples of each instance (for example, of each flight). It may not be obvious why this is so powerful at first, but this is the basis for the State Data pattern and we’ll see it at work in the last hands-on exercise in this module. (There is another important piece of the State Data pattern: Durability, which sends the current state to late-joining DataReaders. We’ll talk about this in the Durability QoS Policy.)

Figure 5.6 Keep-Last Reliability (with multiple instances): A history of four samples per instance is available to be reliably delivered to a DataReader.

We’ve been focused on the DataWriter’s queue when talking about Keep-Last Reliability, but the behavior is the same for the DataReader: the DataReader queue keeps History depth samples for each instance, and when a new sample arrives, it is allowed to overwrite an existing sample in the DataReader’s queue for that instance.
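The per-instance behavior of the History depth can be illustrated with a small standard C++ model. This is a sketch under stated assumptions, not how Connext DDS implements its queues: the class `KeepLastHistory` is hypothetical, instances are identified by a string key, and samples are plain integers.

```cpp
#include <cstddef>
#include <deque>
#include <map>
#include <string>

// Conceptual model only (not the Connext DDS API): a queue that keeps
// the last `depth` samples for each instance, identified by its key.
class KeepLastHistory {
public:
    explicit KeepLastHistory(std::size_t depth) : depth_(depth) {}

    // Adds a sample for the instance `key`. If that instance then holds
    // more than `depth` samples, its oldest sample is dropped, which
    // models a newer sample overwriting an older one.
    void add(const std::string& key, int sample)
    {
        std::deque<int>& samples = queue_[key];
        samples.push_back(sample);
        if (samples.size() > depth_) {
            samples.pop_front();
        }
    }

    // Returns the samples currently kept for `key`, oldest first.
    // An unknown key yields an empty result.
    std::deque<int> samples_for(const std::string& key) const
    {
        auto it = queue_.find(key);
        return it == queue_.end() ? std::deque<int>() : it->second;
    }

private:
    std::size_t depth_;
    std::map<std::string, std::deque<int>> queue_;
};
```

Writing samples 1 through 10 for one flight with a depth of 4 leaves only samples 7 through 10 for that flight, while a second flight keeps its own, separate history of up to four samples.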

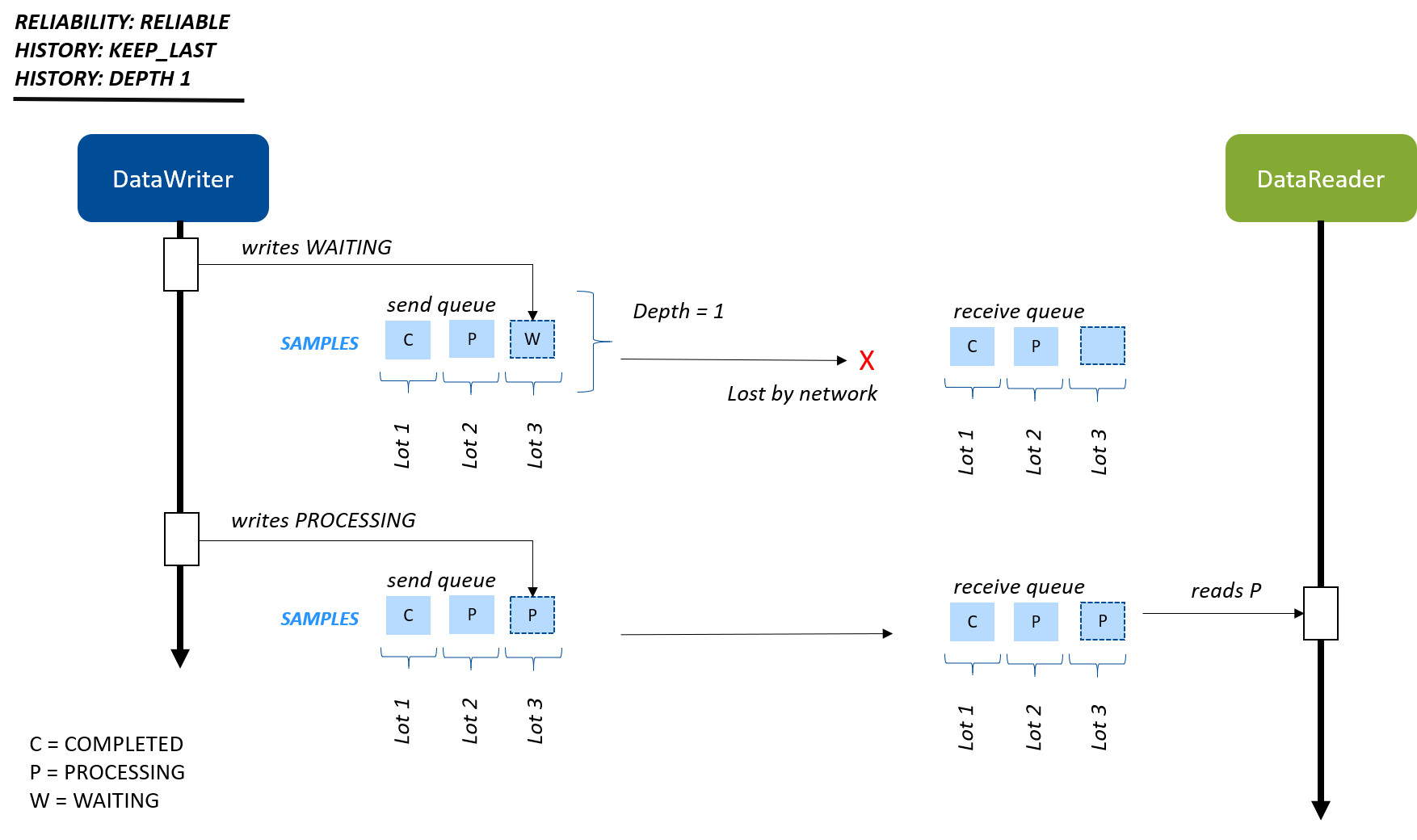

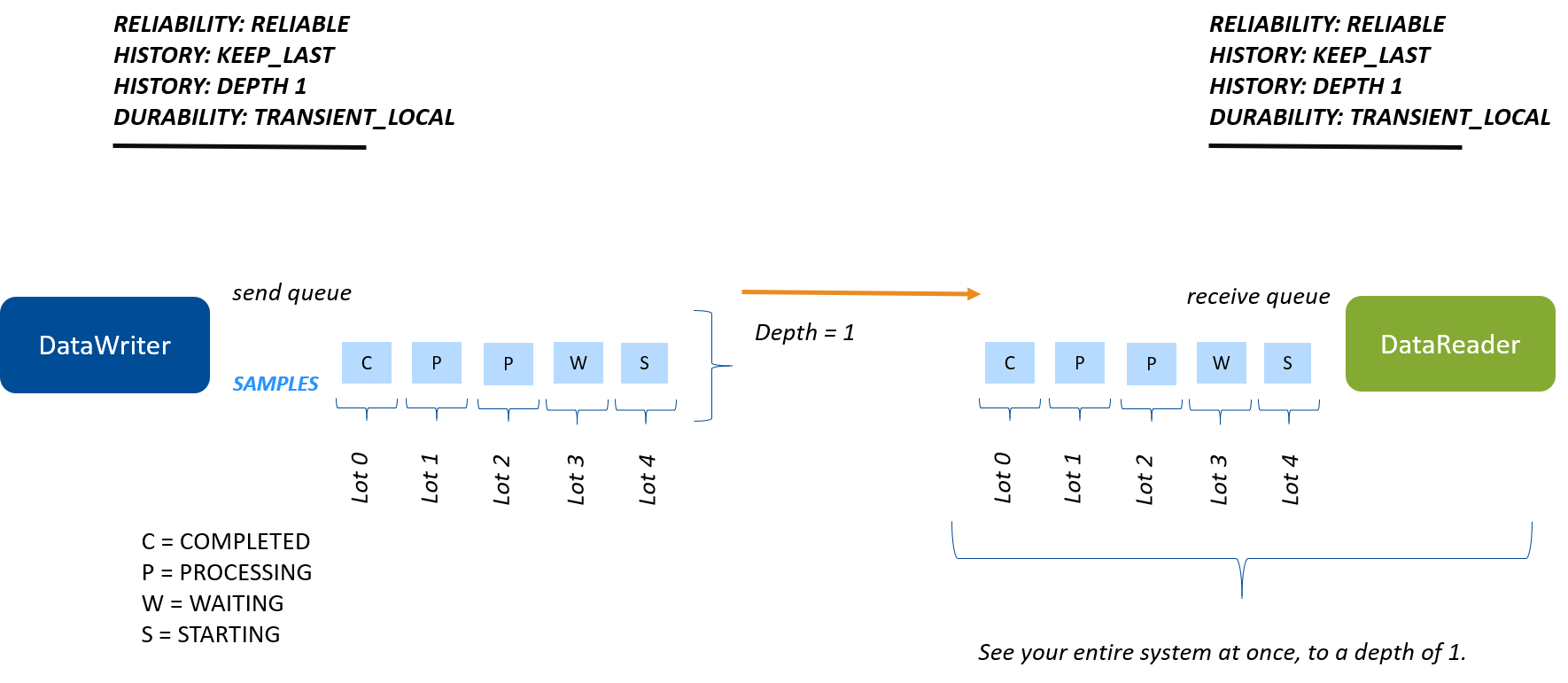

So when would an application use Keep-Last Reliability? As mentioned above, this configuration is the basis for the State Data pattern. One example of State Data is the ChocolateLotState data in the chocolate factory. Recall that the applications in our example update the state of the lot, and the Monitoring/Control application monitors that state.

The typical characteristics of state data are:

- Typically state data does not change rapidly and periodically (otherwise, it would be streaming data).

- Applications monitoring state data would like to reliably receive that data.

- Applications monitoring state data need to know the current state. It is more important to receive the current state than to receive every state update that has ever happened, even if that means missing some state updates.

These requirements of the State Data pattern can be met by setting Reliability kind to RELIABLE, History kind to KEEP_LAST, and Durability kind to TRANSIENT_LOCAL or higher (see Durability QoS Policy).

Figure 5.7 State Data pattern using reliability with a history depth of 1. In this pattern, typically a depth of 1 is used. The DataReader doesn’t need to know all or several previous states, just the most recent state. In this case, the DataReader never receives the WAITING state update for lot 3, because it was lost on the network, and overwritten with the PROCESSING state before the DataWriter could resend it.

Since state data isn’t streaming, there is no guarantee that the next state data update will be sent right away. This means that when the state updates, you want that update to be reliable—up to a point. In Figure 5.7, the DataWriter is updating the ChocolateLotState only when that state changes. In the real world, this may happen infrequently, depending on how long it takes the station to process a lot. If the ChocolateLotState DataWriter was using Best Effort and the DataReader missed an update, the DataWriter would not necessarily send new data right away—so the DataReader might not receive the state of the lot for a long time. With a Reliability kind of RELIABLE and a History depth of 1 (as well as a TRANSIENT_LOCAL or higher Durability QoS Policy), the DataReader has at least the last available state.

We’ll see how a RELIABLE Reliability kind with a History depth of 1, along with TRANSIENT_LOCAL Durability, looks in our final hands-on exercise.

| History (Not RxO) | Value | Definition |
|---|---|---|
| kind | KEEP_LAST | Keep the last depth number of samples per instance in the queue |
| | KEEP_ALL | Keep all samples (subject to Resource Limits that we will discuss next) |
| depth | <integer value> | How many samples to keep per instance if Keep Last is specified |

5.2.1.4. Summary

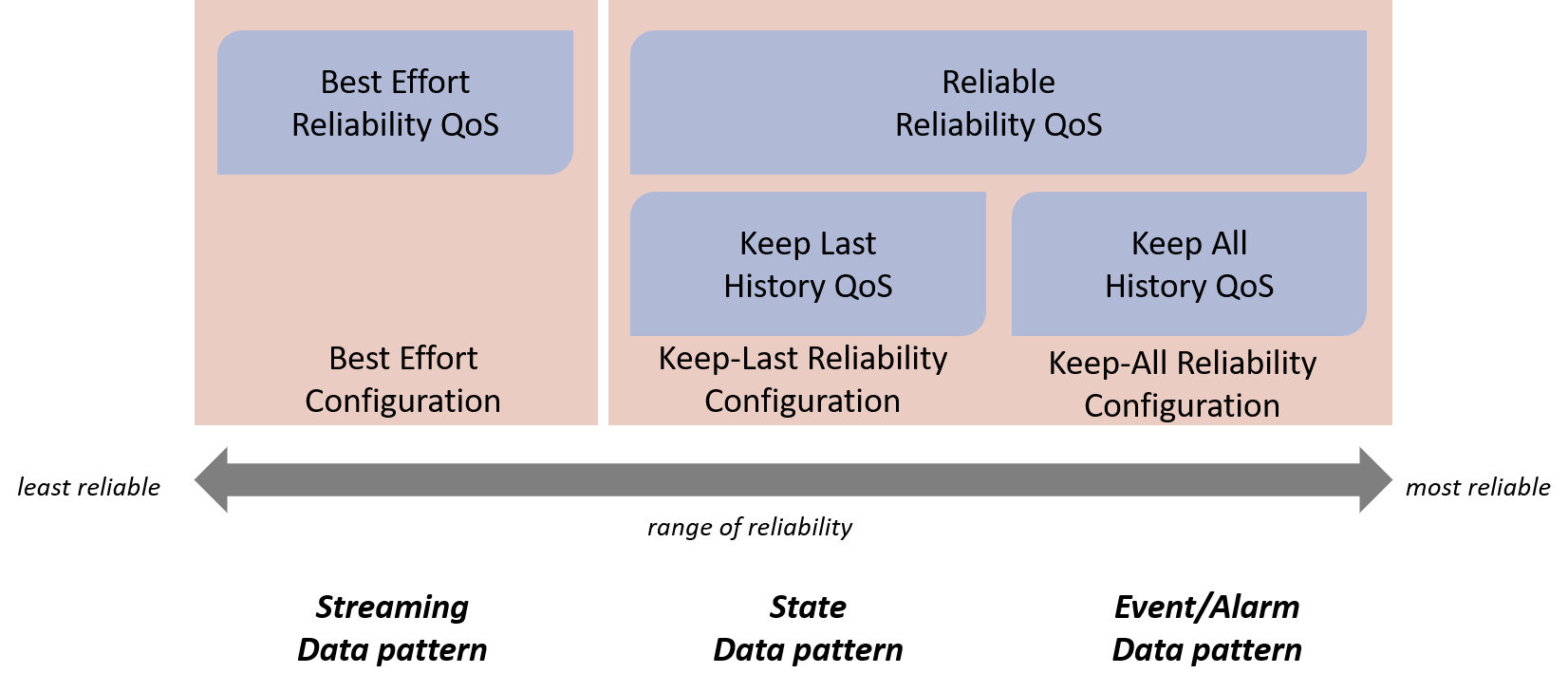

You have a range of reliability options using the Reliability and History QoS policies, for various data patterns:

- Streaming data like “ChocolateTemperature” that does not need reliability at all

- State data like “ChocolateLotState”, where DataReaders generally want to reliably receive the latest state and can accept missing some state updates when the state is changing rapidly

- Event and Alarm data that needs guaranteed delivery of every sample

Figure 5.8 A range of reliability options between Best Effort and Reliable. If a RELIABLE kind is selected, the History QoS Policy comes into play.

5.2.2. Resource Limits QoS Policy

Even in a system where you need strict Keep-All Reliability, there may be a limit to the number of samples that you want to keep in a DataWriter’s or DataReader’s queue at one time, because of memory resource constraints. You may want to set a maximum number of samples allowed in a DataWriter or DataReader queue so that it does not grow indefinitely.

The Resource Limits QoS Policy contains several limits, but the one we will focus on is max_samples. This setting limits the total number of samples in a DataWriter’s or DataReader’s queue across all instances.

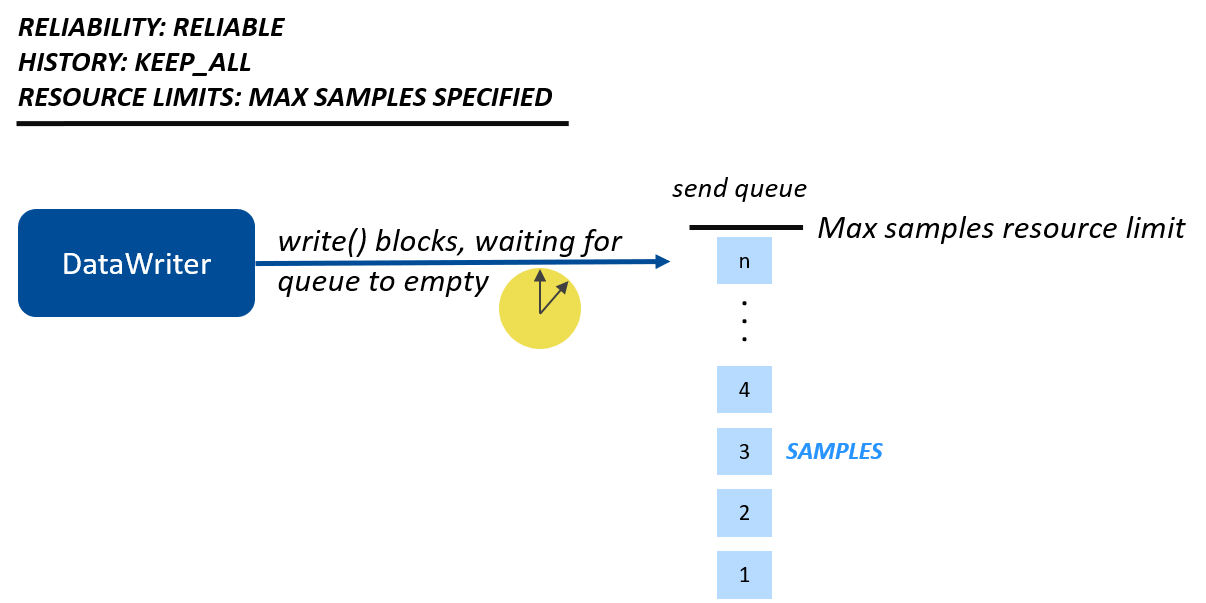

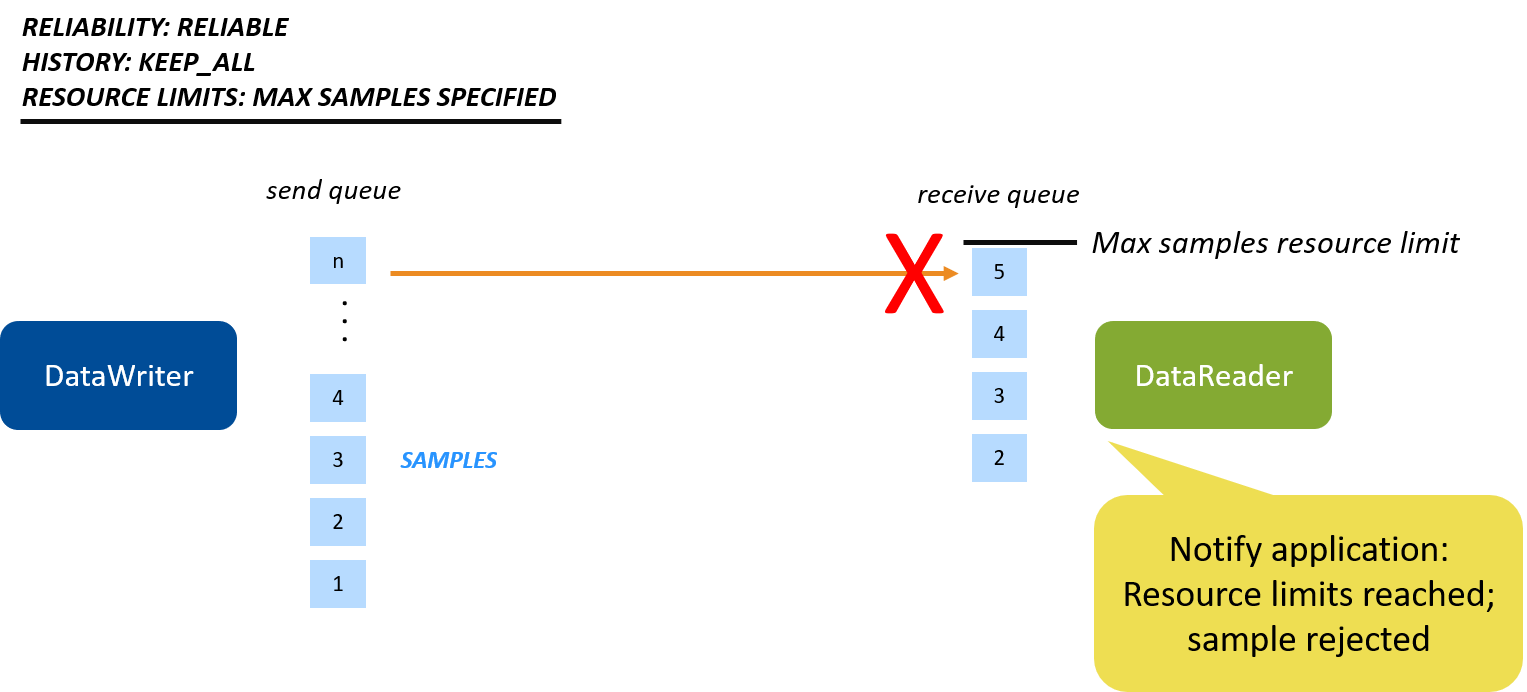

If a DataWriter or DataReader has its History kind set to KEEP_ALL, it is not allowed to overwrite samples in its queue to make room for new samples. So what happens if a DataWriter or DataReader exceeds its max_samples resource limit? The DataWriter and DataReader handle this situation slightly differently.

DataWriters with Keep-All Reliability handle hitting their Resource Limits by blocking the write() call, waiting for an empty slot in the queue as the DataReaders receive the reliable data. DataReaders with Keep-All Reliability handle hitting their Resource Limits by rejecting any samples that arrive when their queue is full, and notifying the application so that the application can call take() to remove samples from the queue.
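The DataReader side of this behavior can be modeled with a bounded queue in standard C++. This is a simplified sketch, not the Connext DDS API: the class `KeepAllReaderQueue` and its member names are hypothetical, samples are plain integers, and the model ignores how the reliability protocol later recovers rejected samples.

```cpp
#include <cstddef>
#include <deque>

// Conceptual model only (not the Connext DDS API): a DataReader queue
// with KEEP_ALL history and a max_samples resource limit.
class KeepAllReaderQueue {
public:
    explicit KeepAllReaderQueue(std::size_t max_samples)
        : max_samples_(max_samples), rejected_count_(0) {}

    // Called when a sample arrives. Returns false (sample rejected)
    // when the queue is full, because KEEP_ALL never overwrites
    // samples that are already queued.
    bool on_sample_received(int sample)
    {
        if (queue_.size() >= max_samples_) {
            ++rejected_count_;
            return false;
        }
        queue_.push_back(sample);
        return true;
    }

    // Models the application calling take(): removes and returns all
    // queued samples, which frees space for new ones.
    std::deque<int> take()
    {
        std::deque<int> taken;
        taken.swap(queue_);
        return taken;
    }

    // Number of samples rejected so far because the queue was full.
    std::size_t rejected_count() const { return rejected_count_; }

private:
    std::size_t max_samples_;
    std::size_t rejected_count_;
    std::deque<int> queue_;
};
```

In this model, a queue with a limit of two samples accepts two samples, rejects the third, and accepts new samples again once the application has taken the queued ones.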

Figure 5.9 DataWriter View: The DataWriter’s write() call will block if it hits its resource limits, and one or more DataReaders have not received all the data. If the write() call times out without being able to send data, write() will return or throw an error. If you’re interested in the details of the reliability protocol and how DataWriters handle non-responsive DataReaders without becoming blocked forever, see Reliable Communications, in the RTI Connext DDS Core Libraries User’s Manual.

Figure 5.10 DataReader view: The DataReader rejects samples if it has reached its resource limits.

| Resource Limits (Not RxO) | Value | Definition |
|---|---|---|
| max_samples | <integer value> | The maximum samples allowed in a DataWriter’s or DataReader’s queue across all instances |
| max_instances | <integer value> | The maximum number of instances a DataWriter can write or a DataReader can read |
| max_samples_per_instance | <integer value> | The maximum number of samples allowed for each instance in a DataWriter’s or DataReader’s queue |

We’ve covered only a few resource limits here. For more information, see RESOURCE_LIMITS QosPolicy, in the RTI Connext DDS Core Libraries User’s Manual.

5.2.3. Durability QoS Policy

The Durability QoS Policy specifies whether data will be delivered to a DataReader that was not known to the DataWriter at the time the data was written (also called a “late-joining” DataReader). Perhaps the DataReader wasn’t there or hadn’t been discovered at the time the samples were written. This QoS policy is used in multiple patterns, including the State Data pattern.

Typically when an application needs state data, it wants to receive the current states as soon as it starts up. The one thing we’ve been missing in our example is that if an application with a DataReader starts up late, right now it doesn’t find out the current state of the chocolate lots in the system. This is especially obvious if you start the Monitoring/Control application before you start the Tempering Station application:

    $ ./tempering_application
    waiting for lot
    Processing lot #3
    waiting for lot
    waiting for lot
    waiting for lot
    Processing lot #4

The Monitoring/Control application has sent lots #0-4 to the Tempering application—but the first thing the Tempering application sees is lot #3! It lost the notifications about all the previous lots. We will fix this in one of the hands-on exercises later in this module, by using the Durability QoS Policy together with the Reliability and History QoS policies. We’ll use a QoS profile that will specify a higher Durability level than the default, so the Tempering application’s DataReader receives ChocolateLotState updates that were written before it started.

The lowest level of durability is “Volatile,” which means that historical data is not sent to any late-joining DataReader. (“Historical” data is any data sent by a DataWriter before it discovers a DataReader.) “Volatile” is the default durability setting. The next level of durability is “Transient Local,” which means that historical data is automatically maintained in the DataWriter’s queue, up to the History depth. Late-joining DataReaders that also use reliability and Transient Local durability are automatically sent historical data when they discover the DataWriter. “Transient Local” is the setting we will use in the hands-on exercise later in this module. This setting will ensure that the Tempering application receives notifications about all previous lots when it starts up late.

The Durability QoS Policy is a Request-Offered policy. For example, if the DataReader requests “Transient Local” durability, but the DataWriter is set to “Volatile” durability, the entities are not compatible and they will not communicate.

| Durability (RxO) | Value (Lowest to Highest) | Definition |
|---|---|---|
| kind | VOLATILE | Do not save or deliver historical DDS samples. (Historical samples are samples that were written before the DataReader was discovered by the DataWriter.) |
| | TRANSIENT_LOCAL | Save and deliver historical DDS samples if the DataWriter still exists. |
| | TRANSIENT_DURABILITY | Save and deliver historical DDS samples using RTI Persistence Service to store samples in volatile memory. |
| | PERSISTENT_DURABILITY | Save and deliver historical DDS samples using RTI Persistence Service to store samples in non-volatile memory. |

As you can see in the table above, Durability has two additional kinds: TRANSIENT and PERSISTENT. These levels of durability allow historical data to be available to late-joining DataReaders even if the original DataWriter is no longer in the system (because it has been shut down, for example). These higher levels of durability require that data is also stored by RTI Persistence Service. These QoS settings are sometimes used as part of the Event and Alarm Data pattern in systems where you need to guarantee delivery of Events even if the DataWriter no longer exists. They might also be used in a State Data pattern where state synchronization is important: for example, a DataWriter changes the state of a system (writes the result of some process) and then ends, and the next application must read that state in order to continue working with it.
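The Volatile and Transient Local behavior described in this section can be sketched as a small standard C++ model. It is illustrative only and not the Connext DDS API: `DurabilityKind`, `durability_compatible`, and `samples_for_late_joiner` are hypothetical names, samples are plain integers, both endpoints are assumed to use RELIABLE reliability, and the TRANSIENT and PERSISTENT kinds are left out.

```cpp
#include <vector>

// Conceptual model only (not the Connext DDS API): the two lowest
// durability kinds, ordered from lowest to highest.
enum class DurabilityKind { Volatile = 0, TransientLocal = 1 };

// Request-Offered rule: the offered durability must be the same as or
// higher than the requested durability.
bool durability_compatible(DurabilityKind offered, DurabilityKind requested)
{
    return static_cast<int>(offered) >= static_cast<int>(requested);
}

// Returns the historical samples a late-joining DataReader would be
// sent when it discovers the DataWriter. `writer_history` stands for
// the samples still in the DataWriter's queue (already limited by the
// History depth). Assumes both endpoints use RELIABLE reliability.
std::vector<int> samples_for_late_joiner(
        DurabilityKind offered,
        DurabilityKind requested,
        const std::vector<int>& writer_history)
{
    if (!durability_compatible(offered, requested)) {
        return {};  // incompatible QoS: the endpoints do not communicate
    }
    if (requested == DurabilityKind::Volatile) {
        return {};  // the reader did not request historical data
    }
    return writer_history;  // both sides use Transient Local
}
```

Only the combination of a Transient Local DataWriter and a Transient Local DataReader returns the writer's history in this model; a Volatile DataReader receives no historical samples, and a Transient Local DataReader paired with a Volatile DataWriter is incompatible.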

5.2.4. Deadline QoS Policy

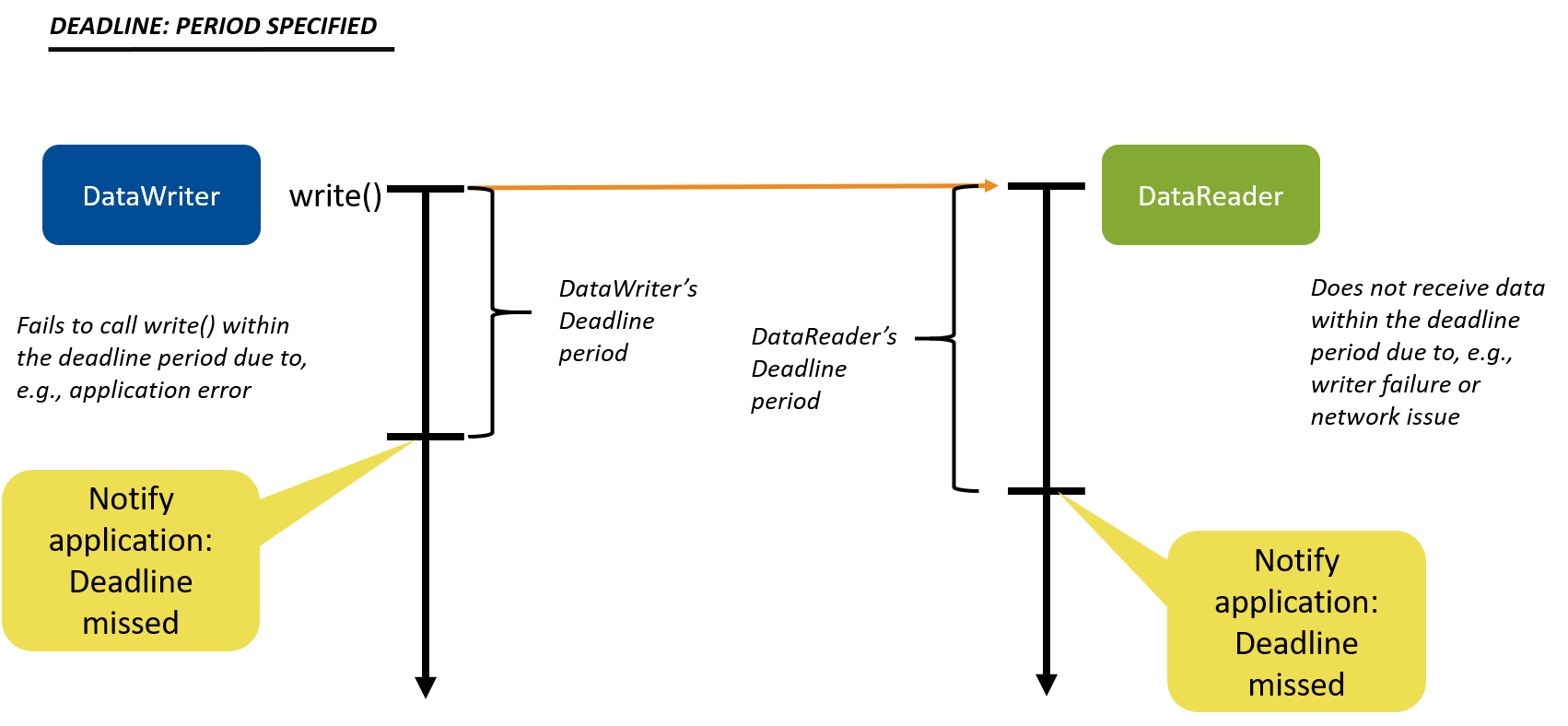

The Deadline QoS Policy is used for periodic and streaming data, and can be configured on both the DataWriter and the DataReader.

When the Deadline QoS Policy is set on a DataReader, it specifies that the DataReader expects to receive data within a particular time period, or else the application should be notified. When the Deadline QoS Policy is set on a DataWriter, it specifies that the DataWriter commits to writing data within a particular time period, or else the application will be notified. For example, if an application hangs instead of writing within the deadline period, Connext DDS will notify that application that it’s not fulfilling the quality of service it offered. We will look at how to receive these notifications in Hands-On 3: Incompatible QoS Notification.

The Deadline QoS Policy is a request-offered QoS: the DataWriter must offer the same or shorter deadline than what the DataReader requests. Typically, the DataWriter is configured with a shorter deadline than the DataReader, due to the possibility of network latency.

Figure 5.11 Deadline QoS Policy: The DataWriter commits to writing data within a particular time period; the DataReader expects to receive data within a particular time period.

| Deadline (RxO) | Value | Definition |
|---|---|---|
| period | deadline time: DataWriter deadline must be <= DataReader request | Time period in which a DataWriter offers to write and a DataReader requests to receive periodic samples |
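The two Deadline checks described above, compatibility between offered and requested periods and detection of a missed deadline, can be written as a short standard C++ sketch. This is a conceptual model, not the Connext DDS API: the function names `deadline_compatible` and `deadline_missed` are hypothetical, and periods are represented with `std::chrono::milliseconds`.

```cpp
#include <chrono>

// Conceptual model only (not the Connext DDS API).
// Request-Offered rule for Deadline: the period offered by the
// DataWriter must be the same as or shorter than the period requested
// by the DataReader.
bool deadline_compatible(
        std::chrono::milliseconds offered_period,
        std::chrono::milliseconds requested_period)
{
    return offered_period <= requested_period;
}

// Returns true when the time elapsed since the last sample exceeds the
// deadline period, which is when the application would expect to be
// notified of a missed deadline.
bool deadline_missed(
        std::chrono::milliseconds since_last_sample,
        std::chrono::milliseconds period)
{
    return since_last_sample > period;
}
```

For example, a DataWriter offering a 2-second deadline is compatible with a DataReader requesting 4 seconds, while a DataWriter offering 10 seconds is not.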

5.2.5. QoS Patterns Review

The design patterns you will use for your data are made up of combinations of QoS settings. Here is a review of the patterns we have covered:

| Pattern | Reliability kind | History kind | History depth | Durability kind | Deadline period |
|---|---|---|---|---|---|
| Streaming (Periodic) Data | BEST_EFFORT | N/A | N/A | VOLATILE | Period in which you expect to send or receive periodic data |
| Event/Alarm Data | RELIABLE | KEEP_ALL | N/A | Various (see below) | N/A |
| State Data | RELIABLE | KEEP_LAST | Number of samples to keep in the queue to be reliably delivered. Typically 1 | TRANSIENT_LOCAL | N/A |

Event and Alarm Data may have various levels of Durability kind, depending on whether your system design requires Events and Alarms to be available to late-joining DataReaders, and whether Events and Alarms must be available even if the original DataWriter is no longer in the system.

The Resource Limits QoS Policy that we have discussed is mostly orthogonal to our design patterns: it is ultimately a limit on the maximum memory for samples and instances that your system should use, and that is part of your overall system design. Typically, you design your data flows first, such as Streaming (Periodic) Data, and set your QoS policies based on that data pattern. Then you refine your QoS settings, overriding individual settings such as resource limits, to make your QoS configuration work for your system.

5.3. QoS Profiles

QoS profiles are groupings of QoS settings defined in an XML file. Connext DDS provides many builtin QoS profiles that you can use as a starting point for your systems. Some of these profiles cover the patterns we have just discussed, such as the “Pattern.Streaming” profile we have used before. The pattern-based profiles start with “Pattern” in the name. There are also many that are made up of basic sets of QoS configurations that you can use to build your own patterns. For example, there are QoS profiles based on data flow characteristics such as “Generic.StrictReliable.HighThroughput” that is configured for high-throughput strictly-reliable data. All of the available Builtin QoS profiles can be viewed in the file <NDDSHOME>/resource/xml/BuiltinProfiles.documentationONLY.xml.

Note

As its name implies, the BuiltinProfiles.documentationONLY.xml file is only for viewing what the builtin profiles contain. You cannot modify the builtin profiles; however, you can create your own profile that inherits from a builtin profile. Then you can overwrite parts of the inherited profile with your own QoS settings.

In Modify for Streaming Data (in Introduction to Data Types), we already changed a QoS profile in the XML to inherit from a builtin QoS profile. In that exercise, though, our code loaded a profile implicitly because is_default_qos was set to true in the XML file. This is dangerous, because the default QoS profile may not have all of the settings you want (and may have some settings you don’t want). In the next hands-on exercises, we’ll review new QoS profiles that inherit from builtin profiles and override some values. We will change the code to load those QoS profiles, allowing our application to specify the profiles it wants to use for individual DomainParticipants, DataWriters, or DataReaders.

Note

Using is_default_qos="true" is not a best practice in production applications. It’s a convenient way to get you started quickly, but in production applications you should explicitly specify which QoS profile to use, instead of relying on a default.

5.4. Hands-On 1: Update One QoS Profile in the Monitoring/Control Application

In this exercise, you are starting with applications in the repository where we have pre-configured only some of the QoS profiles. We will start by configuring some of the correct QoS profiles for the Monitoring/Control application, but we will intentionally leave one DataWriter configured to use the default QoS profile in error. This will allow us to debug incompatible QoS profiles.

We will do the following:

- Hands-On 1:

  - Load the QoS file.

  - Set up a DomainParticipant QoS profile, to better help you debug QoS profiles in Admin Console. (For more information on DomainParticipants, see Publishers, Subscribers, and DomainParticipants.)

  - Set up the correct QoS profiles in the DataReaders, but not the DataWriter.

- Hands-On 2: Debug the incompatible QoS profiles in Admin Console.

- Hands-On 3: Add code to help debug incompatible QoS profiles directly in one of the applications.

- Hands-On 4: Change the Monitoring/Control DataWriter’s QoS profile to the correct QoS profile, so now the DataWriters and DataReaders are compatible.

In this exercise, you’ll be working in the directory 5_basic_qos/c++11, which was created when you cloned the getting started repository from GitHub in the first module, in Clone Repository.

Tip

You don’t need to run rtisetenv_<arch> like you did in the previous modules because the CMake script finds the Connext DDS installation directory and sets up the environment for you.

Run CMake.

Note

You must have CMake version 3.11 or higher.

In the 5_basic_qos/c++11 directory, type the following, depending on your operating system:

Linux and macOS:

    $ mkdir build
    $ cd build
    $ cmake ..

Make sure “cmake” is in your path.

If Connext DDS is not installed in the default location (see Paths Mentioned in Documentation), specify cmake as follows:

    cmake -DCONNEXTDDS_DIR=<installation dir> ..

Windows:

Enter the following commands:

    > mkdir build
    > cd build
    > "c:\program files\CMake\bin\cmake" --help

Your path name may vary. Substitute "c:\program files\CMake\bin\cmake" with the correct path for your CMake installation. --help will list all the compilers you can generate project files for. Choose an installed compiler version, such as “Visual Studio 15 2017”.

From within the build directory, create the build files:

If you have a 64-bit Windows machine, add the option -A x64. For example:

    > "c:\program files\CMake\bin\cmake" -G "Visual Studio 15 2017" -D CONNEXTDDS_ARCH=x64Win64VS2017 -A x64 ..

If you have a 32-bit Windows machine, add the option -A Win32. For example:

    > "c:\program files\CMake\bin\cmake" -G "Visual Studio 15 2017" -D CONNEXTDDS_ARCH=i86Win32VS2017 -A Win32 ..

Note

If you are using Visual Studio 2019, use “2017” in your architecture name.

If Connext DDS is not installed in the default location (see Paths Mentioned in Documentation), add the option -DCONNEXTDDS_DIR=<install dir>. For example:

    > "c:\program files\CMake\bin\cmake" -G "Visual Studio 15 2017" -DCONNEXTDDS_ARCH=x64Win64VS2017 -A x64 -DCONNEXTDDS_DIR=<install dir> ..

Within the application, load the qos_profiles.xml file from the repository by performing the following steps:

Open the monitoring_ctrl_application.cxx file and look for the comment:

    // Exercise #1.1: Add QoS provider

Add the following code after the comment:

    // Exercise #1.1: Add QoS provider
    // Loads the QoS from the qos_profiles.xml file.
    dds::core::QosProvider qos_provider("./qos_profiles.xml");

This code explicitly loads an XML QoS file named qos_profiles.xml that we have provided for you in the c++11 directory, instead of relying on the file with a default name (USER_QOS_PROFILES.xml) as we did in Section 3 (Modify for Streaming Data).

Tip

QoS profile XML files can be modified and loaded without recompiling the application.

Review the QoS profiles in the qos_profiles.xml file.

This XML file contains the QoS profiles that will be used by your applications. The file defines a QoS library, “ChocolateFactoryLibrary,” that contains the QoS profiles we will be using:

<qos_library name="ChocolateFactoryLibrary">

Take a look at the “TemperingApplication” and “MonitoringControlApplication” qos_profiles in the XML file:

    <!-- QoS profile to set the participant name for debugging -->
    <qos_profile name="TemperingApplication" base_name="BuiltinQosLib::Generic.Common">
        <participant_qos>
            <participant_name>
                <name>TemperingAppParticipant</name>
            </participant_name>
        </participant_qos>
    </qos_profile>

    <!-- QoS profile to set the participant name for debugging -->
    <qos_profile name="MonitoringControlApplication" base_name="BuiltinQosLib::Generic.Common">
        <participant_qos>
            <participant_name>
                <name>MonitoringControlParticipant</name>
            </participant_name>
        </participant_qos>
    </qos_profile>

We haven’t talked about setting QoS profiles on the DomainParticipants yet; we will say more about this when we talk about discovery. For now, what’s important is that if you set the participant_name QoS setting, Connext DDS makes that name visible to other applications. Since DomainParticipants own all your DataWriters and DataReaders, giving your DomainParticipants unique names makes it easier to tell your DataWriters and DataReaders apart when you are debugging in Admin Console.

These DomainParticipant profiles are pretty simple. They inherit from the builtin QoS profile “Generic.Common” by specifying that as the base profile:

base_name="BuiltinQosLib::Generic.Common"

Then these QoS profiles override the default DomainParticipant’s name:

    <participant_qos>
        <participant_name>
            <name>MonitoringControlParticipant</name>
        </participant_name>
    </participant_qos>

In the next step, we will change the Monitoring/Control Application’s DomainParticipant to use this QoS profile to help us debug in Admin Console.

Now review the “ChocolateTemperatureProfile” QoS profile, which will be used to specify QoS policies for DataWriters and DataReaders in our applications:

<qos_profile name="ChocolateTemperatureProfile" base_name="BuiltinQosLib::Pattern.Streaming">

This profile inherits from the “Pattern.Streaming” profile, which provides a basic pattern for DataWriter and DataReader QoS profiles for streaming data, including the following:

- Reliability kind = BEST_EFFORT

- Deadline period = 4 seconds

Remember you can review the details of the builtin profiles like “Pattern.Streaming” in <NDDSHOME>/resource/xml/BuiltinProfiles.documentationONLY.xml.

Review the “ChocolateLotStateProfile” QoS profile, which will be used to specify QoS policies for DataWriters and DataReaders in our applications:

<qos_profile name="ChocolateLotStateProfile" base_name="BuiltinQosLib::Pattern.Status">

This profile inherits from the “Pattern.Status” profile, which provides the following DataWriter and DataReader QoS settings:

- Reliability kind = RELIABLE

- Durability kind = TRANSIENT_LOCAL

- History kind = KEEP_LAST

- History depth = 1

This is the State Data pattern described above, in “Reliable” Reliability + “Keep Last” History.

Add the DomainParticipant’s QoS profile in monitoring_ctrl_application.cxx. Look for the comment:

    // Exercise #1.2: Load DomainParticipant QoS profile

Replace this line:

dds::domain::DomainParticipant participant(domain_id);

So the code looks like this:

// Exercise #1.2: Load DomainParticipant QoS profile
dds::domain::DomainParticipant participant(
        domain_id,
        qos_provider.participant_qos(
                "ChocolateFactoryLibrary::MonitoringControlApplication"));

With this code, you have just explicitly loaded the “MonitoringControlApplication” QoS profile, which is part of the ChocolateFactoryLibrary. Remember that this QoS profile inherits from the default DomainParticipant QoS profile, but specifies the participant_name to be “MonitoringControlParticipant” in the QoS file.

Add the DataReaders’ QoS profile in monitoring_ctrl_application.cxx. Look for the comment:

// Exercise #1.3: Update the lot_state_reader and temperature_reader
// to use correct QoS

Replace these lines:

dds::sub::DataReader<ChocolateLotState> lot_state_reader(subscriber, topic);

// Add a DataReader for Temperature to this application
dds::sub::DataReader<Temperature> temperature_reader(
        subscriber,
        temperature_topic);

So the code looks like this:

// Exercise #1.3: Update the lot_state_reader and temperature_reader
// to use correct QoS
dds::sub::DataReader<ChocolateLotState> lot_state_reader(
        subscriber,
        topic,
        qos_provider.datareader_qos(
                "ChocolateFactoryLibrary::ChocolateLotStateProfile"));

// Add a DataReader for Temperature to this application
dds::sub::DataReader<Temperature> temperature_reader(
        subscriber,
        temperature_topic,
        qos_provider.datareader_qos(
                "ChocolateFactoryLibrary::ChocolateTemperatureProfile"));

Now that you have added that code, the DataReaders are each loading the correct QoS profiles. You have not changed the DataWriter, so it is erroneously using the default QoS profile.

Compile and run the two applications (tempering_application and monitoring_ctrl_application):

Compile the code:

If you are building with make, from within the build directory:

$ make

If you are building with Visual Studio, from within the build directory:

Open rticonnextdds-getting-started-qos.sln in Visual Studio by entering rticonnextdds-getting-started-qos.sln at the command prompt or using File > Open Project in Visual Studio.

Right-click ALL_BUILD and choose Build. (See 2_hello_world\<language>\README_<architecture>.txt if you need more help.) Since “Debug” is the default option at the top of Visual Studio, a Debug directory will be created with the compiled files.

Run the Monitoring/Control application:

If you built with make, from within the build directory:

$ ./monitoring_ctrl_application

If you built with Visual Studio, from within the build directory:

> Debug\monitoring_ctrl_application.exe

In another command prompt window, run the Tempering application with a sensor ID as a parameter:

If you built with make, from within the build directory:

$ ./tempering_application -i 9

If you built with Visual Studio, from within the build directory:

> Debug\tempering_application.exe -i 9

Look at the output of the applications:

The Monitoring/Control application starts lots, but we never see lot updates:

Starting lot: [lot_id: 0 next_station: StationKind::TEMPERING_CONTROLLER ]
Starting lot: [lot_id: 1 next_station: StationKind::TEMPERING_CONTROLLER ]

The Tempering application waits for lots, but it never gets any updates about lots:

ChocolateTemperature Sensor with ID: 9 starting
Waiting for lot
Waiting for lot
Waiting for lot
Waiting for lot
Waiting for lot

The DataWriters and DataReaders are not communicating because they have incompatible QoS profiles, which we will debug in the next hands-on exercise.

Keep the applications running for the next hands-on exercise.

5.5. Hands-On 2: Incompatible QoS in Admin Console¶

As we mentioned above, the Tempering application is using all correct QoS settings, and we updated the DataReaders in the Monitoring/Control application. But we intentionally didn’t update the Monitoring/Control application’s DataWriter. We will debug this problem using Admin Console.

Make sure the monitoring_ctrl_application and tempering_application are still running.

Open Admin Console.

Open Admin Console from RTI Launcher.

Choose the Administration view:

(The Administration view might be selected for you already, since we used that view in the previous module.)

You should immediately notice that there is a red error showing on your “ChocolateLotState” Topic.

If you hover over the ChocolateLotState Topic, you will see an error: “Request/offered QoS.”

Click on the “ChocolateLotState” Topic in the DDS Logical View, and you should see a diagram showing the DataWriters and DataReaders that are writing and reading the Topic.

We’ve organized this view so that the DataWriter and DataReader from the Monitoring/Control application are on the bottom. (You can click and drag the icons to organize your view the same way.) It’s easy to see which application the DataWriters and DataReaders belong to, because you’ve set the participant_name QoS setting, which allows you to see the names of the DomainParticipants (TemperingAppParticipant and MonitoringControlParticipant) that own the DataWriters and DataReaders in this system.

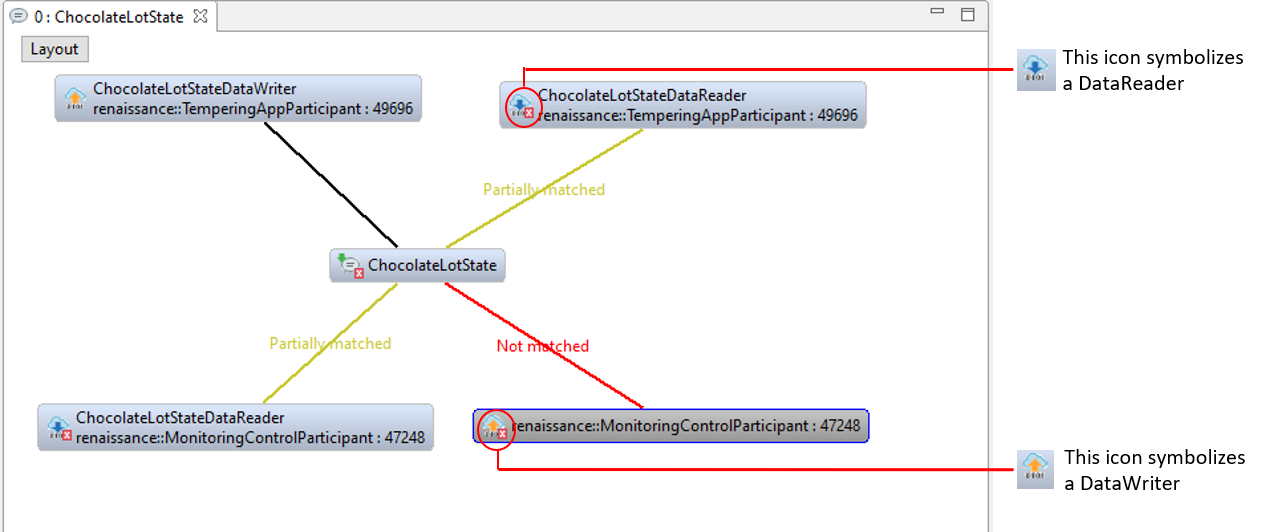

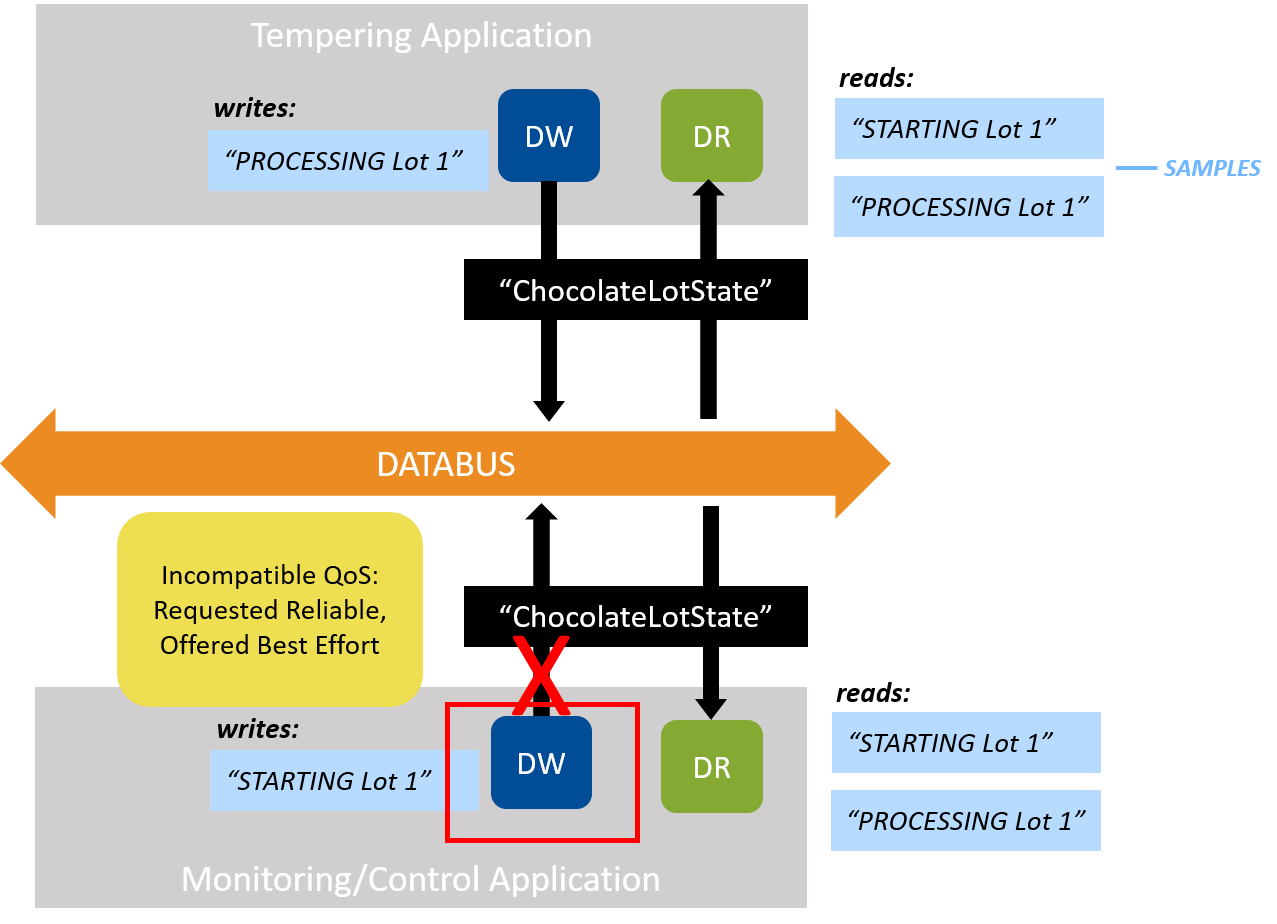

Notice in the above view that some DataWriters and DataReaders are matching, while others are not—this is because there is one DataWriter that is configured correctly and one that is misconfigured. Remember that the “ChocolateLotState” DataWriters and DataReaders look like Figure 5.15 in our system; the Monitoring/Control application’s DataWriter is not configured correctly:

Figure 5.15 Two DataWriters and two DataReaders should be communicating in our example system, when configured correctly. But the DataWriter QoS policy in the Monitoring/Control application is not configured correctly. (See also Figure 4.5.)

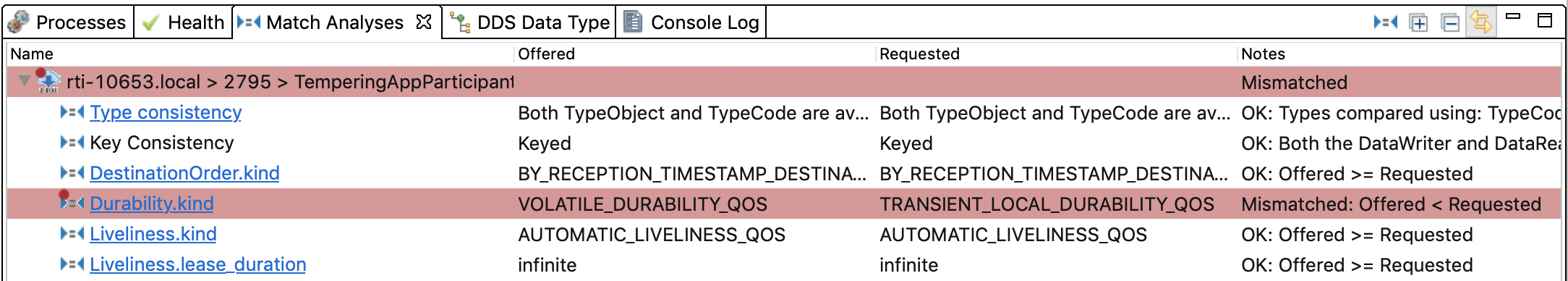

Click on the DataWriter for the Monitoring/Control application that says it is “not matched” in Admin Console.

At the bottom of Admin Console’s screen, click on the Match Analyses view:

Notice in the Offered and Requested columns that the misconfigured DataWriter is offering Volatile durability (meaning that it will not save data for late-joining DataReaders). However, the two DataReaders are requesting Transient Local durability (meaning that they need data saved for when they join late—this is the correct setting, since it follows the State Data pattern). The DataReader is requesting a higher level of QoS setting (“I need data saved”) than the DataWriter is offering (“I’m not saving data”). This is a system error, caused by incompatible QoS policies. Scroll down in this view to see the second DataReader that is mismatched with the DataWriter.

Tip

Any time a DataReader requests a higher level of service than the DataWriter offers, Connext DDS reports it as a system error.

Looking back at the “ChocolateLotState” view, we see that the “ChocolateLotState” DataReaders in the system are partially matched. This is because the DataReaders match with the DataWriter (in the Tempering application) that is configured correctly.

5.6. Hands-On 3: Incompatible QoS Notification¶

Both your DataWriters and DataReaders can be notified in your own applications when they discover a DataReader or DataWriter with incompatible QoS settings. In the 5_basic_qos directory, we have added a StatusCondition to the Tempering application’s DataReader to detect incompatible QoS policies. (This is a change to the Tempering application from the version used in the previous module, in 4_keys_instances.) In the steps below, you will review this additional code and add new code that prints out the error.

Quit both of your applications if you haven’t already.

Open the tempering_application.cxx file.

Review the code. Notice that we have already added code to detect when the DataReader discovers a DataWriter with an incompatible QoS policy. When this happens, it calls the function on_requested_incompatible_qos:

// Enable the 'data available' and 'requested incompatible qos' statuses
reader_status_condition.enabled_statuses(
        dds::core::status::StatusMask::data_available()
        | dds::core::status::StatusMask::requested_incompatible_qos());

// Associate a handler with the status condition. This will run when the
// condition is triggered, in the context of the dispatch call (see below)
reader_status_condition.extensions().handler(
        [&lot_state_reader, &lot_state_writer]() {
            if ((lot_state_reader.status_changes()
                 & dds::core::status::StatusMask::data_available())
                != dds::core::status::StatusMask::none()) {
                process_lot(lot_state_reader, lot_state_writer);
            }
            if ((lot_state_reader.status_changes()
                 & dds::core::status::StatusMask::requested_incompatible_qos())
                != dds::core::status::StatusMask::none()) {
                on_requested_incompatible_qos(lot_state_reader);
            }
        });

// Create a WaitSet and attach the StatusCondition
dds::core::cond::WaitSet waitset;
waitset += reader_status_condition;

Find the on_requested_incompatible_qos function, and add code after the comment:

// Exercise #3.1 add a message to print when this DataReader discovers an
// incompatible DataWriter
std::cout << "Discovered DataWriter with incompatible policy: ";
if (incompatible_policy == policy_id<Reliability>::value) {
    std::cout << "Reliability";
} else if (incompatible_policy == policy_id<Durability>::value) {
    std::cout << "Durability";
}
std::cout << std::endl;

The code you just added will print an error message when the DataReader discovers an incompatible DataWriter.

Rebuild and run both applications.

When you run the Tempering application, you will now see notifications when it discovers DataWriters with incompatible QoS policies:

ChocolateTemperature Sensor with ID: 33 starting
waiting for lot
Discovered DataWriter with incompatible policy: Durability
waiting for lot
waiting for lot

(Connext DDS prints the error only the first time it notices the incompatibility.)

Quit both applications.

5.7. Hands-On 4: Using Correct QoS Profile¶

Finally, let’s go back to the monitoring_ctrl_application.cxx file. This is where you added the QoS profile for the DomainParticipant and DataReaders earlier. Now you will be modifying the DataWriter’s QoS profile to use the correct values.

Edit monitoring_ctrl_application.cxx. Find the comment:

// Exercise #4.1: Load ChocolateLotState DataWriter QoS profile after
// debugging incompatible QoS

Replace this line:

dds::pub::DataWriter<ChocolateLotState> lot_state_writer(publisher, topic);

So the code looks like this:

// Exercise #4.1: Load ChocolateLotState DataWriter QoS profile after
// debugging incompatible QoS
dds::pub::DataWriter<ChocolateLotState> lot_state_writer(
        publisher,
        topic,
        qos_provider.datawriter_qos(
                "ChocolateFactoryLibrary::ChocolateLotStateProfile"));

Now you have configured the “ChocolateLotState” DataWriter to use the “ChocolateLotStateProfile” QoS profile.

Compile your applications and rerun. Now you see that they are communicating correctly, and the Tempering application always starts processing lot #0, even if the Tempering application started after the Monitoring/Control application. This is because the Tempering application is using the State Data pattern (Reliability kind = RELIABLE, History kind = KEEP_LAST, History depth = 1, Durability kind = TRANSIENT_LOCAL) for its QoS profile.

Recall that the Tempering application processes the lot and updates the status of each lot it receives.

ChocolateTemperature Sensor with ID: 35 starting
waiting for lot
Processing lot #0
waiting for lot
Processing lot #1
waiting for lot
waiting for lot
Processing lot #2
waiting for lot

Recall that the Monitoring/Control application starts each lot at the TEMPERING CONTROLLER. The TEMPERING CONTROLLER then updates the lot state to indicate it is WAITING, PROCESSING, or completed. (The “current station” is invalid before the tempering controller starts processing the lot, because the Tempering application is the first station, so there is no station before that.)

Starting lot: [lot_id: 0 next_station: StationKind::TEMPERING_CONTROLLER ]
Received Lot Update: [lot_id: 0, station: StationKind::INVALID_CONTROLLER , next_station: StationKind::TEMPERING_CONTROLLER , lot_status: LotStatusKind::WAITING ]
Received Lot Update: [lot_id: 0, station: StationKind::TEMPERING_CONTROLLER , next_station: StationKind::INVALID_CONTROLLER , lot_status: LotStatusKind::PROCESSING ]
Received Lot Update: [lot_id: 0 is completed]
Starting lot: [lot_id: 1 next_station: StationKind::TEMPERING_CONTROLLER ]
Received Lot Update: [lot_id: 1, station: StationKind::INVALID_CONTROLLER , next_station: StationKind::TEMPERING_CONTROLLER , lot_status: LotStatusKind::WAITING ]
Received Lot Update: [lot_id: 1, station: StationKind::TEMPERING_CONTROLLER , next_station: StationKind::INVALID_CONTROLLER , lot_status: LotStatusKind::PROCESSING ]
Received Lot Update: [lot_id: 1 is completed]

If you haven’t already, start the Monitoring/Control application before the Tempering application and see that the Tempering application processes all of the lots, starting with 0, even though the Tempering application in this scenario is a late joiner.

Upon startup, the Tempering application gets a current view of your entire system. This is because all of the QoS policies relevant to the State Data pattern are working together: since Reliability kind is RELIABLE and Durability kind is TRANSIENT_LOCAL, the Tempering application sees all the ChocolateLotState instances even if its DataReader is a late-joiner; since History depth is 1, it sees one sample from each instance. Contrast this with our discussion in Durability QoS Policy, where the Monitoring/Control application sent lots #0-4, but the Tempering application saw only lot #3 at the start. This was because we hadn’t set Durability yet.

Figure 5.17 Reliability, History, and Durability QoS policies work together so that the late-joining DataReader sees one sample per instance.

5.8. Next Steps¶

Congratulations! You have learned about several of the basic Quality of Service (QoS) policies offered by Connext DDS. We only scratched the surface of the configuration options you have available, so for further information about QoS policies, you should look at the following documents:

- QoS Reference Guide

- DataWriter QoS, in the RTI Connext DDS Core Libraries User’s Manual

- DataReader QoS, in the RTI Connext DDS Core Libraries User’s Manual

- Configuring QoS with XML, in the RTI Connext DDS Core Libraries User’s Manual

In an upcoming module, we will be looking at filtering data using ContentFilteredTopics.