2.1.2. Discovery Performance

This section provides the results of testing Simple Discovery in RTI Connext 6.1 at various scales of applications, DomainParticipants, and endpoints (DataWriters and DataReaders).

These numbers should be used only as a first, rough approximation, because the results depend heavily on the hardware, software architecture, QoS settings, and network infrastructure of the tested system. The numbers were measured with multicast enabled, which is the default discovery mode in Connext.

2.1.2.1. Time to Complete Endpoint Discovery

The following graph shows the time needed to complete endpoint discovery for different numbers of DomainParticipants and endpoints. Detailed information about the tests is provided below.

Detailed test input

The complete discovery process has two phases. In the first, the DomainParticipant(s) exchange messages to discover each other; in the second, the DataWriters and DataReaders (endpoints) within those participants discover each other.

The main goal of this test is to determine how DDS discovery behaves at various scales, with increasing numbers of DomainParticipants and of endpoints within those DomainParticipants.

Note

In these tests, all Test Applications were set to launch at the same time, which places a great deal of stress on the discovery system. Allowing user data to flow during discovery would add further stress, but the tests were not configured to do so.

The input parameters for these tests were chosen to cover a set of realistic scenarios:

Number of hosts: 7

Participants in the system: [100, 200, 300, 400]

Topics in the system: [0.5, 0.65, 0.80, 0.95, 1.10] * Number of Participants in the system

Readers per topic: 10

Writers per topic: 2
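The two discovery phases described above can be sized roughly from these parameters. The following Python sketch is a back-of-the-envelope model, not RTI's test framework: it assumes that every DomainParticipant discovers every other DomainParticipant (as happens in Simple Discovery with multicast) and that each DataWriter matches every DataReader of the same topic, which presumes compatible QoS. The helper name `discovery_workload` is hypothetical.

```python
def discovery_workload(participants: int, topics: int,
                       readers_per_topic: int = 10,
                       writers_per_topic: int = 2) -> dict:
    """Rough size of the two discovery phases (hypothetical helper)."""
    # Phase 1: each DomainParticipant discovers every other one,
    # giving N * (N - 1) directed participant discoveries.
    participant_discoveries = participants * (participants - 1)
    # Phase 2 (assuming compatible QoS): every DataWriter on a topic
    # matches every DataReader on that same topic.
    endpoint_matches = topics * writers_per_topic * readers_per_topic
    return {
        "participant_discoveries": participant_discoveries,
        "endpoint_matches": endpoint_matches,
    }


if __name__ == "__main__":
    # Largest tested scenario: 400 participants and 441 topics.
    print(discovery_workload(400, 441))
    # {'participant_discoveries': 159600, 'endpoint_matches': 8820}
```

The first phase grows quadratically with the number of participants, while the number of matches grows linearly with the number of topics for a fixed number of readers and writers per topic.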

Given these input parameters, the number of topics in each test is shown below. In the first row, the number of topics is roughly half the number of participants, and so on.

TOPICS

| Participants | 100 | 200 | 300 | 400 |
|---|---|---|---|---|
| Topics = 50% of the Participant Number | 50 | 101 | 151 | 201 |
| Topics = 65% of the Participant Number | 65 | 130 | 195 | 261 |
| Topics = 80% of the Participant Number | 80 | 160 | 241 | 321 |
| Topics = 95% of the Participant Number | 95 | 191 | 285 | 381 |
| Topics = 110% of the Participant Number | 111 | 222 | 331 | 441 |

As a result, the number of endpoints in each tested scenario is the number of topics multiplied by 12 (10 DataReaders plus 2 DataWriters per topic):

ENDPOINTS

| Participants | 100 | 200 | 300 | 400 |
|---|---|---|---|---|
| Topics = 50% of the Participant Number | 600 | 1212 | 1812 | 2412 |
| Topics = 65% of the Participant Number | 780 | 1560 | 2340 | 3132 |
| Topics = 80% of the Participant Number | 960 | 1920 | 2892 | 3852 |
| Topics = 95% of the Participant Number | 1140 | 2292 | 3420 | 4572 |
| Topics = 110% of the Participant Number | 1332 | 2664 | 3972 | 5292 |
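The endpoint counts follow directly from the topic counts. As a quick consistency check, this Python sketch recomputes every cell of the endpoints table from the topics table using endpoints = topics × (readers per topic + writers per topic). The dictionaries simply transcribe the two tables (one list per participant count, ordered from the 50% row to the 110% row); the function names are illustrative.

```python
# Topic counts transcribed from the TOPICS table, keyed by participant count.
TOPICS = {
    100: [50, 65, 80, 95, 111],
    200: [101, 130, 160, 191, 222],
    300: [151, 195, 241, 285, 331],
    400: [201, 261, 321, 381, 441],
}

# Endpoint counts transcribed from the ENDPOINTS table.
ENDPOINTS = {
    100: [600, 780, 960, 1140, 1332],
    200: [1212, 1560, 1920, 2292, 2664],
    300: [1812, 2340, 2892, 3420, 3972],
    400: [2412, 3132, 3852, 4572, 5292],
}

READERS_PER_TOPIC = 10
WRITERS_PER_TOPIC = 2


def endpoints_for(topics: int) -> int:
    # Every topic contributes its DataReaders plus its DataWriters.
    return topics * (READERS_PER_TOPIC + WRITERS_PER_TOPIC)


def check_table() -> bool:
    # True if every endpoint cell equals topics * 12 for the same cell.
    return all(
        [endpoints_for(t) for t in TOPICS[n]] == ENDPOINTS[n]
        for n in TOPICS
    )


if __name__ == "__main__":
    print(check_table())  # True
```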

Detailed results

TIME FOR COMPLETE ENDPOINT DISCOVERY (Seconds)

| Participants | 100 | 200 | 300 | 400 |
|---|---|---|---|---|
| Topics = 50% of the Participant Number | 3.9480 | 9.4990 | 19.4370 | 25.7350 |
| Topics = 65% of the Participant Number | 4.2950 | 9.5960 | 19.7360 | 27.0660 |
| Topics = 80% of the Participant Number | 4.5540 | 12.2370 | 20.4340 | 27.7330 |
| Topics = 95% of the Participant Number | 4.9400 | 12.2360 | 21.8360 | 29.4960 |
| Topics = 110% of the Participant Number | 7.4570 | 15.2120 | 21.9560 | 30.7930 |

Software information

RTI developed a testing framework specifically for discovery benchmarking, and this framework was used to perform the tests detailed in this document. It can run different application loads and commands simultaneously on all hosts in the experiment, collect all of the results, and monitor the hosts' resource usage.

The framework is composed of four main applications: the Manager, the Monitor, the Agent, and the Test Application.

The Manager is the first process to run. It launches and coordinates the Agents on each test host, and each Agent launches as many Test Applications as the Manager requests. The Manager also instructs the Agents to start the tests and to shut down.

The Monitor visualizes memory, CPU, and discovery data from the Agents and Test Applications. It also flags communication problems (hosts not connected, missed deadlines, etc.).
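To illustrate the coordination pattern described above, here is a toy Python model of a Manager that deploys Test Applications through one Agent per host. Everything in it is hypothetical: the class and method names are invented, Test Applications are simulated as strings, the even split of applications across hosts is an assumption (the document does not say how the load is distributed), and the usage example assumes one DomainParticipant per Test Application. The Monitor is not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """Hypothetical stand-in for the Agent process on one test host."""
    host: str
    apps: list[str] = field(default_factory=list)
    running: bool = False

    def launch(self, count: int) -> None:
        # Launch as many (simulated) Test Applications as the Manager requests.
        start = len(self.apps)
        self.apps.extend(f"{self.host}/app{i}" for i in range(start, start + count))

    def start_test(self) -> None:
        self.running = True

    def shutdown(self) -> None:
        self.running = False
        self.apps.clear()


class Manager:
    """Hypothetical Manager that launches and coordinates one Agent per host."""

    def __init__(self, hosts: list[str]) -> None:
        self.agents = [Agent(h) for h in hosts]

    def deploy(self, total_apps: int) -> None:
        # Assumed policy: spread Test Applications as evenly as possible,
        # giving the first hosts one extra application when it does not divide.
        base, extra = divmod(total_apps, len(self.agents))
        for i, agent in enumerate(self.agents):
            agent.launch(base + (1 if i < extra else 0))

    def start_all(self) -> None:
        # Start the test on every host at once, as in the tests described here.
        for agent in self.agents:
            agent.start_test()

    def shutdown_all(self) -> None:
        for agent in self.agents:
            agent.shutdown()


if __name__ == "__main__":
    manager = Manager([f"host{i}" for i in range(1, 8)])  # seven test hosts
    manager.deploy(400)  # assumes one DomainParticipant per Test Application
    manager.start_all()
    print([len(a.apps) for a in manager.agents])  # [58, 57, 57, 57, 57, 57, 57]
    manager.shutdown_all()
```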

The test software was compiled and linked against RTI Connext 6.1.1, using the libraries for the x64Linux3gcc4.8.2 architecture.

Hardware information

Eight hosts were used to run the tests: seven to run the discovery applications and one to monitor and log the output information.

All these hosts have the following characteristics:

CPU: Intel Core Xeon (12 cores), 3.6 GHz

Memory (RAM): 12 GB

OS: CentOS 7

All the hosts were connected to the same Gigabit Ethernet switch.

The following Linux kernel parameters were tuned to improve the hosts' network and memory capabilities:

```
net.core.rmem_default=65536
net.core.rmem_max=10485760
net.core.wmem_default=65536
net.core.wmem_max=10485760
net.ipv4.ipfrag_high_thresh=8388608
net.core.netdev_max_backlog=30000
```

More information about this tuning and how it improves Connext performance is available on RTI Community.
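Before reproducing these tests, it can help to confirm that a Linux host already has this tuning applied. The following Python sketch reads each value from `/proc/sys` (a sysctl key maps to a file there, with dots replaced by slashes) and reports strict mismatches, so a host with larger values is also reported. It also checks that the 1048576-byte receive buffer requested in the QoS profile (`dds.transport.UDPv4.builtin.recv_socket_buffer_size`) does not exceed `net.core.rmem_max`, since Linux caps unprivileged `SO_RCVBUF` requests at that limit. The function names are hypothetical, and the script is Linux-only.

```python
from pathlib import Path

# Values from the tuning list above.
TUNING = {
    "net.core.rmem_default": 65536,
    "net.core.rmem_max": 10485760,
    "net.core.wmem_default": 65536,
    "net.core.wmem_max": 10485760,
    "net.ipv4.ipfrag_high_thresh": 8388608,
    "net.core.netdev_max_backlog": 30000,
}

# Receive socket buffer size requested in the QoS profile.
RECV_SOCKET_BUFFER_SIZE = 1048576


def sysctl_path(key: str) -> Path:
    # sysctl keys map to files under /proc/sys, with dots replaced by slashes.
    return Path("/proc/sys") / key.replace(".", "/")


def read_sysctl(key: str) -> int:
    return int(sysctl_path(key).read_text().split()[0])


def check_tuning(read=read_sysctl) -> dict:
    """Return {key: (current, expected)} for every value that differs."""
    mismatches = {}
    for key, expected in TUNING.items():
        current = read(key)
        if current != expected:
            mismatches[key] = (current, expected)
    return mismatches


def recv_buffer_fits(rmem_max: int) -> bool:
    # Linux silently caps unprivileged SO_RCVBUF requests at net.core.rmem_max.
    return RECV_SOCKET_BUFFER_SIZE <= rmem_max


if __name__ == "__main__":
    try:
        print(check_tuning() or "all tuning values applied")
        print("receive buffer fits:", recv_buffer_fits(read_sysctl("net.core.rmem_max")))
    except OSError as err:
        print(f"could not read /proc/sys (Linux only): {err}")
```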

QoS used

The Test Applications and Agents used the following QoS settings. These settings restrict the built-in transports to UDPv4, avoid sending the type code and TypeObject during discovery (their maximum serialized lengths are set to 0), relax the participant liveliness settings (a 200-second lease duration, asserted every 10 seconds), and increase the UDPv4 receive socket buffer size to 1048576 bytes (1 MiB).

```xml
<dds>
  <qos_library name="DiscoveryLibrary">
    <qos_profile name="BaseDiscoveryProfile">
      <participant_qos name="BaseParticipantQos">
        <transport_builtin>
          <mask>UDPv4</mask>
        </transport_builtin>
        <resource_limits>
          <type_code_max_serialized_length>0</type_code_max_serialized_length>
          <type_object_max_serialized_length>0</type_object_max_serialized_length>
        </resource_limits>
        <discovery_config>
          <participant_liveliness_lease_duration>
            <sec>200</sec>
            <nanosec>0</nanosec>
          </participant_liveliness_lease_duration>
          <participant_liveliness_assert_period>
            <sec>10</sec>
            <nanosec>0</nanosec>
          </participant_liveliness_assert_period>
          <remote_participant_purge_kind>LIVELINESS_BASED_REMOTE_PARTICIPANT_PURGE</remote_participant_purge_kind>
          <max_liveliness_loss_detection_period>
            <sec>10</sec>
            <nanosec>0</nanosec>
          </max_liveliness_loss_detection_period>
        </discovery_config>
        <property>
          <value>
            <element>
              <name>dds.transport.UDPv4.builtin.recv_socket_buffer_size</name>
              <value>1048576</value>
            </element>
          </value>
        </property>
      </participant_qos>
    </qos_profile>
  </qos_library>
</dds>
```
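To make the intent of this profile easy to verify, the following Python sketch parses an abbreviated copy of it with the standard-library `xml.etree.ElementTree` module and extracts the values discussed above. It only reads the XML; it does not load the profile into Connext. The helper names `seconds` and `summarize` are hypothetical.

```python
import xml.etree.ElementTree as ET

# Abbreviated copy of the profile above (only the elements read below).
PROFILE_XML = """
<dds>
  <qos_library name="DiscoveryLibrary">
    <qos_profile name="BaseDiscoveryProfile">
      <participant_qos name="BaseParticipantQos">
        <resource_limits>
          <type_code_max_serialized_length>0</type_code_max_serialized_length>
          <type_object_max_serialized_length>0</type_object_max_serialized_length>
        </resource_limits>
        <discovery_config>
          <participant_liveliness_lease_duration>
            <sec>200</sec><nanosec>0</nanosec>
          </participant_liveliness_lease_duration>
          <participant_liveliness_assert_period>
            <sec>10</sec><nanosec>0</nanosec>
          </participant_liveliness_assert_period>
        </discovery_config>
        <property>
          <value>
            <element>
              <name>dds.transport.UDPv4.builtin.recv_socket_buffer_size</name>
              <value>1048576</value>
            </element>
          </value>
        </property>
      </participant_qos>
    </qos_profile>
  </qos_library>
</dds>
"""


def seconds(node: ET.Element) -> float:
    # A duration element holds <sec> and <nanosec> children.
    return int(node.findtext("sec")) + int(node.findtext("nanosec")) / 1e9


def summarize(xml_text: str) -> dict:
    qos = ET.fromstring(xml_text.strip()).find(".//participant_qos")
    limits = qos.find("resource_limits")
    disc = qos.find("discovery_config")
    # Collect <property> name/value pairs into a dictionary.
    props = {e.findtext("name"): e.findtext("value")
             for e in qos.findall("./property/value/element")}
    return {
        "type_code_len": int(limits.findtext("type_code_max_serialized_length")),
        "type_object_len": int(limits.findtext("type_object_max_serialized_length")),
        "lease_s": seconds(disc.find("participant_liveliness_lease_duration")),
        "assert_s": seconds(disc.find("participant_liveliness_assert_period")),
        "recv_buf": int(props["dds.transport.UDPv4.builtin.recv_socket_buffer_size"]),
    }


if __name__ == "__main__":
    print(summarize(PROFILE_XML))
```

With these values, a participant asserts its liveliness 20 times (200 s / 10 s) within one lease duration.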

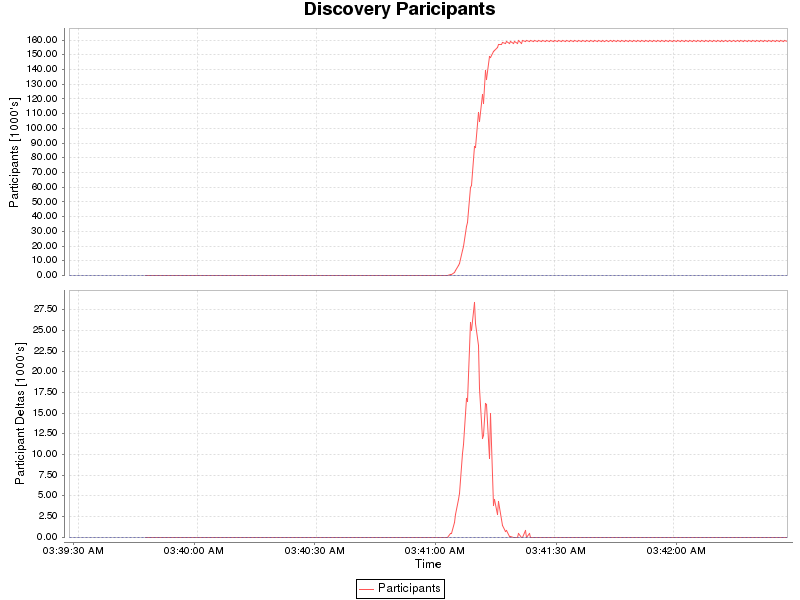

2.1.2.2. Discovery Process over Time

The Monitor application can provide more detailed information for a given scenario, which helps explain how the discovery process unfolds in a distributed system.

The following graphs show several parameters measured by the Monitor for one specific scenario.

Detailed test input

Number of hosts: 7

Participants in the system: 400

Topics in the system: 1.10 * Participants in the system (441 topics)

Readers per topic: 10

Writers per topic: 2

Total endpoints: 5292

The software, hardware, and QoS configuration used for this scenario are the same as those described for the endpoint discovery tests in the previous section.


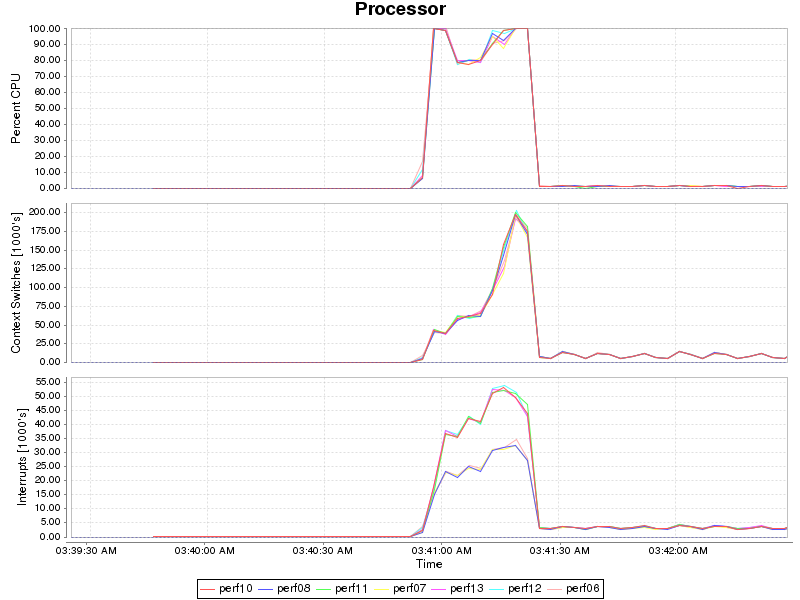

These resource utilization graphs show the overall CPU demand normalized to 100% (top), context switches (middle), and interrupts (bottom). The CPU traces clearly show that demand for processor time is extremely high during the discovery phase of this system on these (relatively few) hosts.

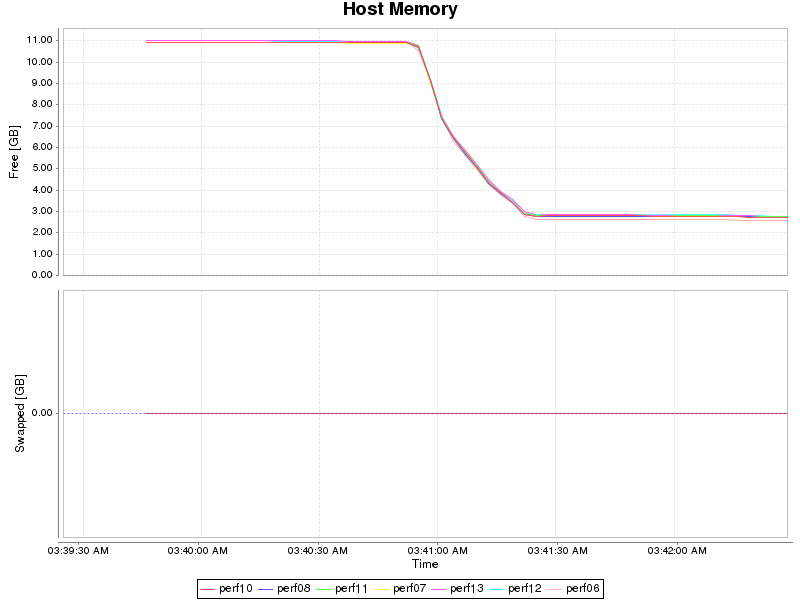



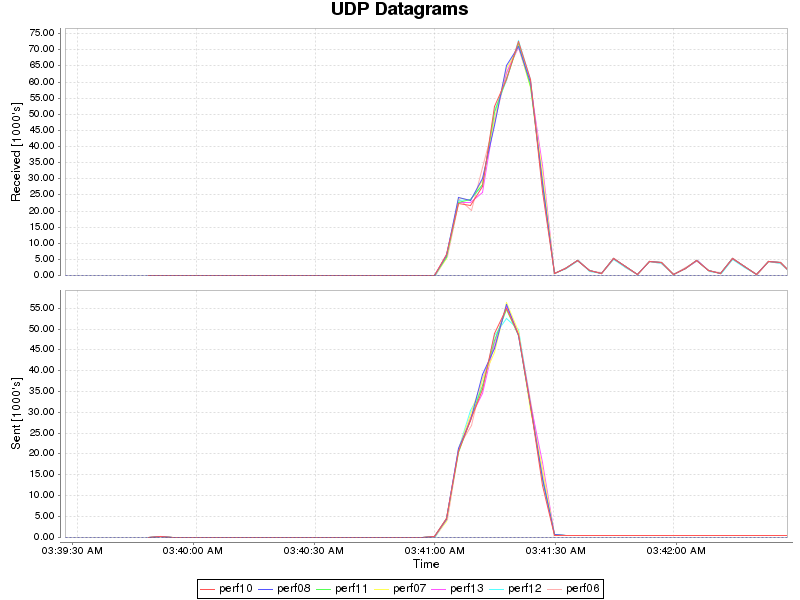

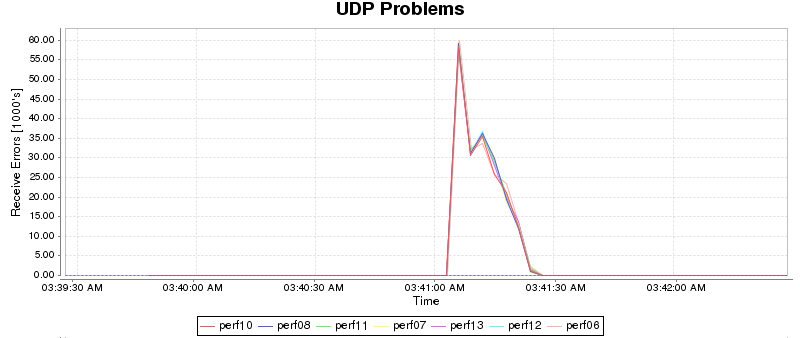

The graphs below show the number of UDP datagrams sent and received over time, as well as the UDP receive errors.
