6. Getting Started Guide

6.1. About the Observability Example

Observability Framework includes a C++ example that you can use to evaluate the capabilities of this product. The example is installed in your rti_workspace directory, in the /examples/observability/c++ folder.

Attention

The provided C++ example is not supported on VxWorks® and Android™ platforms.

This section details how the example is configured and how to run it. When you are ready to test the example, refer to the sections Before Running the Example and Running the Example for instructions.

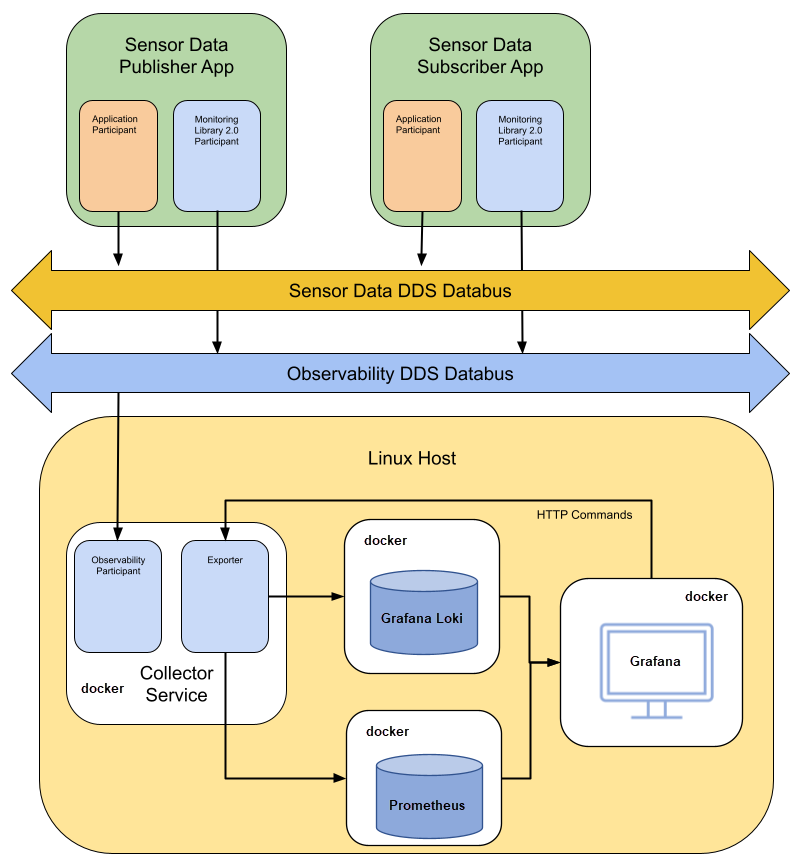

6.1.1. Applications

The example consists of two applications:

One application publishes simulated data generated by temperature sensors.

One application subscribes to the sensor data generated by the temperature sensors.

You can run multiple publishing and subscribing applications on the same host or on multiple hosts within a LAN. Each publishing application can handle multiple sensors, and each subscribing application subscribes to all sensors.

To learn more about the publish/subscribe model, refer to Publish/Subscribe in the RTI Connext Getting Started Guide.

The example applications use Monitoring Library 2.0 to forward telemetry data (logs and metrics) to Collector Service. The collector stores this data in Prometheus (metrics) and Grafana Loki (logs) for analysis and visualization using Grafana.

6.1.2. Data Model

The DDS data model for the Temperature topic used in this example is as follows:

    // Temperature data type
    struct Temperature {
        // ID of the sensor sending the temperature
        @key uint32 sensor_id;
        // Degrees in Celsius
        int32 degrees;
    };

Each sensor represents a different instance in the Temperature topic. For general information about data types and topics, refer to Introduction to DataWriters, DataReaders, and Topics and Data Types in the RTI Connext Getting Started Guide.
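The effect of the @key annotation can be illustrated without the Connext API. The following minimal C++ sketch mirrors the IDL type with a plain struct (a hypothetical TemperatureSample, not the class that rtiddsgen generates) and keeps the latest sample per sensor_id in a hypothetical InstanceCache. Samples that share a key value update the same instance, while a new key value creates a new one.

```cpp
#include <cstddef>
#include <cstdint>
#include <map>

// Hypothetical plain-C++ mirror of the IDL Temperature type.
// This is NOT the class generated by rtiddsgen.
struct TemperatureSample {
    std::uint32_t sensor_id;  // key field (@key in the IDL)
    std::int32_t degrees;     // degrees in Celsius
};

// Keeps the most recent sample for each instance, where an instance
// is identified by the value of the key field (sensor_id).
class InstanceCache {
public:
    void update(const TemperatureSample& sample) {
        latest_[sample.sensor_id] = sample;  // same key -> same instance
    }
    std::size_t instance_count() const { return latest_.size(); }
    // Returns the latest reading for a sensor; throws if the sensor is unknown.
    std::int32_t latest_degrees(std::uint32_t sensor_id) const {
        return latest_.at(sensor_id).degrees;
    }

private:
    std::map<std::uint32_t, TemperatureSample> latest_;
};
```

For example, updating sensors 0, 1, and then 0 again leaves two instances, with sensor 0 holding its most recent value.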

6.1.3. DDS Entity Mapping

The Publisher application creates one DomainParticipant and n DataWriters, where n is the number of sensors published by the application. This number is configurable using the --sensor-count command-line option. Each DataWriter publishes one instance. Refer to Keys and Instances in the RTI Connext Getting Started Guide for more information on instances.

The Subscriber application creates one DomainParticipant and a single DataReader to subscribe to all sensor data.
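The relationship between --init-sensor-id, --sensor-count, and the DataWriters can be sketched as a small C++ helper. This is an illustration under assumptions, not code from the example: plan_writers and WriterPlan are hypothetical names, and the entity name follows the "Sensor with ID=<id>" form that appears in the Grafana dashboards later in this guide. The helper only computes the plan; it does not create any DDS entities.

```cpp
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical description of one DataWriter created by the publisher.
struct WriterPlan {
    std::uint32_t sensor_id;  // the single instance this writer publishes
    std::string entity_name;  // name as displayed in the dashboards
};

// One DataWriter per sensor: IDs init_id, init_id + 1, ..., init_id + count - 1.
std::vector<WriterPlan> plan_writers(std::uint32_t init_id, std::uint32_t count) {
    std::vector<WriterPlan> plan;
    plan.reserve(count);
    for (std::uint32_t i = 0; i < count; ++i) {
        const std::uint32_t id = init_id + i;
        plan.push_back({id, "Sensor with ID=" + std::to_string(id)});
    }
    return plan;
}
```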

6.1.4. Command-Line Parameters

The following command-line switches are available when starting the Publisher and Subscriber applications included in the example. Use this information as a reference when you run the example.

6.1.4.1. Publishing Application

| Parameter | Data Type | Description | Default |
|---|---|---|---|
| -n, --application-name | <str> | Application name | SensorPublisher_<init_sensor_id> |
| -d, --domain | <int> | Application domain ID | 0 |
| -i, --init-sensor-id | <int> | Initial sensor ID | 0 |
| -s, --sensor-count | <int> | Sensor count. Each sensor writes one instance published by a separate DataWriter | 1 |
| -o, --observability-domain | <int> | Domain for sending telemetry data | 2 |
| -c, --collector-peer | <str> | Collector Service peer | localhost |
| -v, --verbosity | <int> | How much debugging output to show, range 0-3 | 1 |
| -p, --protected | N/A | Enable security | disabled |
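To make the defaults in the table concrete, the following C++ sketch parses these switches into a hypothetical PublisherOptions struct. It is not the example's own argument parser; it assumes each option takes its value as the next argument and that -p/--protected is a flag with no value. Note how an omitted -n produces the default name SensorPublisher_<init_sensor_id>.

```cpp
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical option set mirroring the Publishing Application table.
// Defaults come from the table; this is not the example's own parser.
struct PublisherOptions {
    std::string application_name;  // empty -> SensorPublisher_<init_sensor_id>
    int domain = 0;
    int init_sensor_id = 0;
    int sensor_count = 1;
    int observability_domain = 2;
    std::string collector_peer = "localhost";
    int verbosity = 1;            // range 0-3
    bool protected_mode = false;  // -p / --protected enables security
};

PublisherOptions parse_publisher_args(const std::vector<std::string>& args) {
    PublisherOptions opts;
    // Returns the argument that follows option i, or throws if it is missing.
    auto value_at = [&args](std::size_t& i) -> const std::string& {
        if (i + 1 >= args.size()) {
            throw std::invalid_argument("missing value for " + args[i]);
        }
        return args[++i];
    };
    for (std::size_t i = 0; i < args.size(); ++i) {
        const std::string& a = args[i];
        if (a == "-n" || a == "--application-name") {
            opts.application_name = value_at(i);
        } else if (a == "-d" || a == "--domain") {
            opts.domain = std::stoi(value_at(i));
        } else if (a == "-i" || a == "--init-sensor-id") {
            opts.init_sensor_id = std::stoi(value_at(i));
        } else if (a == "-s" || a == "--sensor-count") {
            opts.sensor_count = std::stoi(value_at(i));
        } else if (a == "-o" || a == "--observability-domain") {
            opts.observability_domain = std::stoi(value_at(i));
        } else if (a == "-c" || a == "--collector-peer") {
            opts.collector_peer = value_at(i);
        } else if (a == "-v" || a == "--verbosity") {
            opts.verbosity = std::stoi(value_at(i));
            if (opts.verbosity < 0 || opts.verbosity > 3) {
                throw std::out_of_range("verbosity must be in range 0-3");
            }
        } else if (a == "-p" || a == "--protected") {
            opts.protected_mode = true;
        } else {
            throw std::invalid_argument("unknown option " + a);
        }
    }
    if (opts.application_name.empty()) {
        opts.application_name =
            "SensorPublisher_" + std::to_string(opts.init_sensor_id);
    }
    return opts;
}
```

For instance, parsing -i 5 alone yields the application name SensorPublisher_5, domain 0, and collector peer localhost.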

Publishing applications should not publish data for the same sensor IDs. To avoid overlaps, you will use the -i command-line parameter to specify the initial sensor ID of each publishing application when Running the Example.
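Because each publishing application uses the sensor IDs init_sensor_id through init_sensor_id + sensor_count - 1, you can check a planned set of -i/-s values for conflicts before starting the applications. A minimal C++ sketch, assuming every sensor count is at least 1 (SensorRange and sensor_ids_disjoint are hypothetical names):

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical description of the sensor IDs one publisher instance uses:
// the half-open range [init_id, init_id + count). Assumes count >= 1.
struct SensorRange {
    std::uint32_t init_id;  // value passed with -i
    std::uint32_t count;    // value passed with -s
};

// Two half-open ranges overlap if each one starts before the other ends.
bool ranges_overlap(const SensorRange& a, const SensorRange& b) {
    return a.init_id < b.init_id + b.count && b.init_id < a.init_id + a.count;
}

// Returns true if no two publisher instances share a sensor ID.
bool sensor_ids_disjoint(const std::vector<SensorRange>& ranges) {
    for (std::size_t i = 0; i < ranges.size(); ++i) {
        for (std::size_t j = i + 1; j < ranges.size(); ++j) {
            if (ranges_overlap(ranges[i], ranges[j])) {
                return false;
            }
        }
    }
    return true;
}
```

The values used later in this guide, -i 0 -s 2 and -i 2 -s 1, pass this check; -i 0 -s 2 combined with -i 1 -s 1 would not, because both instances would publish sensor 1.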

6.1.4.2. Subscribing Application

| Parameter | Data Type | Description | Default |
|---|---|---|---|
| -n, --application-name | <str> | Application name | SensorSubscriber |
| -d, --domain | <int> | Application domain ID | 0 |
| -o, --observability-domain | <int> | Domain for sending telemetry data | 2 |
| -c, --collector-peer | <str> | Collector Service peer | localhost |
| -v, --verbosity | <int> | How much debugging output to show, range 0-3 | 1 |
| -p, --protected | N/A | Enable security | disabled |

6.2. Before Running the Example

6.2.1. Set Up Environment Variables

Set up the environment variables for running and compiling the example:

Open a command prompt window.

Run this script:

$ source <installdir>/resource/scripts/rtisetenv_<architecture>.bash

If you’re using the Z shell, run this:

$ source <installdir>/resource/scripts/rtisetenv_<architecture>.zsh

If you’re using the tcsh shell, run this:

$ source <installdir>/resource/scripts/rtisetenv_<architecture>.tcsh

On Windows systems, run this:

> <installdir>/resource/scripts/rtisetenv_<architecture>.bat

<installdir> refers to the installation directory for Connext.

The rtisetenv script adds the location of the SDK libraries (<installdir>/lib/<architecture>) to your library path, sets the NDDSHOME environment variable to point to <installdir>, and puts the RTI Code Generator tool in your path. You may need Code Generator if the makefile for your architecture is not available under the make directory in the example.

Your architecture (such as x64Linux4gcc7.3.0) is the combination of processor, OS, and compiler version that you will use to build your application. For example:

$ source <installdir>/resource/scripts/rtisetenv_x64Linux4gcc7.3.0.bash
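After sourcing the script, a program can confirm that NDDSHOME is visible and derive the library directory that the script adds to the library path. This C++ sketch only reproduces the <installdir>/lib/<architecture> layout described above; the function names are hypothetical, and it assumes POSIX-style / separators.

```cpp
#include <cstdlib>
#include <optional>
#include <string>

// Builds the SDK library directory that rtisetenv adds to the library path:
// <NDDSHOME>/lib/<architecture>
std::string sdk_library_dir(const std::string& nddshome,
                            const std::string& architecture) {
    std::string dir = nddshome;
    if (!dir.empty() && dir.back() == '/') {
        dir.pop_back();  // avoid a double slash
    }
    return dir + "/lib/" + architecture;
}

// Reads NDDSHOME from the environment. An empty optional means the variable
// is not set, which usually means rtisetenv was not sourced in this shell.
std::optional<std::string> nddshome_from_env() {
    const char* value = std::getenv("NDDSHOME");
    if (value == nullptr || *value == '\0') {
        return std::nullopt;
    }
    return std::string(value);
}
```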

6.2.2. Compile the Example

Monitoring Library 2.0 can be used in three different ways:

Dynamically loaded: This method requires that the rtimonitoring2 shared library is in the library search path.

Dynamic linking: The application is linked with the rtimonitoring2 shared library.

Static linking: The application is linked with the rtimonitoring2 static library.
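For the dynamically loaded option, the library must be found in the library search path. The following C++ sketch lists the files a loader would try for rtimonitoring2 in a colon-separated path such as LD_LIBRARY_PATH. It is a simplified view: it assumes the Linux lib<name>.so naming convention and ignores other loader sources, such as rpath entries and the system default directories. The helper names are hypothetical.

```cpp
#include <string>
#include <vector>

// Splits a separator-delimited search path (for example, the value of
// LD_LIBRARY_PATH on Linux) into its directories, skipping empty entries.
std::vector<std::string> split_search_path(const std::string& path, char sep = ':') {
    std::vector<std::string> dirs;
    std::string::size_type start = 0;
    while (true) {
        const auto end = path.find(sep, start);
        const auto stop = (end == std::string::npos) ? path.size() : end;
        if (stop > start) {
            dirs.push_back(path.substr(start, stop - start));
        }
        if (end == std::string::npos) {
            break;
        }
        start = end + 1;
    }
    return dirs;
}

// Candidate files for a shared library in each search directory,
// assuming the Linux naming convention lib<name>.so.
std::vector<std::string> candidate_library_paths(const std::string& search_path,
                                                 const std::string& name) {
    std::vector<std::string> candidates;
    for (const auto& dir : split_search_path(search_path)) {
        candidates.push_back(dir + "/lib" + name + ".so");
    }
    return candidates;
}
```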

You will compile the example using Connext shared libraries so that Monitoring Library 2.0 can be dynamically loaded. The example is installed in your rti_workspace directory, in the /examples/observability/c++ folder.

6.2.2.1. Non-Windows Systems

To build this example on a non-Windows system, type the following in a command shell from the example directory:

$ make -f make/makefile_Temperature_<architecture> DEBUG=0

If there is no makefile for your architecture in the make directory, you can generate it using the following rtiddsgen command:

$ rtiddsgen -language C++98 -create makefiles -platform <architecture> -sharedLib -sourceDir src -d ./make ./src/Temperature.idl

6.2.2.2. Windows Systems

To build this example on Windows, open the appropriate solution file for your version of Microsoft Visual Studio in the win32 directory. To use dynamic linking, select Release DLL from the dropdown menu.

6.2.3. Install Observability Framework

Before running the example, make sure that you have installed both Monitoring Library 2.0 and the collection, storage and visualization components. Refer to the Installing and Running Observability Framework section for instructions.

If you want to run the example with security enabled, you must install Observability Framework using a secure configuration. If your existing installation does not use a secure configuration, delete it as described in section Removing the Docker Workspace for Observability Framework, then update your JSON configuration file as needed. The following sections include example configuration files you can edit for your environment.

The collection, storage, and visualization components can be installed using one of two methods:

Install the components in a Linux host on the same LAN where the applications run, or

Install the components on a remote Linux host (for example, an AWS instance) reachable over the WAN using Real-Time WAN Transport.

Both methods support secure and non-secure configurations.

To facilitate testing secure configurations where all components run on the same node (Docker images, test applications, and browser), artifacts are provided in your rti_workspace directory, in the /examples/dds_security/ folder. The artifacts provided to secure the HTTPS connections use the hostname “localhost”.

The following sections provide example JSON configurations for each of the eight operation modes supported by Observability Framework. These examples use the hostname “localhost”, default port values, and the paths to the default security artifacts where appropriate. You can copy these examples to a local file and use them as is, or customize them with your own hostname, ports, and security artifacts. For details on how to configure Observability Framework, see section Configure the JSON File.

6.2.3.1. Configure Observability Framework for the Appropriate Operation Mode

Important

The provided example configurations work only if you run ALL components (Docker images, test applications, and browser) on the same host machine. If you intend to run any components (test applications or browser) on a remote machine, you must update the hostname field in the JSON configuration file to the hostname of the machine running Observability Framework.

Additionally, if you run in secure mode, you will need to generate the HTTPS security artifacts and the DDS security artifacts as shown in Generating the Observability Framework Security Artifacts. Once you have generated your artifacts, you will need to update the securityConfig section in the JSON configuration file with the paths to these artifacts.

There are eight distinct operation modes you can use to configure Observability Framework. These modes, described below, are based on the desired security level, network environment (LAN or WAN), and use of the OpenTelemetry Collector.

Select the operation mode for the test you want to run, then edit your JSON configuration file with the selected content. For example, if you want to test on a LAN without security, copy the example JSON from section Non-Secure LAN Configuration to the config.json file.

If desired, modify the hostname, ports, or security artifact paths in the config.json file. For example, to use port 9091 for Prometheus, change the “prometheusPort” field in the config.json file from 9090 to 9091.

Run the rtiobservability script to apply your Observability Framework configuration.

$ rtiobservability -c config.json

If you have already configured Observability Framework in a different operation mode than the one you want to test, you must first remove the existing workspace as described in section Removing the Docker Workspace for Observability Framework.
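If you prefer to generate config.json rather than edit it by hand, the following C++ sketch writes the non-secure fields using the keys and default values from the example configurations in this section. ObservabilityConfig, to_json, and ports_are_unique are hypothetical names; the sketch omits securityConfig and assumes the hostname contains no characters that need JSON escaping. The port check is only a convenience against accidentally reusing a port when you customize the values.

```cpp
#include <optional>
#include <set>
#include <sstream>
#include <string>

// Hypothetical model of the non-secure fields in the example configurations.
struct ObservabilityConfig {
    std::string hostname = "localhost";
    int observability_domain = 2;
    int grafana_port = 3000;
    int prometheus_port = 9090;
    int loki_port = 3100;
    int prometheus_exporter_port = 19090;
    int control_port = 19098;
    std::optional<int> rwt_port;                 // WAN modes only
    std::optional<int> otel_http_receiver_port;  // OTel modes only
};

// Returns true if no port value is used twice.
bool ports_are_unique(const ObservabilityConfig& c) {
    std::set<int> seen;
    int count = 0;
    auto add = [&](int port) { seen.insert(port); ++count; };
    add(c.grafana_port);
    add(c.prometheus_port);
    add(c.loki_port);
    add(c.prometheus_exporter_port);
    add(c.control_port);
    if (c.rwt_port) add(*c.rwt_port);
    if (c.otel_http_receiver_port) add(*c.otel_http_receiver_port);
    return static_cast<int>(seen.size()) == count;
}

// Writes the configuration with the same keys as the example files.
std::string to_json(const ObservabilityConfig& c) {
    std::ostringstream out;
    out << "{\n"
        << "  \"hostname\": \"" << c.hostname << "\",\n"
        << "  \"observabilityDomain\": " << c.observability_domain << ",\n"
        << "  \"lgpStackConfig\": {\n"
        << "    \"grafanaPort\": " << c.grafana_port << ",\n"
        << "    \"prometheusPort\": " << c.prometheus_port << ",\n"
        << "    \"lokiPort\": " << c.loki_port << "\n"
        << "  },\n"
        << "  \"collectorConfig\": {\n"
        << "    \"prometheusExporterPort\": " << c.prometheus_exporter_port << ",\n"
        << "    \"controlPort\": " << c.control_port;
    if (c.rwt_port) {
        out << ",\n    \"rwtPort\": " << *c.rwt_port;
    }
    out << "\n  }";
    if (c.otel_http_receiver_port) {
        out << ",\n  \"otelConfig\": {\n"
            << "    \"otelHttpReceiverPort\": " << *c.otel_http_receiver_port
            << "\n  }";
    }
    out << "\n}\n";
    return out.str();
}
```

Setting prometheus_port to 9091 before calling to_json reproduces the Prometheus port change described in the steps above; the resulting file is then passed to rtiobservability -c as before.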

6.2.3.1.1. Example LAN configurations

Table 6.3 lists the four LAN configurations supported by Observability Framework.

| Configuration Name | Network | Data Storage | Security |
|---|---|---|---|
| NonSecureLAN | LAN | Prometheus and Grafana Loki | No |
| SecureLAN | LAN | Prometheus and Grafana Loki | Yes |
| NonSecureOTelLAN | LAN | Multiple through OpenTelemetry Collector | No |
| SecureOTelLAN | LAN | Multiple through OpenTelemetry Collector | Yes |

6.2.3.1.1.1. Non-Secure LAN Configuration

This example configures Observability Framework with hostname “localhost”, default ports, and security disabled. Collector Service will use a LAN configuration.

    {
      "hostname": "localhost",
      "observabilityDomain": 2,
      "lgpStackConfig": {
        "grafanaPort": 3000,
        "prometheusPort": 9090,
        "lokiPort": 3100
      },
      "collectorConfig": {
        "prometheusExporterPort": 19090,
        "controlPort": 19098
      }
    }

6.2.3.1.1.2. Secure LAN Configuration

This example configures Observability Framework with hostname “localhost”, default ports, and security enabled. Collector Service will use a LAN configuration.

    {
      "hostname": "localhost",
      "observabilityDomain": 2,
      "lgpStackConfig": {
        "grafanaPort": 3000,
        "prometheusPort": 9090,
        "lokiPort": 3100
      },
      "collectorConfig": {
        "prometheusExporterPort": 19090,
        "controlPort": 19098
      },
      "securityConfig": {
        "basicAuthUsername": "user",
        "basicAuthPassword": "userpassword",
        "httpsSecurity": {
          "caCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/ca/ecdsa01RootCaCert.pem",
          "serverCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/https/ecdsa01Https01Cert.pem",
          "serverKey": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/https/ecdsa01Https01Key.pem"
        },
        "ddsSecurity": {
          "identityCaCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/ca/ecdsa01RootCaCert.pem",
          "permissionsCaCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/ca/ecdsa01RootCaCert.pem",
          "identityCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/identities/ecdsa01Peer01Cert.pem",
          "identityKey": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/identities/ecdsa01Peer01Key.pem",
          "signedPermissionsFile": "<rti_workspace_dir>/examples/dds_security/xml/signed/signed_ObservabilityCollectorServicePermissions.p7s",
          "signedGovernanceFile": "<rti_workspace_dir>/examples/dds_security/xml/signed/signed_ObservabilityGovernance.p7s"
        }
      }
    }

6.2.3.1.1.3. Non-Secure OTel LAN Configuration

This example configures Observability Framework with hostname “localhost”, default ports, and security disabled. Collector Service will use a LAN configuration and the OpenTelemetry exporter. The OpenTelemetry Collector routes telemetry data from the Collector Service OpenTelemetry exporter to Prometheus and Loki.

    {
      "hostname": "localhost",
      "observabilityDomain": 2,
      "lgpStackConfig": {
        "grafanaPort": 3000,
        "prometheusPort": 9090,
        "lokiPort": 3100
      },
      "collectorConfig": {
        "prometheusExporterPort": 19090,
        "controlPort": 19098
      },
      "otelConfig": {
        "otelHttpReceiverPort": 4318
      }
    }

6.2.3.1.1.4. Secure OTel LAN Configuration

This example configures Observability Framework with hostname “localhost”, default ports, and security enabled. Collector Service will use a LAN configuration and the OpenTelemetry exporter. The OpenTelemetry Collector routes telemetry data from the Collector Service OpenTelemetry exporter to Prometheus and Loki.

    {
      "hostname": "localhost",
      "observabilityDomain": 2,
      "lgpStackConfig": {
        "grafanaPort": 3000,
        "prometheusPort": 9090,
        "lokiPort": 3100
      },
      "collectorConfig": {
        "prometheusExporterPort": 19090,
        "controlPort": 19098
      },
      "otelConfig": {
        "otelHttpReceiverPort": 4318
      },
      "securityConfig": {
        "basicAuthUsername": "user",
        "basicAuthPassword": "userpassword",
        "httpsSecurity": {
          "caCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/ca/ecdsa01RootCaCert.pem",
          "serverCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/https/ecdsa01Https01Cert.pem",
          "serverKey": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/https/ecdsa01Https01Key.pem"
        },
        "ddsSecurity": {
          "identityCaCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/ca/ecdsa01RootCaCert.pem",
          "permissionsCaCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/ca/ecdsa01RootCaCert.pem",
          "identityCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/identities/ecdsa01Peer01Cert.pem",
          "identityKey": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/identities/ecdsa01Peer01Key.pem",
          "signedPermissionsFile": "<rti_workspace_dir>/examples/dds_security/xml/signed/signed_ObservabilityCollectorServicePermissions.p7s",
          "signedGovernanceFile": "<rti_workspace_dir>/examples/dds_security/xml/signed/signed_ObservabilityGovernance.p7s"
        }
      }
    }

6.2.3.1.2. Example WAN configurations

Table 6.4 lists the four WAN configurations supported by Observability Framework.

| Configuration Name | Network | Data Storage | Security |
|---|---|---|---|
| NonSecureWAN | WAN | Prometheus and Grafana Loki | No |
| SecureWAN | WAN | Prometheus and Grafana Loki | Yes |
| NonSecureOTelWAN | WAN | Multiple through OpenTelemetry Collector | No |
| SecureOTelWAN | WAN | Multiple through OpenTelemetry Collector | Yes |
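The eight configuration names in Table 6.3 and Table 6.4 follow from three choices: security, use of the OpenTelemetry Collector, and LAN versus WAN. The following C++ sketch (hypothetical helper names) derives the name and lists the top-level JSON sections each mode uses, based on the example configurations in this section. WAN modes differ from LAN modes only by the rwtPort field inside collectorConfig, so the network choice does not change the top-level sections.

```cpp
#include <string>
#include <vector>

// Derives the operation-mode name used in the LAN and WAN tables.
std::string operation_mode_name(bool secure, bool use_otel, bool wan) {
    std::string name = secure ? "Secure" : "NonSecure";
    if (use_otel) {
        name += "OTel";
    }
    name += wan ? "WAN" : "LAN";
    return name;
}

// Top-level keys that appear in the example configuration for a mode.
std::vector<std::string> top_level_sections(bool secure, bool use_otel) {
    std::vector<std::string> sections = {
        "hostname", "observabilityDomain", "lgpStackConfig", "collectorConfig"};
    if (use_otel) {
        sections.push_back("otelConfig");
    }
    if (secure) {
        sections.push_back("securityConfig");
    }
    return sections;
}
```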

6.2.3.1.2.1. Non-Secure WAN Configuration

This example configures Observability Framework with hostname “localhost”, default ports, and security disabled. Collector Service will use a WAN configuration with port 30000.

    {
      "hostname": "localhost",
      "observabilityDomain": 2,
      "lgpStackConfig": {
        "grafanaPort": 3000,
        "prometheusPort": 9090,
        "lokiPort": 3100
      },
      "collectorConfig": {
        "prometheusExporterPort": 19090,
        "controlPort": 19098,
        "rwtPort": 30000
      }
    }

6.2.3.1.2.2. Secure WAN Configuration

This example configures Observability Framework with hostname “localhost”, default ports, and security enabled. Collector Service will use a WAN configuration with port 30000.

    {
      "hostname": "localhost",
      "observabilityDomain": 2,
      "lgpStackConfig": {
        "grafanaPort": 3000,
        "prometheusPort": 9090,
        "lokiPort": 3100
      },
      "collectorConfig": {
        "prometheusExporterPort": 19090,
        "controlPort": 19098,
        "rwtPort": 30000
      },
      "securityConfig": {
        "basicAuthUsername": "user",
        "basicAuthPassword": "userpassword",
        "httpsSecurity": {
          "caCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/ca/ecdsa01RootCaCert.pem",
          "serverCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/https/ecdsa01Https01Cert.pem",
          "serverKey": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/https/ecdsa01Https01Key.pem"
        },
        "ddsSecurity": {
          "identityCaCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/ca/ecdsa01RootCaCert.pem",
          "permissionsCaCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/ca/ecdsa01RootCaCert.pem",
          "identityCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/identities/ecdsa01Peer01Cert.pem",
          "identityKey": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/identities/ecdsa01Peer01Key.pem",
          "signedPermissionsFile": "<rti_workspace_dir>/examples/dds_security/xml/signed/signed_ObservabilityCollectorServicePermissions.p7s",
          "signedGovernanceFile": "<rti_workspace_dir>/examples/dds_security/xml/signed/signed_ObservabilityGovernance.p7s"
        }
      }
    }

6.2.3.1.2.3. Non-Secure OTel WAN Configuration

This example configures Observability Framework with hostname “localhost”, default ports, and security disabled. Collector Service will use a WAN configuration with port 30000 and the OpenTelemetry exporter. The OpenTelemetry Collector routes telemetry data from the Collector Service OpenTelemetry exporter to Prometheus and Loki.

    {
      "hostname": "localhost",
      "observabilityDomain": 2,
      "lgpStackConfig": {
        "grafanaPort": 3000,
        "prometheusPort": 9090,
        "lokiPort": 3100
      },
      "collectorConfig": {
        "prometheusExporterPort": 19090,
        "controlPort": 19098,
        "rwtPort": 30000
      },
      "otelConfig": {
        "otelHttpReceiverPort": 4318
      }
    }

6.2.3.1.2.4. Secure OTel WAN Configuration

This example configures Observability Framework with hostname “localhost”, default ports, and security enabled. Collector Service will use a WAN configuration with port 30000 and the OpenTelemetry exporter. The OpenTelemetry Collector routes telemetry data from the Collector Service OpenTelemetry exporter to Prometheus and Loki.

    {
      "hostname": "localhost",
      "observabilityDomain": 2,
      "lgpStackConfig": {
        "grafanaPort": 3000,
        "prometheusPort": 9090,
        "lokiPort": 3100
      },
      "collectorConfig": {
        "prometheusExporterPort": 19090,
        "controlPort": 19098,
        "rwtPort": 30000
      },
      "otelConfig": {
        "otelHttpReceiverPort": 4318
      },
      "securityConfig": {
        "basicAuthUsername": "user",
        "basicAuthPassword": "userpassword",
        "httpsSecurity": {
          "caCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/ca/ecdsa01RootCaCert.pem",
          "serverCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/https/ecdsa01Https01Cert.pem",
          "serverKey": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/https/ecdsa01Https01Key.pem"
        },
        "ddsSecurity": {
          "identityCaCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/ca/ecdsa01RootCaCert.pem",
          "permissionsCaCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/ca/ecdsa01RootCaCert.pem",
          "identityCertificate": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/identities/ecdsa01Peer01Cert.pem",
          "identityKey": "<rti_workspace_dir>/examples/dds_security/cert/ecdsa01/identities/ecdsa01Peer01Key.pem",
          "signedPermissionsFile": "<rti_workspace_dir>/examples/dds_security/xml/signed/signed_ObservabilityCollectorServicePermissions.p7s",
          "signedGovernanceFile": "<rti_workspace_dir>/examples/dds_security/xml/signed/signed_ObservabilityGovernance.p7s"
        }
      }
    }

6.2.4. Start the Collection, Storage, and Visualization Docker Containers

The Docker containers used for data collection, storage, and visualization can either be run in a Linux host on the same LAN where the applications run or they can be installed on a remote Linux host (for example, an AWS instance) reachable over the WAN using Real-Time WAN Transport.

There may be different licensing requirements depending on the configuration (LAN/WAN, Secure/Non-Secure) you have chosen to run. For details on the license requirements and instructions on how to run the containers, see section Initialize and Run Docker Containers.

6.3. Running the Example

Table 6.5 lists optional command-line parameters you can use when running the Observability Framework example. Choose the options appropriate for your test environment.

| Parameter | Description | Default Value |
|---|---|---|
| -o, --observability-domain | Use this command-line option if you want to override the default domain ID used by Monitoring Library 2.0 to send telemetry data to Collector Service. | 2 |
| -c, --collector-peer | If you run Collector Service on a different host from the applications, use this command-line option to provide the address of the service. For example, 192.168.1.1 (for LAN), or udpv4_wan://10.56.78.89:16000 (for WAN). | localhost |

In addition, if you run the applications on different hosts and multicast is not available, use the NDDS_DISCOVERY_PEERS environment variable to configure the peers where the applications run.
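NDDS_DISCOVERY_PEERS takes a comma-separated list of peer descriptors. The following C++ sketch builds such a value from a list of host addresses. It assumes the applications discover each other over UDPv4 and therefore uses the udpv4:// prefix; discovery_peers is a hypothetical helper, and the addresses in the usage note are placeholders.

```cpp
#include <string>
#include <vector>

// Builds a value for NDDS_DISCOVERY_PEERS: a comma-separated list of peer
// descriptors, one "udpv4://<host>" entry per host (UDPv4 is an assumption).
std::string discovery_peers(const std::vector<std::string>& hosts) {
    std::string value;
    for (const auto& host : hosts) {
        if (!value.empty()) {
            value += ',';
        }
        value += "udpv4://" + host;
    }
    return value;
}
```

For hosts 192.168.1.10 and 192.168.1.11, the result is udpv4://192.168.1.10,udpv4://192.168.1.11, which you would set in the environment of every terminal where an application runs.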

For simplicity, the following instructions assume that you are running the applications and the Docker containers used by Observability Framework on the same host using the default observability domain.

6.3.1. Start the Applications

This example assumes x64Linux4gcc7.3.0 as the architecture. The following steps include instructions for non-secure and secure tests.

In a new browser window, go to http[s]://localhost:3000 and log in using your Grafana dashboard credentials. Note the use of https if you are running a secure configuration.

The default Grafana dashboard credentials are admin:admin for non-secure configurations, and user:userpassword for secure configurations (as configured in the JSON file). At this point, no DDS applications are running.

From the example directory, open two terminals and start two instances of the application that publishes temperature sensor data. The command and resulting output for each instance are shown below.

The -i parameter specifies the initial sensor ID. The -n parameter assigns a name to the application; this name will be used when sending commands in the Change the Application Logging Verbosity and Change the Metric Configuration sections of this example. The -p parameter enables security when using a secure configuration. See Command-Line Parameters for a description of all available options.

The first instance creates two sensors.

Non-secure configuration:

    $ ./objs/x64Linux4gcc7.3.0/Temperature_publisher -n SensorPublisher_1 -d 57 -i 0 -s 2 -v 2
    **********************************************************
    ******** Temperature Sensor Publisher App ****************
    **********************************************************
    Running with parameters:
    Application Resource Name: /applications/SensorPublisher_1
    Domain ID: 57
    Init Sensor ID: 0
    Sensor Count: 2
    Observability Domain: 2
    Collector Peer: udpv4://localhost
    Verbosity: 2
    Security: false
    Running with QOS: Temperature_Profile_With_Monitoring2_Over_LAN
    Command>

Secure configuration:

    $ ./objs/x64Linux4gcc7.3.0/Temperature_publisher -n SensorPublisher_1 -d 57 -i 0 -s 2 -p -v 2
    **********************************************************
    ******** Temperature Sensor Publisher App ****************
    **********************************************************
    Running with parameters:
    Application Resource Name: /applications/SensorPublisher_1
    Domain ID: 57
    Init Sensor ID: 0
    Sensor Count: 2
    Observability Domain: 2
    Collector Peer: udpv4://localhost
    Verbosity: 2
    Security: true
    Running with QOS: Secure_Temperature_Profile_With_Monitoring2_Over_LAN
    Command>

The second instance creates one sensor.

Note that the sensor IDs used by different instances of the temperature publisher application should not overlap. The first instance used the switches -i 0 and -s 2, creating two sensors with IDs 0 and 1. The second instance uses -i 2 and -s 1, creating one sensor with ID 2.

Non-secure configuration:

    $ ./objs/x64Linux4gcc7.3.0/Temperature_publisher -n SensorPublisher_2 -d 57 -i 2 -s 1 -v 2
    **********************************************************
    ******** Temperature Sensor Publisher App ****************
    **********************************************************
    Running with parameters:
    Application Resource Name: /applications/SensorPublisher_2
    Domain ID: 57
    Init Sensor ID: 2
    Sensor Count: 1
    Observability Domain: 2
    Collector Peer: udpv4://localhost
    Verbosity: 2
    Security: false
    Running with QOS: Temperature_Profile_With_Monitoring2_Over_LAN
    Command>

Secure configuration:

    $ ./objs/x64Linux4gcc7.3.0/Temperature_publisher -n SensorPublisher_2 -d 57 -i 2 -s 1 -p -v 2
    **********************************************************
    ******** Temperature Sensor Publisher App ****************
    **********************************************************
    Running with parameters:
    Application Resource Name: /applications/SensorPublisher_2
    Domain ID: 57
    Init Sensor ID: 2
    Sensor Count: 1
    Observability Domain: 2
    Collector Peer: udpv4://localhost
    Verbosity: 2
    Security: true
    Running with QOS: Secure_Temperature_Profile_With_Monitoring2_Over_LAN
    Command>

From the example directory, open a new terminal and start one instance of the application that subscribes to temperature sensor data.

Non-secure configuration:

    $ ./objs/x64Linux4gcc7.3.0/Temperature_subscriber -n SensorSubscriber -d 57 -v 2
    **********************************************************
    ******** Temperature Sensor Subscriber App ****************
    **********************************************************
    Running with parameters:
    Application Resource Name: /applications/SensorSubscriber
    Domain ID: 57
    Observability Domain: 2
    Collector Peer: udpv4://localhost
    Verbosity: 2
    Security: false
    Running with QOS: Temperature_Profile_With_Monitoring2_Over_LAN
    Command>

Secure configuration:

    $ ./objs/x64Linux4gcc7.3.0/Temperature_subscriber -n SensorSubscriber -d 57 -p -v 2
    **********************************************************
    ******** Temperature Sensor Subscriber App ****************
    **********************************************************
    Running with parameters:
    Application Resource Name: /applications/SensorSubscriber
    Domain ID: 57
    Observability Domain: 2
    Collector Peer: udpv4://localhost
    Verbosity: 2
    Security: true
    Running with QOS: Secure_Temperature_Profile_With_Monitoring2_Over_LAN
    Command>

Note

The two Publisher applications and the Subscriber application are started with verbosity set to WARNING (-v 2). You may see any of the following warnings on the console output. These warnings are expected.

WARNING [0x01017774,0xFF40EEF6,0xEC566CA8:0x000001C1{Domain=2}|ENABLE|LC:Discovery]NDDS_Transport_UDPv4_Socket_bind_with_ip:0X1EE6 in use

WARNING [0x01017774,0xFF40EEF6,0xEC566CA8:0x000001C1{Domain=2}|ENABLE|LC:Discovery]NDDS_Transport_UDPv4_SocketFactory_create_receive_socket:invalid port 7910

WARNING [0x01017774,0xFF40EEF6,0xEC566CA8:0x000001C1{Domain=2}|ENABLE|LC:Discovery]NDDS_Transport_UDP_create_recvresource_rrEA:!create socket

WARNING [0x010175D0,0x7A41F985,0xF3813392:0x000001C1{Name=Temperature DomainParticipant,Domain=57}|ENABLE|LC:Discovery]NDDS_Transport_UDPv4_Socket_bind_with_ip:0X549C in use

WARNING [0x010175D0,0x7A41F985,0xF3813392:0x000001C1{Name=Temperature DomainParticipant,Domain=57}|ENABLE|LC:Discovery]NDDS_Transport_UDPv4_SocketFactory_create_receive_socket:invalid port 21660

WARNING [0x010175D0,0x7A41F985,0xF3813392:0x000001C1{Name=Temperature DomainParticipant,Domain=57}|ENABLE|LC:Discovery]NDDS_Transport_UDP_create_recvresource_rrEA:!create socket

WARNING [0x010175D0,0x7A41F985,0xF3813392:0x000001C1{Name=Temperature DomainParticipant,Domain=57}|ENABLE|LC:Discovery]DDS_DomainParticipantDiscovery_add_peer:no peer locators for: peer descriptor(s) = "builtin.shmem://", transports = "", enabled_transports = ""

Your Grafana dashboard should now display information about the new Hosts, Applications, and DDS entities (Participants, DataWriters, and DataReaders). There should be 1 Host, 3 Applications, 3 Participants, 3 DataWriters, 1 DataReader, and 1 Topic.

The Grafana main dashboard pictured above indicates that the system is healthy. You may see warnings in the log section related to the reservation of communication ports. These warnings are expected. You can select the Warnings panel to visualize them.

Next, you will introduce different failures that will affect the system’s health.

6.3.2. Changing the Time Range in Dashboards

While running the examples, you can change the time range in the dashboards to reduce or expand the amount of history data displayed. Use the time picker dropdown at the top right to change the time range in any dashboard.

The time picker includes a predefined list of time ranges to choose from. If you want to use a custom time range, enter the desired range in the From field using the format “now-<N><unit>”, where <N> is a number and <unit> is a time unit; Grafana supports m (minutes), h (hours), and d (days). For example, to show a custom range of one minute, enter “now-1m” in the From field, then select Apply Time Range.
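The relative format is simple enough to check mechanically. This C++ sketch converts values such as now-1m into a std::chrono duration. It handles only the m, h, and d units mentioned above, and parse_relative_range is a hypothetical name, not part of Grafana.

```cpp
#include <cctype>
#include <chrono>
#include <optional>
#include <string>

// Converts a relative "From" value such as "now-15m" into a duration.
// Only the m (minute), h (hour), and d (day) units are handled; any other
// input returns std::nullopt. Very large numbers make std::stoll throw.
std::optional<std::chrono::seconds> parse_relative_range(const std::string& text) {
    const std::string prefix = "now-";
    // Need the prefix, at least one digit, and a unit character.
    if (text.size() < prefix.size() + 2 ||
        text.compare(0, prefix.size(), prefix) != 0) {
        return std::nullopt;
    }
    const std::string amount =
        text.substr(prefix.size(), text.size() - prefix.size() - 1);
    for (char ch : amount) {
        if (!std::isdigit(static_cast<unsigned char>(ch))) {
            return std::nullopt;
        }
    }
    const long long n = std::stoll(amount);
    switch (text.back()) {
        case 'm': return std::chrono::seconds(n * 60);
        case 'h': return std::chrono::seconds(n * 3600);
        case 'd': return std::chrono::seconds(n * 86400);
        default:  return std::nullopt;
    }
}
```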

Note

The time range may be changed on any dashboard, but all changes are temporary and will reset to 1 hour when you return to the Alert Home dashboard. Changes to the time range made in the Alert Home dashboard are unique in that the selected time range will be propagated to other dashboards as you navigate through the hierarchy.

6.3.3. Simulate Sensor Failure

The DataWriters in each application are expected to send sensor data every second, and the DataReader expects to receive sensor data from each sensor every second. This QoS contract is enforced with the Deadline QoS Policy set in USER_QOS_PROFILES.xml. Refer to Deadline QoS Policy in the RTI Connext Getting Started Guide for basic information, or DEADLINE QoSPolicy in the RTI Connext Core User’s Manual for detailed information.

    <deadline>
        <period>
            <sec>1</sec>
            <nanosec>0</nanosec>
        </period>
    </deadline>
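Conceptually, the deadline contract means that every instance must get a new sample at least once per period. The following C++ sketch is a simplified application-level model of that rule, not how Connext detects missed deadlines. Given the time of the last sample per sensor, it reports the sensors whose last sample is older than the 1-second period; missed_deadlines is a hypothetical name.

```cpp
#include <chrono>
#include <cstdint>
#include <map>
#include <vector>

using Clock = std::chrono::steady_clock;

// Returns the IDs of sensors whose most recent sample is older than the
// deadline period at time `now` (simplified model of the Deadline contract).
std::vector<std::uint32_t> missed_deadlines(
        const std::map<std::uint32_t, Clock::time_point>& last_sample_time,
        Clock::time_point now,
        Clock::duration period = std::chrono::seconds(1)) {
    std::vector<std::uint32_t> missed;
    for (const auto& [sensor_id, last] : last_sample_time) {
        if (now - last > period) {
            missed.push_back(sensor_id);
        }
    }
    return missed;
}
```

When a sensor is stopped, its last-sample time stops advancing, so after more than one second it appears in the result; this is the kind of violation the Delays panel reports.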

To simulate a failure in the sensor with ID 0, enter the following command in the first Temperature_publisher instance:

Command> stop 0

The Grafana dashboard updates to indicate the sensor failure. The dashboard does not update immediately; you may have to wait a few seconds to see the change reflecting the sensor failure as a Delay error. That error is expected because the deadline policy was violated when you stopped the sensor with ID 0.

The Grafana dashboards are hierarchical. Now that you know something is happening related to latency (or delays), you can get additional details to determine the root cause. Select the Delays panel to drill down to the next level and get more information about the error.

The second level of the Grafana dashboard indicates that there were deadline errors, which can be generated by both the DataReaders and DataWriters of the sensor Topic. Still, we do not know which sensor the problem originated from. To determine that, we have to go one level deeper; select the Missed Writer Deadline panel to see which DataWriter caused the problem.

The third level of the Grafana dashboard provides a list of entities generating the deadline metric. In this case we see three entities, or DataWriters, each associated with a different sensor. We see that an entity is failing, but what sensor does that entity represent?

Looking at the DataWriter Name column, we can see that the failing sensor has the name “Sensor with ID=0”. The example application sets this name using the EntityName QoS Policy when creating the DataWriter. If you want additional information, such as the machine where the sensor DataWriter is located, select the Sensor with ID=0 link in the DataWriter Name column.

The fourth and last level of the Grafana dashboard provides detailed information about an individual entity, including location-related information such as Host Name and Process Id.

In addition, this last level provides information about individual metrics for the entity. Scroll down to see the details of the missed deadline metric.

Next, restore the health of the failing sensor to see how the dashboard changes. To restart the sensor with ID 0, enter the start 0 command in the first Temperature_publisher instance:

Command> start 0

Go back to the Alert Home dashboard to confirm that the sensor becomes healthy. After a few seconds, the Delays panel should indicate that the sensor is healthy. Note that the status part of the Delays panel still displays an “Error” state (red) if, at any time in the displayed time range, a “Delay” metric in the system was considered “unhealthy” (that is, its value exceeded the configured limits).
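The rule in the note above, that a panel stays red while any unhealthy value remains in the displayed time range, can be sketched as follows. This is a hypothetical model, not the query the dashboards actually run: it assumes each metric point has a timestamp in seconds and a value, and that “unhealthy” means the value exceeds a configured limit. The names MetricPoint, panel_status, and currently_healthy are illustrative.

```cpp
#include <cassert>
#include <vector>

// Hypothetical metric sample: time in seconds and the metric value.
struct MetricPoint {
    double time_s;
    double value;
};

enum class PanelStatus { Healthy, Error };

// Status over the displayed window [window_start_s, window_end_s]:
// Error if ANY point inside the window exceeded the limit, even if the
// most recent point is healthy again.
PanelStatus panel_status(const std::vector<MetricPoint>& points,
                         double window_start_s, double window_end_s,
                         double limit) {
    assert(window_start_s <= window_end_s);
    for (const MetricPoint& p : points) {
        const bool in_window =
            p.time_s >= window_start_s && p.time_s <= window_end_s;
        if (in_window && p.value > limit) {
            return PanelStatus::Error;
        }
    }
    return PanelStatus::Healthy;
}

// Current health looks only at the latest point.
// Assumes 'points' is sorted by time (oldest first).
bool currently_healthy(const std::vector<MetricPoint>& points, double limit) {
    return points.empty() || points.back().value <= limit;
}
```

With a 1-hour window, a Delay error from a few minutes ago keeps panel_status at Error even though currently_healthy is already true; narrowing the window until the old error falls outside it turns the status back to Healthy.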

6.3.4. Simulate Slow Sensor Data Consumption

A subscribing application can be configured to consume sensor data at a lower rate than the publication rate. In a real scenario, this could occur if the subscribing application becomes CPU bound.

This scenario simulates a problem in the subscribing application: a bug in the application logic makes it slow to process sensor data. To test this failure, enter the slow_down command in the Temperature_subscriber instance:

Command> slow_down

After a few seconds, the Grafana dashboard displays two new system errors, related to saturation and to unexpected data loss. Because the DataReader cannot keep up with the sensor data, the dashboard indicates potential data loss. Being unable to keep up with the sensor data can also be a sign of saturation; for example, the subscribing application may be consuming 100% of the CPU due to an application bug.

As you did when testing the sensor failure, select the displayed errors to navigate the dashboard hierarchy and determine the root cause of the problem. To go to the second level, select the Data Loss panel to see the reason for the losses. Because you slowed the subscriber application, the DataReader is not able to read fast enough. The Dropped Samples due to History Depth metric reveals the type of failure. Select the red errors to drill down and review further details about the problem.
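The “Dropped Samples due to History Depth” failure can be illustrated with a bounded per-instance queue. The sketch below is a simplified model that assumes KEEP_LAST history semantics: when a sensor instance already holds depth unread samples, the oldest one is discarded to make room for the new one and is counted as dropped. The class name and the depth used in the usage example are hypothetical; the example's real history settings come from its QoS profile.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <map>

// Simplified model of a DataReader queue with KEEP_LAST history.
// Each instance (sensor_id) keeps at most 'depth' unread samples; when a
// new sample arrives and the instance queue is full, the oldest unread
// sample is discarded and counted as dropped.
class KeepLastHistory {
public:
    explicit KeepLastHistory(std::size_t depth) : depth_(depth) {
        assert(depth_ > 0);
    }

    void on_receive(std::uint32_t sensor_id, std::int32_t degrees) {
        std::deque<std::int32_t>& q = queues_[sensor_id];
        if (q.size() == depth_) {
            q.pop_front();  // oldest unread sample is lost
            ++dropped_;
        }
        q.push_back(degrees);
    }

    // The application takes one sample of the instance, if available.
    bool take(std::uint32_t sensor_id, std::int32_t& out) {
        auto it = queues_.find(sensor_id);
        if (it == queues_.end() || it->second.empty()) {
            return false;
        }
        out = it->second.front();
        it->second.pop_front();
        return true;
    }

    std::size_t dropped() const { return dropped_; }

private:
    std::size_t depth_;
    std::map<std::uint32_t, std::deque<std::int32_t>> queues_;
    std::size_t dropped_ = 0;
};
```

When the writer delivers one sample per second and the slowed-down application calls take less often than that, dropped() keeps growing; once the application takes samples at least as fast as they arrive, the count stops increasing.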

After reviewing the errors, restore the health of the failing DataReader by entering the speed_up command in the Temperature_subscriber application:

Command> speed_up

In Grafana, go back to the Alert Home dashboard and wait until the system becomes healthy again. After a few seconds, the Saturation and Data Loss panels should indicate a healthy system. Also, adjust the time window to one minute so that the earlier errors fall outside the displayed time range, and wait until all the system status panels are green again.

6.3.5. Simulate Time Synchronization Failures

In the example, the subscribing applications are configured to expect that all system clocks are synchronized within 1 second: the source timestamp that the publishing application associates with a sensor sample must not be later than the sample's reception timestamp by more than a configurable tolerance. This behavior is configured using the DestinationOrder QoS Policy set in USER_QOS_PROFILES.xml.

<destination_order>
    <kind>BY_SOURCE_TIMESTAMP_DESTINATIONORDER_QOS</kind>
    <source_timestamp_tolerance>
        <sec>1</sec>
        <nanosec>0</nanosec>
    </source_timestamp_tolerance>
</destination_order>

This final simulation demonstrates how to use logging information to troubleshoot problems. In this scenario, you'll create a clock synchronization issue in the first instance of Temperature_publisher: its clock will move a few seconds into the future, causing the DataReader to drop some sensor samples from the publishing application.

To simulate this scenario, enter clock_forward 0 in the first Temperature_publisher instance. This causes the publishing application to artificially advance the clock used for the source timestamps of the DataWriter named “Sensor with ID=0” by 2 seconds.

Command> clock_forward 0
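The check configured by source_timestamp_tolerance reduces to one comparison. The following sketch models only the rule described above (reject a sample whose source timestamp is ahead of its reception timestamp by more than the tolerance); it is not Connext code, and the function name accept_sample is hypothetical.

```cpp
#include <cassert>
#include <chrono>

using Nanos = std::chrono::nanoseconds;

// Simplified model of the source-timestamp tolerance check: reject a
// sample whose source timestamp is in the future, relative to its
// reception timestamp, by more than 'tolerance'.
bool accept_sample(Nanos source_timestamp, Nanos reception_timestamp,
                   Nanos tolerance) {
    assert(tolerance >= Nanos::zero());
    return source_timestamp - reception_timestamp <= tolerance;
}
```

With the 1-second tolerance from the XML above, a sample stamped 2 seconds ahead, as produced by clock_forward 0 when network latency is negligible, fails the check, while a sample stamped 0.5 seconds ahead is still accepted.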

After a few seconds, three panels in the system status section turn red: Data Loss, System Errors, and Delays. All three are affected by the same underlying problem. You can select the red errors to drill down through the dashboard hierarchy and determine the root cause of the problem.

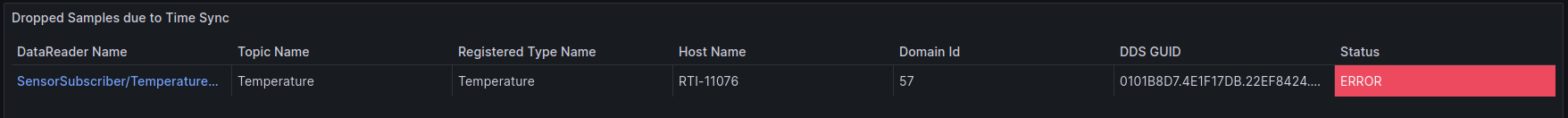

First, select the Data Loss panel to see the reason for the error. The DataReader dropped samples coming from one or more of the DataWriters due to time synchronization issues.

This error indicates that the DataReader in the subscribing application dropped some samples, but it does not yet identify which sensor or DataWriter caused the problem. To determine that, select the Dropped Samples due to Time Sync panel.

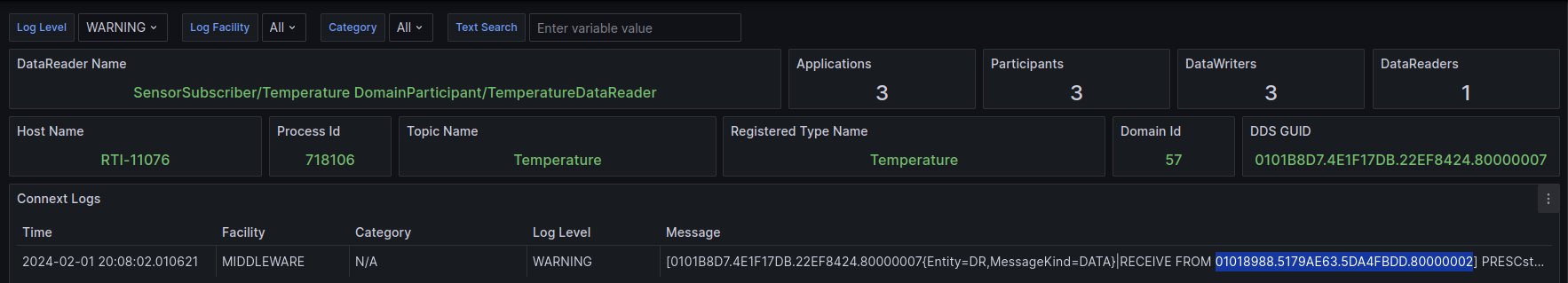

At this level, you can locate the DataReader reporting the error, but not the DataWriter causing it. Select the TemperatureSensor link in the DataReader Name column to go one more level down.

On the endpoint dashboard, there is one log warning associated with the DataReader reporting time synchronization issues. Select the red Log Warning to view the warning message logged by the DataReader.

This warning message provides information about the GUID of the DataWriter that published the sensor data that was dropped due to time synchronization issues. But how do we locate the DataWriter from its GUID?

Note the highlighted RECEIVE FROM GUID in the log message. This GUID identifies the DataWriter that sent the dropped samples. (You can copy this GUID now.)

Select the DataWriters panel to view a list of the running DataWriters.

Now that we have a list of DataWriters, we can compare their GUIDs with the GUID in the log message to find the problem DataWriter. In this case the list does not have a lot of entries, so you can search manually.

However, when the list is long, select the funnel icon next to the GUID label and type the GUID (or paste the value copied from the log message) to filter the list down to the DataWriter with time synchronization issues.
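The search above is a correlation between the GUID in the log message and the GUIDs of the listed DataWriters. The sketch below shows that step with hypothetical types (WriterRow, find_writer_by_guid); it assumes GUIDs are compared as text after trimming whitespace and ignoring letter case, which tolerates a value pasted with extra spaces. The GUID strings used to test it are placeholders, not values produced by the example.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <optional>
#include <string>
#include <vector>

// Hypothetical row of the DataWriters list: display name and GUID text.
struct WriterRow {
    std::string name;
    std::string guid;
};

// Normalize a GUID string: trim surrounding whitespace, lowercase letters.
std::string normalize_guid(const std::string& text) {
    const auto first = text.find_first_not_of(" \t\r\n");
    if (first == std::string::npos) {
        return "";
    }
    const auto last = text.find_last_not_of(" \t\r\n");
    std::string out = text.substr(first, last - first + 1);
    std::transform(out.begin(), out.end(), out.begin(), [](unsigned char c) {
        return static_cast<char>(std::tolower(c));
    });
    return out;
}

// Return the name of the DataWriter whose GUID matches the logged GUID,
// or no value if none of the rows match.
// Precondition: 'logged_guid' is not empty or whitespace-only.
std::optional<std::string> find_writer_by_guid(
        const std::vector<WriterRow>& rows, const std::string& logged_guid) {
    const std::string wanted = normalize_guid(logged_guid);
    assert(!wanted.empty());
    for (const WriterRow& row : rows) {
        if (normalize_guid(row.guid) == wanted) {
            return row.name;
        }
    }
    return std::nullopt;
}
```

Given rows for “Sensor with ID=0” and “Sensor with ID=1”, looking up the GUID copied from the warning returns the name of the matching DataWriter, or no value if none of the listed writers match.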

Finally, select the problem DataWriter to learn its identity.

The problem DataWriter corresponds to sensor 0. You have successfully done root cause analysis by correlating metrics and logging.

6.3.6. Change the Application Logging Verbosity

Monitoring Library 2.0 has two verbosity settings.

Collection verbosity controls the level of log messages an application generates.

Forwarding verbosity controls the level of log messages an application forwards to the Collector Service (making the messages visible in the dashboard).

For additional information on logging, refer to Logs.

By default, Monitoring Library 2.0 only forwards error and warning log messages, even if the applications generate more verbose logging. Forwarding messages at a higher verbosity for all applications may saturate the network and the different Observability Framework components, such as Collector Service and the logging aggregation backend (Grafana Loki in this example).

However, in some cases you may want to change the logging Collection verbosity and/or the Forwarding verbosity for specific applications to obtain additional information when doing root cause analysis.

In this section, you will increase both the Collection and Forwarding verbosity levels for the first publishing application using a remote command. To do that, you will use the application resource name assigned with the -n command-line option. The three applications have the following names:

/applications/SensorPublisher_1

/applications/SensorPublisher_2

/applications/SensorSubscriber

To change the Collection verbosity:

From the Alert Home dashboard, select the Applications panel to open the Application List dashboard.

From the Application List dashboard, select the SensorPublisher_1 link to open the Alert Application Status dashboard.

From the Alert Application Status dashboard, select the Configure Log Verbosity button to open the Log Control dashboard.

From the Log Control dashboard’s Log Collection Verbosity panel, select DEBUG for the MIDDLEWARE facility.

Note that the verbosity setting color changes to indicate the update. Also, the Set Collection Verbosity button becomes available.

Select the Set Collection Verbosity button. When prompted to confirm the update, select Confirm to set the Collection verbosity level to DEBUG at the application.

The selected application's Collection verbosity is now DEBUG. If you examine the terminal window for SensorPublisher_1, you will see messages like the following:

DEBUG MIGInterpreter_parse:ACK from 0X1013131,0X4856CAC6
COMMENDSrWriterService_onSubmessage:[1689963305,136481175] writer oid 0x80002102 receives ACKNACK from reader 0x1013131.4856cac6.bd3f33f5.80000007 for lead [(0000000000,00013981)] bitcount(0), epoch(41048), isPureNack(0)
DEBUG COMMENDActiveFacadeReceiver_loop:rCoTemnt##02Rcv returning message loan
DEBUG NDDS_Transport_UDP_receive_rEA:rCoTemnt##02Rcv blocking on 0X549D
DEBUG NDDS_Transport_UDP_receive_rEA:rCoTemnt##02Rcv received 64 bytes from 0X100007F|40284
DEBUG RTINetioReceiver_receiveFast:rCoTemnt##02Rcv received 64 bytes
DEBUG COMMENDActiveFacadeReceiver_loop:rCoTemnt##02Rcv parsing message
DEBUG MIGInterpreter_parse:INFO_DST from 0X1013131,0X4856CAC6
DEBUG MIGInterpreter_parse:ACK from 0X1013131,0X4856CAC6
DEBUG COMMENDActiveFacadeReceiver_loop:rCoTemnt##02Rcv returning message loan
DEBUG NDDS_Transport_UDP_receive_rEA:rCoTemnt##02Rcv blocking on 0X549D
DEBUG NDDS_Transport_UDP_receive_rEA:rCoTemnt##02Rcv received 64 bytes from 0X100007F|40284
DEBUG RTINetioReceiver_receiveFast:rCoTemnt##02Rcv received 64 bytes
DEBUG COMMENDActiveFacadeReceiver_loop:rCoTemnt##02Rcv parsing message
DEBUG MIGInterpreter_parse:INFO_DST from 0X1013131,0X4856CAC6
DEBUG MIGInterpreter_parse:ACK from 0X1013131,0X4856CAC6
DEBUG RTIEventActiveGeneratorThread_loop:rCoTemnt####Evt gathering events
DEBUG RTIEventActiveGeneratorThread_loop:rCoTemnt####Evt firing events
DEBUG COMMENDActiveFacadeReceiver_loop:rCoTemnt##02Rcv returning message loan
DEBUG NDDS_Transport_UDP_receive_rEA:rCoTemnt##02Rcv blocking on 0X549D
DEBUG RTIEventActiveGeneratorThread_loop:rCoTemnt####Evt rescheduling events
DEBUG RTIEventActiveGeneratorThread_loop:rCoTemnt####Evt sleeping {00000000,1B5E7420}
DEBUG NDDS_Transport_UDP_receive_rEA:rCoObsnt##00Rcv received 292 bytes from 0X100007F|46993
DEBUG RTINetioReceiver_receiveFast:rCoObsnt##00Rcv received 292 bytes
DEBUG COMMENDActiveFacadeReceiver_loop:rCoObsnt##00Rcv parsing message
DEBUG MIGInterpreter_parse:SECURE_RTPS_PREFIX from 0XDFCD91E1,0X6868BAE7
DEBUG MIGInterpreter_parse:INFO_TS from 0XDFCD91E1,0X6868BAE7
DEBUG MIGInterpreter_parse:DATA from 0XDFCD91E1,0X6868BAE7

At this point, the SensorPublisher_1 application is generating log messages at the DEBUG level as shown in the terminal window, but the debug messages are not being forwarded to Collector Service because the Forwarding verbosity is still at WARNING.

To set the Forwarding verbosity to DEBUG, repeat steps 4 and 5 above in the Log Forwarding Verbosity panel.

After setting both the Collection and Forwarding verbosity to DEBUG, you should see an indication that DEBUG messages are being received for the SensorPublisher_1 application by examining the Total System Logs panel on the Alert Home dashboard.

To get back to the Alert Home dashboard, click Home at the top left.

Note

If you are using the dashboards as an admin user, you will be prompted to save your changes. Select the Discard button; the changes to the dashboard do not need to be saved, since they are set in the application. The save prompt does not appear when logged into the dashboard as a user without admin permissions.

If the Warnings, Errors, and Security panels are not displayed in the Alert Home dashboard, select the Logs dashboard row. Note that there are no additional log messages indicated in the Warnings, Errors, or Security panels since those panels only show the number of Warning-level, Error-level, and Security-related log messages, respectively.

To verify that DEBUG messages are being collected, select the value in the Total System Logs panel to open the Logs dashboard. You will see that the total number of log messages received is increasing dramatically.

You can manipulate the Log Control settings to verify application and dashboard behavior as shown in Table 6.6.

| Collection Verbosity | Forwarding Verbosity | Application DEBUG Log Output | Grafana Connext DEBUG Logs |
|---|---|---|---|
| WARNING | WARNING | NO | NO |
| DEBUG | WARNING | YES | NO |
| WARNING | DEBUG | NO | NO |
| DEBUG | DEBUG | YES | YES |
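Table 6.6 follows from treating the two settings as two successive filters: a message is printed by the application only if it passes the Collection verbosity, and it reaches Collector Service (and Grafana) only if it also passes the Forwarding verbosity. The sketch below models that rule with a hypothetical, reduced set of levels ordered from least to most verbose; it is an illustration, not the Monitoring Library 2.0 API.

```cpp
// Reduced, hypothetical set of levels, ordered from least to most verbose.
enum class Level { Error = 0, Warning = 1, Info = 2, Debug = 3 };

// True if a message of level 'msg' passes a filter set to 'verbosity'.
constexpr bool passes(Level msg, Level verbosity) {
    return static_cast<int>(msg) <= static_cast<int>(verbosity);
}

// The application generates (and prints) the message only if it passes
// the Collection verbosity.
constexpr bool generated(Level msg, Level collection) {
    return passes(msg, collection);
}

// The message reaches Collector Service only if it is generated AND it
// also passes the Forwarding verbosity.
constexpr bool forwarded(Level msg, Level collection, Level forwarding) {
    return generated(msg, collection) && passes(msg, forwarding);
}
```

Evaluating generated and forwarded for a Debug message with each Collection/Forwarding pair reproduces the four rows of the table, including the third row, where a DEBUG Forwarding verbosity has no effect because the message was never generated.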

6.3.7. Change the Metric Configuration

Metrics are the collections of counters and gauges you can use to analyze application behavior. Observability Framework gives you complete control of which metrics to collect, both before and during runtime. Data for your selected metrics is forwarded to Collector Service and made available to third-party backends.

In this example, all application metrics have already been enabled, and Prometheus is used on the backend to store collected metrics.

Note

By default, Observability Framework does not collect metrics for any DDS entities. For details on how to enable the initial metrics to be collected, see Setting Initial Metrics and Log Configuration.

The Observability Dashboards enable you to change the initial metric configuration for specific applications, DDS entities, or DDS entity instances during runtime, without restarting or reconfiguring your applications. You can dynamically change the metric configuration for a specific DDS resource (a single application, Participant, DataReader, DataWriter, or Topic), or for all of the resources of a given type contained by another resource (for example, all DataWriters of an application, or all DataReaders of a Participant).

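This section of the Observability Framework example will walk you through two scenarios:

Changing metrics collected for a single DataWriter

Changing metrics collected for all DataWriters of an application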

6.3.7.1. Resources used in this example

In the Start the Applications section, you created and named three applications using the -n command-line option. Table 6.7 lists the DDS entity names for these applications and the other resources used in this example.

The DDS entity names are specified using the DDS_EntityNameQosPolicy name field for each entity, via XML or programmatically. The entity names are used to build the resource name, which is the unique identifier used in the remote commands to specify each resource.

For more information, see Resource Pattern Definitions.

| Entity Name | Entity Type | Where Configured |
|---|---|---|
| SensorPublisher_1 | Application | On start |
| SensorPublisher_2 | Application | On start |
| SensorSubscriber | Application | On start |
| Temperature DomainParticipant | DomainParticipant | XML |
| TemperatureDataReader | DataReader | XML |
| Sensor with ID=0 | DataWriter | Code |
| Sensor with ID=1 | DataWriter | Code |
| Sensor with ID=2 | DataWriter | Code |

For more information about observable resource names, see Resources. The Observability Framework Dashboards build the resource names and commands based on your configuration.
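The hierarchical names that appear in the dashboards are composed from the entity names in Table 6.7. The sketch below only reproduces the two forms shown in this section, the application resource name (/applications/SensorPublisher_1) and the slash-separated display path (SensorPublisher_1/Temperature DomainParticipant/Sensor with ID=0); the complete resource-name grammar is defined in Resource Pattern Definitions and is not modeled here. The function names are hypothetical.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Application resource name, in the form listed for the three
// example applications (e.g. "/applications/SensorPublisher_1").
std::string application_resource_name(const std::string& app_name) {
    return "/applications/" + app_name;
}

// Display path used in the dashboards: entity names joined with '/',
// from the application down to the endpoint. Empty names are rejected
// because they would make the path ambiguous.
std::string display_path(const std::vector<std::string>& entity_names) {
    std::string path;
    for (const std::string& name : entity_names) {
        assert(!name.empty());
        if (!path.empty()) {
            path += '/';
        }
        path += name;
    }
    return path;
}
```

Passing the application, DomainParticipant, and DataWriter names from Table 6.7 to display_path yields the DataWriter name you select in the DataWriters List dashboard later in this section.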

The following code snippet shows how to set the DataWriter entity name programmatically.

// Create and initialize the DataWriter QoS from the publisher defaults
DDS_DataWriterQos writerQos;
retcode = publisher->get_default_datawriter_qos(writerQos);
if (retcode != DDS_RETCODE_OK) {
    return shutdown_participant(
            participant,
            "get_default_datawriter_qos error",
            EXIT_FAILURE);
}

// Build the sensor name, e.g. "Sensor with ID=0" (snprintf bounds the write)
char sensorName[64];
snprintf(sensorName, sizeof(sensorName), "Sensor with ID=%d", sensor_id);

// Set publication_name.name (EntityName QoS Policy) in the DataWriter QoS.
// The QoS policy owns this string and releases it when the QoS is
// finalized, so assign a heap copy made with DDS_String_dup instead of
// the local buffer.
writerQos.publication_name.name = DDS_String_dup(sensorName);

// Create the DataWriter entity with the updated DataWriter QoS
untyped_writer = publisher->create_datawriter(
        topic,
        writerQos,
        NULL /* listener */,
        DDS_STATUS_MASK_NONE);

Note

In this example, you will access configuration dashboards several times. If you are using the dashboards as an admin user, you will be prompted to save your changes each time you navigate away from a configuration dashboard. When prompted to save, click the Discard button; changes to the dashboard do not need to be saved because they are set in the application. This prompt does not appear when logged into the dashboard as a user without admin permissions.

6.3.7.2. Changing metrics collected for a single DataWriter

Use the Observability Framework Dashboards to disable the Pushed Sample Bytes metric on one DataWriter in the SensorPublisher_1 application.

From the Alert Home dashboard, select the DataWriters panel to open the DataWriters List dashboard.

Select the SensorPublisher_1/Temperature DomainParticipant/Sensor with ID=0 DataWriter to open the Alert DataWriter Status dashboard. You may need to hover over the DataWriter Name field to see the full DataWriter resource name.

Select Configure DataWriter Metrics to open the DataWriter Metrics dashboard.

In the DataWriter Metrics dashboard, note the following:

The fully qualified resource name is displayed at the top.

The Collector Service Control URL and status display below the resource name. If the Collector Service cannot be reached, the status will be NOT AVAILABLE and changes will not be allowed.

Enable is selected for all of the metrics, indicating that they are all currently active.

In the Bandwidth/User Data Writer Traffic section, select Disable for Pushed Sample Bytes. Note that the text color changes to indicate the pending update.

At the bottom of the page, select Configure Metrics.

When prompted to confirm the change, review the updates and then select Confirm.

Verify that Pushed Sample Bytes is now disabled.

To confirm the Pushed Sample Bytes metric is no longer being collected:

At the top left, select Home to return to the Alert Home dashboard.

Select the DataWriters panel to open the DataWriters List dashboard.

Select the SensorPublisher_1/Temperature DomainParticipant/Sensor with ID=0 link to open the Alert DataWriter Status dashboard.

Scroll down to the Pushed Sample Bytes graph to confirm the metric is not being collected.

At the top left, select Home to go back to the Alert Home dashboard.

6.3.7.3. Changing metrics collected for all DataWriters of an application

Disable the Pushed Samples metric on all DataWriters in the SensorPublisher_1 application.

From the Alert Home dashboard, select the Applications panel to open the Application List dashboard.

Select SensorPublisher_1 to open the Alert Application Status dashboard.

Select Configure DataWriter Metrics to open the DataWriter Metrics Multi dashboard.

In the DataWriter Metrics Multi dashboard, note the following:

The Collector Service Control URL and status are displayed. If the Collector Service cannot be reached, the status will be NOT AVAILABLE and changes will not be allowed.

The Current State panel indicates the current configuration of a metric across all DataWriters in the SensorPublisher_1 application.

Enabled. The metric is enabled for all DataWriters in the SensorPublisher_1 application.

Partial. The metric is enabled for at least one, but not all, of the DataWriters in the SensorPublisher_1 application.

Disabled. The metric is disabled for all DataWriters in the SensorPublisher_1 application.

The DataWriters on Application “SensorPublisher_1” panel lists the DataWriters in the SensorPublisher_1 application. Use the links to access the metric control page for the selected entity.

The Configure Metrics for all DataWriters of Application “SensorPublisher_1” panel enables you to change the metrics configuration for all DataWriters of the SensorPublisher_1 application. Changes made in this panel are used to build a command that modifies the current configuration. By default, all metrics are initialized to Don't Change, indicating that the configuration for that metric will not be changed and will remain in the state shown in the Current State panel. Selecting Enable enables the metric for all DataWriters of the SensorPublisher_1 application, regardless of the current state. Selecting Disable disables the metric for all DataWriters of the SensorPublisher_1 application, regardless of the current state.
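The Current State values and the Don't Change, Enable, and Disable choices described above can be modeled as follows. This is a hypothetical sketch for a single metric across the DataWriters of one application, not the dashboard's or Collector Service's implementation; the DataWriter names used to exercise it are the ones from this example.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>

enum class CurrentState { Enabled, Partial, Disabled };
enum class Choice { DontChange, Enable, Disable };

// Per-DataWriter enablement of ONE metric (e.g. Pushed Sample Bytes),
// keyed by DataWriter name.
using MetricFlags = std::map<std::string, bool>;

// Aggregate state, as shown in the Current State panel.
CurrentState current_state(const MetricFlags& flags) {
    assert(!flags.empty());
    std::size_t enabled = 0;
    for (const auto& entry : flags) {
        if (entry.second) {
            ++enabled;
        }
    }
    if (enabled == flags.size()) return CurrentState::Enabled;
    if (enabled == 0) return CurrentState::Disabled;
    return CurrentState::Partial;
}

// Apply one choice to all DataWriters of the application.
void apply_to_all(MetricFlags& flags, Choice choice) {
    if (choice == Choice::DontChange) {
        return;  // keep each DataWriter's current setting
    }
    const bool enable = (choice == Choice::Enable);
    for (auto& entry : flags) {
        entry.second = enable;
    }
}
```

Starting from the state reached in the previous section (Pushed Sample Bytes disabled only on Sensor with ID=0), current_state yields Partial; choosing Disable or Enable then moves every DataWriter to the same setting, while Don't Change leaves the Partial state untouched.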

Verify that the Current State of the Pushed Sample Bytes metric is Partial. This status indicates that the metric is still enabled on the DataWriter SensorPublisher_1/Temperature DomainParticipant/Sensor with ID=1 (the default setting), but disabled on SensorPublisher_1/Temperature DomainParticipant/Sensor with ID=0 (as you configured earlier).

Select Disable for Pushed Samples. If you do not see the Disable command, widen your browser window.

At the bottom of the page, select Configure Metrics.

When prompted to confirm the change, verify the updates and then select Confirm.

Scroll up to verify the Pushed Samples metric is disabled. It may take a few seconds for the dashboard to refresh.

In the DataWriters on Application “SensorPublisher_1” panel, select SensorPublisher_1/Temperature DomainParticipant/Sensor with ID=0 to open the DataWriter Metrics dashboard for the Sensor with ID=0 DataWriter.

Verify that both the Pushed Samples and Pushed Sample Bytes metrics are disabled.

Pushed Samples was disabled for all DataWriter resources of the SensorPublisher_1 application in this section of the example. Pushed Sample Bytes was disabled in the Changing metrics collected for a single DataWriter section.

Select your browser’s Back button to go back to the DataWriter Metrics Multi dashboard for the SensorPublisher_1 application.

In the DataWriters on Application “SensorPublisher_1” panel, select SensorPublisher_1/Temperature DomainParticipant/Sensor with ID=1 to open the DataWriter Metrics dashboard for the Sensor with ID=1 DataWriter.

Verify that only the Pushed Samples metric is disabled.

To re-enable the Pushed Sample Bytes and Pushed Samples metrics, repeat the above steps, selecting Enable instead of Disable.

6.3.8. Close the Applications

When you are done working with the example, enter quit in each running application to shut it down.