4.7.8. ARINC 653 Transport

This section describes the ARINC transport and how to configure it.

The ARINC transport allows a user to use queuing ports defined by the ARINC 653 APEX API for inter-partition and intra-partition communication.

There are two major components to be configured when using this transport:

- The Port Manager, which manages all the ports configured for a partition.

- The ARINC Interface, which is configured per DomainParticipant.

4.7.8.1. ARINC Channels Configuration

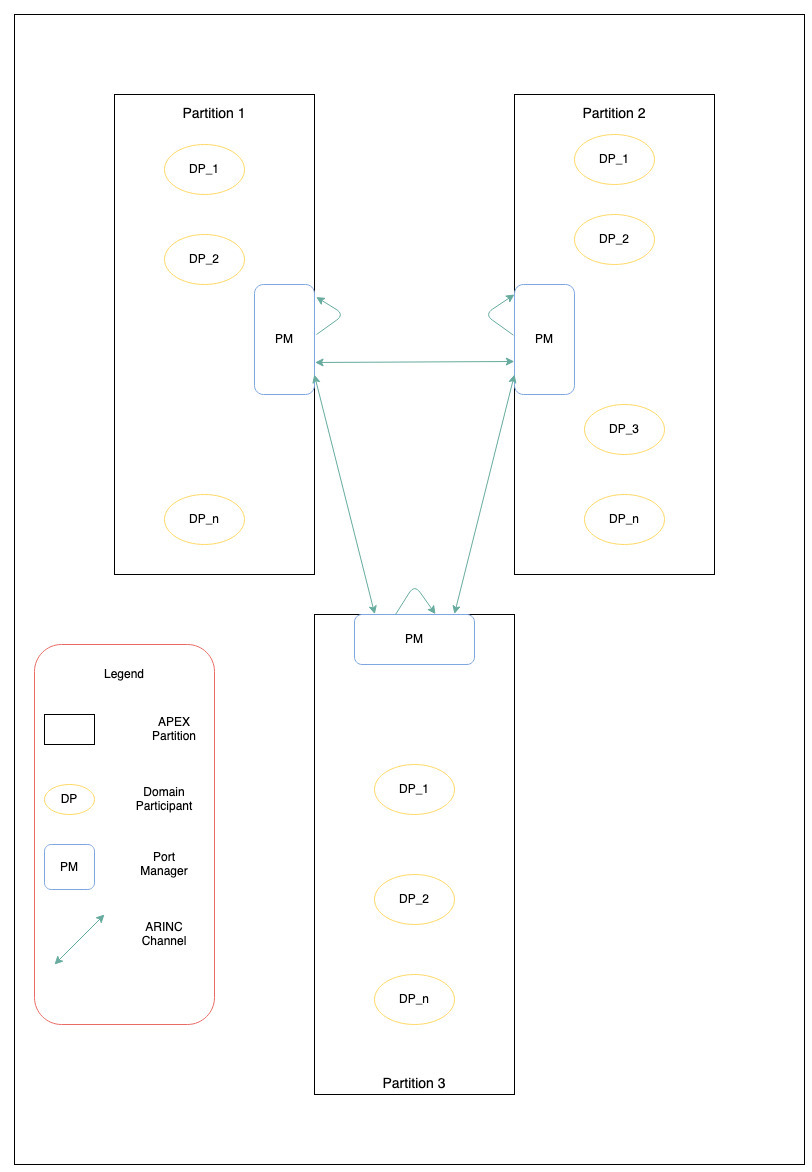

All ARINC 653 ports and channels are statically configured in the module OS (MOS) configuration. The diagram below shows a typical configuration.

To avoid a mesh network between all the DomainParticipants, the ARINC transport shares the APEX queuing ports between all the participants in a partition. This requires the user to configure a mesh network of APEX channels between all of the partitions. Also, note that a channel is required with send and receive ports on the same partition for intra-partition communication.
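As a rough sizing aid, the sketch below counts and numbers the channels that such a mesh implies. It rests on assumptions that are not stated by the transport itself: each ordered pair of partitions gets exactly one unidirectional queuing channel, and each partition gets one additional loopback channel for intra-partition traffic. The function names and the numbering scheme are hypothetical; any unique assignment of channel identifiers would serve equally well.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper: number of unidirectional channels needed so that
 * every partition can send to every other partition and to itself.
 * n * (n - 1) inter-partition channels + n intra-partition channels. */
uint32_t example_mesh_channel_count(uint32_t partition_count)
{
    return partition_count * partition_count;
}

/* Hypothetical numbering scheme: map (sender, receiver) to a unique
 * channel identifier in the range [1, n * n]. Partitions are indexed
 * from 0; sender == receiver denotes the intra-partition channel. */
uint32_t example_mesh_channel_id(uint32_t sender,
                                 uint32_t receiver,
                                 uint32_t partition_count)
{
    assert(sender < partition_count && receiver < partition_count);
    return sender * partition_count + receiver + 1u;
}
```

Under these assumptions, two partitions need four channels: one in each direction between the partitions, plus one loopback channel per partition.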

The properties of ARINC 653 ports must be replicated in the Port Manager properties, which are detailed below.

4.7.8.2. The Port Manager

The Port Manager is configured as a separate RT component per partition (once per DomainParticipantFactory) and manages all the send and receive APEX queuing ports of that partition. All DomainParticipants in the partition share the ports in the Port Manager. The Port Manager is also responsible for sending data to and receiving data from these ports, and for forwarding the data to its destination.

Note: The Port Manager factory must be registered before any ARINC interface is registered/created.

The following table details the properties to be configured in the PortManagerFactoryProperty:

| Property Name | Property Value Description |
|---|---|
| receive_ports | A sequence of ARINC_ReceivePortProperty. Configures all the receive ports used by this partition. |
| send_routes | A sequence of ARINC_RouteProperty. Configures all the send ports and their destinations used by this partition. |
| thread_property | The properties of the ARINC 653 PROCESS created to receive all the messages from different ports. This PROCESS must have the highest priority. |
|  | Maximum number of ARINC interfaces managed by this Port Manager. This number is typically equal to the number of DomainParticipants in the partition. |
| sync_processing | Boolean that configures synchronous processing of received messages. The default is false. Note: when this is set to true, all received messages are processed synchronously in the context of the Port Manager PROCESS (shared between multiple DomainParticipants), all the way up to the DDS Reader. In asynchronous mode, messages are handed off to another ARINC 653 PROCESS inside the ARINC interface. Because the Port Manager is shared between many DomainParticipants, synchronous processing can cause the Port Manager to block multiple participants. |

Below is an example for setting up and registering the port manager:

    struct ARINC_RouteProperty *route_property;
    struct ARINC_PortManagerFactoryProperty *port_mgr_prop = NULL;
    struct ARINC_ReceivePortProperty *receive_port_prop = NULL;
    RT_Registry_T *registry = NULL;

    port_mgr_prop = (struct ARINC_PortManagerFactoryProperty*)malloc(
            sizeof(struct ARINC_PortManagerFactoryProperty));
    *port_mgr_prop = ARINC_PORTMANAGER_FACTORY_PROPERTY_DEFAULT;

    /* Configuring APEX process attributes in OSAPI Thread property */
    port_mgr_prop->thread_property.port_property.parent.deadline = SOFT;
    port_mgr_prop->thread_property.port_property.parent.period = 500000000;
    port_mgr_prop->thread_property.port_property.parent.time_capacity = 500000000;
    port_mgr_prop->thread_property.stack_size = 32000;
    port_mgr_prop->thread_property.priority = 99;

    #define RTI_PORTMGR_RECV_THREAD_NAME "rti.ARINC.pm.rcv_thrd"
    OSAPI_Memory_zero(&port_mgr_prop->thread_property.port_property.parent.name, MAX_NAME_LENGTH);
    OSAPI_Memory_copy(&port_mgr_prop->thread_property.port_property.parent.name,
            RTI_PORTMGR_RECV_THREAD_NAME, OSAPI_String_length(RTI_PORTMGR_RECV_THREAD_NAME));

    port_mgr_prop->sync_processing = RTI_FALSE;

    /* Name of queuing port sending to Connext application partition */
    #define RTI_APP_SEND_PORT_NAME "Part1_send"

    ARINC_RoutePropertySeq_set_maximum(&port_mgr_prop->send_routes, 1);
    ARINC_RoutePropertySeq_set_length(&port_mgr_prop->send_routes, 1);
    route_property = (struct ARINC_RouteProperty*)
            ARINC_RoutePropertySeq_get_reference(&port_mgr_prop->send_routes, 0);

    OSAPI_Memory_copy(route_property->send_port_property.port_name,
            RTI_APP_SEND_PORT_NAME, OSAPI_String_length(RTI_APP_SEND_PORT_NAME));
    route_property->send_port_property.max_message_size = 1024;
    route_property->send_port_property.max_queue_size = 20;
    route_property->channel_identifier = 1;

    /* Name of queuing port receiving from Connext application partition */
    #define RTI_APP_RECV_PORT_NAME "Part1_recv"

    ARINC_ReceivePortPropertySeq_set_maximum(&port_mgr_prop->receive_ports,1);
    ARINC_ReceivePortPropertySeq_set_length(&port_mgr_prop->receive_ports,1);
    receive_port_prop = (struct ARINC_ReceivePortProperty*)
            ARINC_ReceivePortPropertySeq_get_reference(&port_mgr_prop->receive_ports,0);

    receive_port_prop->receive_port_property.max_message_size = 1024;
    receive_port_prop->receive_port_property.max_queue_size = 20;
    receive_port_prop->max_receive_queue_size = 20;
    receive_port_prop->channel_identifier = 1;
    OSAPI_Memory_copy(receive_port_prop->receive_port_property.port_name,
            RTI_APP_RECV_PORT_NAME, OSAPI_String_length(RTI_APP_RECV_PORT_NAME));

    /* Register the Port Manager factory with the registry */
    registry = DDS_DomainParticipantFactory_get_registry(
            DDS_DomainParticipantFactory_get_instance());

    if (!RT_Registry_register(registry,
            NETIO_DEFAULT_PORTMANAGER_NAME,
            ARINC_PortManagerFactory_get_interface(),
            (struct RT_ComponentFactoryProperty*)port_mgr_prop,NULL))
    {
        printf("failed to register port mgr\n");
    }
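The example copies names with OSAPI_Memory_copy and the string length, which neither checks the size of the destination buffer nor copies a terminating NUL. A bounded copy may be a safer pattern. The sketch below uses only the C standard library; the helper name is hypothetical, and the buffer size of 30 is an assumption about the value of MAX_NAME_LENGTH that should be checked against the APEX headers of the target platform.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Assumed size of an APEX name buffer; verify against MAX_NAME_LENGTH
 * in the platform's APEX headers. */
#define EXAMPLE_NAME_BUFFER_SIZE 30

/* Copy src into dst and zero-fill the rest of the buffer.
 * Returns 1 on success. Returns 0 (leaving dst zeroed) if src would not
 * fit together with a terminating NUL, which is a conservative choice. */
int example_copy_port_name(char *dst, const char *src)
{
    size_t len;

    assert(dst != NULL && src != NULL);

    memset(dst, 0, EXAMPLE_NAME_BUFFER_SIZE);
    len = strlen(src);
    if (len >= EXAMPLE_NAME_BUFFER_SIZE) {
        return 0;
    }
    memcpy(dst, src, len);
    return 1;
}
```

A caller would treat a return value of 0 as a configuration error instead of registering a port with a truncated name.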

4.7.8.3. ARINC Interface

The ARINC interface is the NETIO transport interface that is registered with each participant. The transport interface is the lowest layer for a DomainParticipant through which all data is routed. The ARINC interface maintains the internal tables per DomainParticipant to accept and route data to the correct internal DataWriters and DataReaders. When the ARINC interface is created for the first participant it automatically creates the port manager instance.

The ARINC interface can be configured with the following properties in the ARINC_InterfaceFactoryProperty:

| Property Name | Property Value Description |
|---|---|
| recv_thread | The properties of the ARINC 653 PROCESS created to process messages destined for this interface from the Port Manager. |
|  | Size of the internal queue maintained by each ARINC interface: the maximum number of messages the interface can hold while they wait to be processed by the interface's receive PROCESS. |

Note: If sync_processing in the PortManagerFactoryProperty is set to true, the above properties have no effect. No receive thread or internal queue is created for the ARINC interface.

Below is an example for setting up and registering the ARINC interface:

    struct ARINC_InterfaceFactoryProperty *arinc_property = NULL;
    RT_Registry_T *registry = NULL;

    arinc_property = (struct ARINC_InterfaceFactoryProperty*)
            malloc(sizeof(struct ARINC_InterfaceFactoryProperty));
    *arinc_property = ARINC_INTERFACE_FACTORY_PROPERTY_DEFAULT;

    /* APEX process attributes of the interface's receive thread */
    arinc_property->recv_thread.port_property.parent.deadline = SOFT;
    arinc_property->recv_thread.port_property.parent.period = INFINITE_TIME_VALUE;
    arinc_property->recv_thread.port_property.parent.time_capacity = INFINITE_TIME_VALUE;
    arinc_property->recv_thread.stack_size = 16000;
    arinc_property->recv_thread.priority = 97;

    #define RTI_RECV_THREAD_NAME "rti.ARINC.p2.rcv_thrd"
    OSAPI_Memory_zero(&arinc_property->recv_thread.port_property.parent.name, MAX_NAME_LENGTH);
    OSAPI_Memory_copy(&arinc_property->recv_thread.port_property.parent.name,
            RTI_RECV_THREAD_NAME, OSAPI_String_length(RTI_RECV_THREAD_NAME));

    registry = DDS_DomainParticipantFactory_get_registry(
            DDS_DomainParticipantFactory_get_instance());

    if (!RT_Registry_register(registry,
            NETIO_DEFAULT_ARINC_NAME,
            ARINC_InterfaceFactory_get_interface(),
            (struct RT_ComponentFactoryProperty*)arinc_property, NULL))
    {
        printf("failed to register arinc\n");
    }

If an application requires multiple participants in the same partition, it is necessary to register a separate ARINC transport component for each participant. Each component can be registered with a different configuration in order to define a unique configuration for each participant. Below is an example of how to register a second ARINC transport component named ar_2.

    struct ARINC_InterfaceFactoryProperty *arinc_property = NULL;
    RT_Registry_T *registry = NULL;

    arinc_property = (struct ARINC_InterfaceFactoryProperty*)
            malloc(sizeof(struct ARINC_InterfaceFactoryProperty));
    *arinc_property = ARINC_INTERFACE_FACTORY_PROPERTY_DEFAULT;

    /* APEX process attributes of the second interface's receive thread */
    arinc_property->recv_thread.port_property.parent.deadline = SOFT;
    arinc_property->recv_thread.port_property.parent.period = INFINITE_TIME_VALUE;
    arinc_property->recv_thread.port_property.parent.time_capacity = INFINITE_TIME_VALUE;
    arinc_property->recv_thread.stack_size = 16000;
    arinc_property->recv_thread.priority = 96;

    #define RTI_RECV_THREAD_NAME "rti.ARINC.p2.2.rcv_thrd"
    OSAPI_Memory_zero(&arinc_property->recv_thread.port_property.parent.name, MAX_NAME_LENGTH);
    OSAPI_Memory_copy(&arinc_property->recv_thread.port_property.parent.name,
            RTI_RECV_THREAD_NAME, OSAPI_String_length(RTI_RECV_THREAD_NAME));

    registry = DDS_DomainParticipantFactory_get_registry(
            DDS_DomainParticipantFactory_get_instance());

    /* Register under the name "ar_2" instead of the default ARINC name */
    if (!RT_Registry_register(registry,
            "ar_2",
            ARINC_InterfaceFactory_get_interface(),
            (struct RT_ComponentFactoryProperty*)arinc_property, NULL))
    {
        printf("failed to register ar_2\n");
    }
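The Port Manager's receive PROCESS is required to have the highest priority, and the examples in this section use priority 99 for it and priorities 97 and 96 for the two interface receive threads. A configuration check along the following lines could catch a violation early. This is only a sketch: the function name is hypothetical, it assumes that a larger number means a higher priority, and it assumes the requirement is strict (the Port Manager priority must exceed, not merely equal, every interface priority), neither of which is stated explicitly above.

```c
#include <assert.h>
#include <stddef.h>

/* Returns 1 if port_manager_priority is strictly greater than every
 * entry in interface_priorities, 0 otherwise.
 * Assumption: a larger number means a higher priority. */
int example_port_manager_has_top_priority(long port_manager_priority,
                                          const long *interface_priorities,
                                          size_t interface_count)
{
    size_t i;

    assert(interface_priorities != NULL || interface_count == 0);

    for (i = 0; i < interface_count; ++i) {
        if (interface_priorities[i] >= port_manager_priority) {
            return 0;
        }
    }
    return 1;
}
```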

4.7.8.4. Addressing Model

Communication in the ARINC transport takes place over ARINC channels. ARINC channels are logical connections between ARINC Send and Receive ports. These channels have a logical address to facilitate communication configured using the channel identifier in the send and receive port property. These channel identifiers are unsigned 32-bit integers. Each Send port and the complementing Receive port which completes the channel should have the same channel identifier.

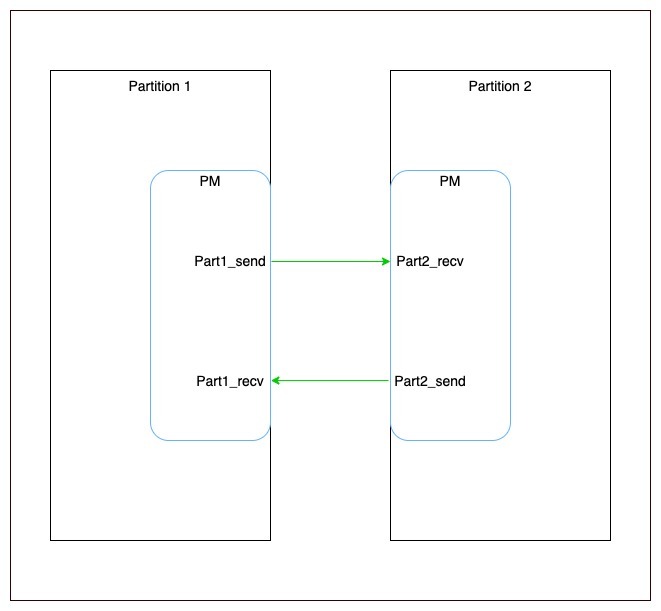

For example, if we have two channels between two partitions as shown in the diagram below:

The snippet below describes their configuration. Notice that channel identifiers match between the send and receive ports of the same channel.

    /* ---- Partition 1 ---- */

    /* Name of queuing port sending to Connext application partition */
    #define RTI_APP_SEND_PORT_NAME "Part1_send"

    ARINC_RoutePropertySeq_set_maximum(&port_mgr_prop->send_routes, 1);
    ARINC_RoutePropertySeq_set_length(&port_mgr_prop->send_routes, 1);
    route_property = (struct ARINC_RouteProperty*)
            ARINC_RoutePropertySeq_get_reference(&port_mgr_prop->send_routes, 0);

    OSAPI_Memory_copy(route_property->send_port_property.port_name,
            RTI_APP_SEND_PORT_NAME, OSAPI_String_length(RTI_APP_SEND_PORT_NAME));
    route_property->send_port_property.max_message_size = 1024;
    route_property->send_port_property.max_queue_size = 20;
    route_property->channel_identifier = 1;   /* Partition 1 sends on channel 1 */

    /* Name of queuing port receiving from Connext application partition */
    #define RTI_APP_RECV_PORT_NAME "Part1_recv"

    ARINC_ReceivePortPropertySeq_set_maximum(&port_mgr_prop->receive_ports,1);
    ARINC_ReceivePortPropertySeq_set_length(&port_mgr_prop->receive_ports,1);
    receive_port_prop = (struct ARINC_ReceivePortProperty*)
            ARINC_ReceivePortPropertySeq_get_reference(&port_mgr_prop->receive_ports,0);

    receive_port_prop->receive_port_property.max_message_size = 1024;
    receive_port_prop->receive_port_property.max_queue_size = 20;
    receive_port_prop->max_receive_queue_size = 20;
    receive_port_prop->channel_identifier = 2;   /* Partition 1 receives on channel 2 */
    OSAPI_Memory_copy(receive_port_prop->receive_port_property.port_name,
            RTI_APP_RECV_PORT_NAME, OSAPI_String_length(RTI_APP_RECV_PORT_NAME));

    /* ---- Partition 2 (a separate application with its own Port Manager) ---- */

    /* Name of queuing port sending to Connext application partition */
    #define RTI_APP_SEND_PORT_NAME "Part2_send"

    ARINC_RoutePropertySeq_set_maximum(&port_mgr_prop->send_routes, 1);
    ARINC_RoutePropertySeq_set_length(&port_mgr_prop->send_routes, 1);
    route_property = (struct ARINC_RouteProperty*)
            ARINC_RoutePropertySeq_get_reference(&port_mgr_prop->send_routes, 0);

    OSAPI_Memory_copy(route_property->send_port_property.port_name,
            RTI_APP_SEND_PORT_NAME, OSAPI_String_length(RTI_APP_SEND_PORT_NAME));
    route_property->send_port_property.max_message_size = 1024;
    route_property->send_port_property.max_queue_size = 20;
    route_property->channel_identifier = 2;   /* Partition 2 sends on channel 2 */

    /* Name of queuing port receiving from Connext application partition */
    #define RTI_APP_RECV_PORT_NAME "Part2_recv"

    ARINC_ReceivePortPropertySeq_set_maximum(&port_mgr_prop->receive_ports,1);
    ARINC_ReceivePortPropertySeq_set_length(&port_mgr_prop->receive_ports,1);
    receive_port_prop = (struct ARINC_ReceivePortProperty*)
            ARINC_ReceivePortPropertySeq_get_reference(&port_mgr_prop->receive_ports,0);

    receive_port_prop->receive_port_property.max_message_size = 1024;
    receive_port_prop->receive_port_property.max_queue_size = 20;
    receive_port_prop->max_receive_queue_size = 20;
    receive_port_prop->channel_identifier = 1;   /* Partition 2 receives on channel 1 */
    OSAPI_Memory_copy(receive_port_prop->receive_port_property.port_name,
            RTI_APP_RECV_PORT_NAME, OSAPI_String_length(RTI_APP_RECV_PORT_NAME));
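The matching rule can be checked mechanically before deployment. The sketch below is one possible way to do so and is not part of the transport API: it takes the channel identifiers that one partition sends on and the identifiers that the peer partition receives on, as plain arrays, and reports whether every send channel has a matching receive channel. The function name and the array representation are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Returns 1 if every channel identifier in send_ids also appears in
 * recv_ids, 0 otherwise. An empty send list is trivially paired. */
int example_channels_paired(const uint32_t *send_ids, size_t send_count,
                            const uint32_t *recv_ids, size_t recv_count)
{
    size_t i;
    size_t j;

    assert(send_ids != NULL || send_count == 0);
    assert(recv_ids != NULL || recv_count == 0);

    for (i = 0; i < send_count; ++i) {
        int found = 0;
        for (j = 0; j < recv_count; ++j) {
            if (send_ids[i] == recv_ids[j]) {
                found = 1;
                break;
            }
        }
        if (!found) {
            return 0;
        }
    }
    return 1;
}
```

With the values from the configuration above, Partition 1 sends on channel 1 and Partition 2 receives on channel 1, so the check passes in that direction; comparing Partition 1's send list against its own receive list (channel 2) would fail.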

Now the channel identifier can be used as an address to send messages over this channel. For example, Partition 1 may set its initial peers as:

    struct DDS_DomainParticipantQos dp_qos = DDS_DomainParticipantQos_INITIALIZER;

    DDS_StringSeq_set_maximum(&dp_qos.discovery.initial_peers,1);
    DDS_StringSeq_set_length(&dp_qos.discovery.initial_peers,1);

    /* Send discovery data on channel 1 */
    *DDS_StringSeq_get_reference(&dp_qos.discovery.initial_peers,0) =
            DDS_String_dup("_arinc://1");

Partition 1 may also set the enabled transports to receive data as follows:

    struct DDS_DomainParticipantQos dp_qos = DDS_DomainParticipantQos_INITIALIZER;

    /* Receive user-data traffic on channel number 2. */
    DDS_StringSeq_set_maximum(&dp_qos.user_traffic.enabled_transports,1);
    DDS_StringSeq_set_length(&dp_qos.user_traffic.enabled_transports,1);
    *DDS_StringSeq_get_reference(&dp_qos.user_traffic.enabled_transports,0) =
            DDS_String_dup("_arinc://2");

    /* Receive discovery traffic on channel number 2. */
    DDS_StringSeq_set_maximum(&dp_qos.discovery.enabled_transports,1);
    DDS_StringSeq_set_length(&dp_qos.discovery.enabled_transports,1);
    *DDS_StringSeq_get_reference(&dp_qos.discovery.enabled_transports,0) =
            DDS_String_dup("_arinc://2");

If there is a second participant in Partition 1, it must use a different ARINC interface configuration by enabling a separate ARINC transport. Such a transport must first be registered, as described in ARINC Interface. Below is an example of how to configure the QoS of a second participant in the same partition to use the ar_2 ARINC transport component:

    struct DDS_DomainParticipantQos dp2_qos = DDS_DomainParticipantQos_INITIALIZER;

    DDS_StringSeq_set_maximum(&dp2_qos.discovery.initial_peers,1);
    DDS_StringSeq_set_length(&dp2_qos.discovery.initial_peers,1);

    /* Send discovery data on channel 3 */
    *DDS_StringSeq_get_reference(&dp2_qos.discovery.initial_peers,0) =
            DDS_String_dup("ar_2://3");

    /* Receive user-data traffic on channel number 4. */
    DDS_StringSeq_set_maximum(&dp2_qos.user_traffic.enabled_transports,1);
    DDS_StringSeq_set_length(&dp2_qos.user_traffic.enabled_transports,1);
    *DDS_StringSeq_get_reference(&dp2_qos.user_traffic.enabled_transports,0) =
            DDS_String_dup("ar_2://4");

    /* Receive discovery traffic on channel number 4. */
    DDS_StringSeq_set_maximum(&dp2_qos.discovery.enabled_transports,1);
    DDS_StringSeq_set_length(&dp2_qos.discovery.enabled_transports,1);
    *DDS_StringSeq_get_reference(&dp2_qos.discovery.enabled_transports,0) =
            DDS_String_dup("ar_2://4");

Unlike some other transports, such as UDP, it is necessary to specify the address or addresses explicitly in the enabled transports lists. If no address is provided with the ARINC interface name, then creation of the entity will fail. This explicit configuration prevents unexpected behavior from unintentionally listening on an address.
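To make the required shape of these strings concrete, the following sketch splits a string of the form `<interface name>://<channel identifier>` and rejects one that carries no address. It is an illustration written with the C standard library only, not the parser used by the transport; the function name is hypothetical, and it assumes the channel identifier is written in decimal and must fit in an unsigned 32-bit integer.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical parser for "<interface>://<channel>".
 * On success returns 1, stores the length of the interface name in
 * *name_len_out and the channel identifier in *channel_out.
 * Returns 0 if the name or the address is missing or malformed. */
int example_parse_locator(const char *locator,
                          size_t *name_len_out,
                          uint32_t *channel_out)
{
    const char *sep;
    const char *addr;
    char *end = NULL;
    unsigned long value;

    assert(locator != NULL && name_len_out != NULL && channel_out != NULL);

    sep = strstr(locator, "://");
    if (sep == NULL || sep == locator) {
        return 0;                       /* no separator, or empty name */
    }
    addr = sep + 3;
    if (*addr < '0' || *addr > '9') {
        return 0;                       /* missing or non-numeric address */
    }
    errno = 0;
    value = strtoul(addr, &end, 10);
    if (errno == ERANGE || *end != '\0' || value > 0xFFFFFFFFul) {
        return 0;                       /* overflow or trailing characters */
    }
    *name_len_out = (size_t)(sep - locator);
    *channel_out = (uint32_t)value;
    return 1;
}
```

Under these assumptions, `_arinc://2` yields a six-character interface name and channel 2, while a bare `_arinc` or `ar_2://` is rejected.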

4.7.8.4.1. Addressing Misconfiguration

The ARINC locators specified in the initial peers and enabled transports lists are automatically verified to correspond to a channel configured in the Port Manager. If a participant’s initial peers list contains an ARINC locator that does not match a send channel, then creation of the participant will fail. If an ARINC locator in the enabled transports of a Domain Participant, Data Writer, or Data Reader does not match a receive channel, then creation of that entity will fail.

However, Micro does not consider reachability as a factor in determining matching. It is possible for an ARINC locator to be received during the discovery process that corresponds to an address which is not reachable by that participant. This locator will be ignored because it is expected that unreachable locators will sometimes be received. In that case, a Data Writer and Data Reader can match even if there is no channel on which they can communicate. This situation is considered a misconfiguration of the application.

4.7.8.5. Threading Model

The port manager creates an ARINC 653 process to receive messages from the ports. This process can work in two modes, selected by the sync_processing property.

When sync_processing is true, every message received is routed to the correct interface and processed in the context of the port manager’s receive process. While a message is being processed, no messages are received from other ports, so other participants are blocked. The ARINC interface does not create any process of its own in this mode.

When sync_processing is false (the default), the port manager’s process adds messages to an internal queue for the destination ARINC interface. The ARINC interface process then processes them asynchronously. This ARINC interface process and the queue size are configured using the ARINC_InterfaceFactoryProperty. Asynchronous processing keeps the port manager process from blocking other participants, at the cost of an additional process and queue per ARINC interface.
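The hand-off in asynchronous mode can be pictured as a bounded queue with one producer and one consumer. The sketch below models only the queue itself and is not the transport's implementation: all names are hypothetical, messages are represented as plain integers, the capacity of 4 is arbitrary, and the synchronization between the two ARINC 653 processes is deliberately left out. What the transport does when an interface queue is full is not described in this section, so the sketch simply reports the condition to the caller.

```c
#include <assert.h>
#include <stddef.h>

/* Stand-in for the per-interface queue size; the value is arbitrary. */
#define EXAMPLE_QUEUE_CAPACITY 4

struct example_queue {
    int messages[EXAMPLE_QUEUE_CAPACITY]; /* message handles, int for brevity */
    size_t head;                          /* index of the oldest message */
    size_t count;                         /* number of messages queued */
};

/* Producer side (the port manager process in this model).
 * Returns 1 if the message was queued, 0 if the queue is full. */
int example_queue_push(struct example_queue *q, int message)
{
    assert(q != NULL);
    if (q->count == EXAMPLE_QUEUE_CAPACITY) {
        return 0;
    }
    q->messages[(q->head + q->count) % EXAMPLE_QUEUE_CAPACITY] = message;
    q->count++;
    return 1;
}

/* Consumer side (the interface process in this model).
 * Returns 1 and stores the oldest message, or 0 if the queue is empty. */
int example_queue_pop(struct example_queue *q, int *message_out)
{
    assert(q != NULL && message_out != NULL);
    if (q->count == 0) {
        return 0;
    }
    *message_out = q->messages[q->head];
    q->head = (q->head + 1) % EXAMPLE_QUEUE_CAPACITY;
    q->count--;
    return 1;
}
```

In this model the producer returns as soon as the message is stored, which is why a slow consumer in one interface does not delay delivery to the others until its own queue fills up.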