10. Design Considerations

Enabling the Security Plugins will have an impact on your system. When you enable the Security Plugins, there are some processes that change (like Discovery) or that are new (like encrypting messages). Some of these processes involve cryptographic operations that are computationally expensive (increased CPU and memory usage); protecting data requires sending additional information on the wire (increased network traffic). This can affect both the performance and scalability of your system.

Not every system is affected the same way. How much your system is affected will depend on the system architecture and requirements, as well as on its configuration. This chapter discusses some of the considerations that you should take into account when designing your system.

10.1. Factors Affecting Performance and Scalability in General

There are some factors that have an impact on the performance and scalability of your system at all times.

Security does have a cost. There are additional processes that impact CPU and memory usage, such as validating certificates and permissions, exchanging keys, encrypting samples, etc. Take into account that the memory representation of a Secure DomainParticipant and a Secure Endpoint is larger than for their unsecure counterparts.

10.1.1. Hardware

Some processors support cryptographic hardware acceleration. Alternatively, your processor may simply be fast enough that the additional overhead is not a concern.

Note

The OpenSSL libraries that we provide support hardware acceleration.

10.1.2. Algorithms Used

By default, the Cryptography Plugin uses AES-256 for symmetric encryption and decryption. This means that a 256-bit key will be used as an input for the AES algorithm. You can choose the AES-128 or the (deprecated) AES-192 encryption option instead by configuring the dds.sec.crypto.symmetric_cipher_algorithm property (see Table 6.9). Note that AES always performs the encryption and decryption operations in blocks of 128 bits.

Using different encryption algorithms or key lengths will impact performance since CPU usage and the amount of data sent for key exchange may increase.

10.1.3. Maximum Transmission Unit (MTU)

You can control the maximum size of the RTPS messages sent on the network by adjusting the message_size_max property in your transport configuration (see NDDS_Transport_Property_t::message_size_max in the RTI Connext DDS Traditional C++ API). By setting this property to a value smaller than the maximum payload that fits in the MTU (usually 1472 bytes for UDP over Ethernet), you can avoid IP fragmentation. Instead, Connext will fragment RTPS Submessages before sending them to the transport.

Sending samples larger than the message_size_max will result in DDS fragmentation, which requires Asynchronous Publishing if the Topic is reliable (see ASYNCHRONOUS_PUBLISHER QosPolicy (DDS Extension) in the Core Libraries User’s Manual). In scenarios with a hard limit on the transport maximum message size, the Secure Key Exchange Builtin Topic may require fragmentation, which requires enabling Asynchronous Publishing since it is a reliable Topic (see Section 6.8.2).

However, if you want to use Origin Authentication Protection, RTPS messages and/or submessages will include a set of Receiver-Specific MACs. The maximum number of Receiver-Specific MACs that will be included in a message is determined by the cryptography.max_receiver_specific_macs property (see Table 6.8). Receiver-Specific MACs cannot be fragmented into different submessages. Therefore, the absolute minimum message_size_max you can use (even with Asynchronous Publishing) will depend on the cryptography.max_receiver_specific_macs value.

Note that each Receiver-Specific MAC will be 20 bytes in size. Therefore, to avoid IP fragmentation, you have to limit the cryptography.max_receiver_specific_macs property to a maximum of

\[\left\lfloor \frac{\mathtt{message\_size\_max} - \mathtt{usable\_rtps\_size}}{20} \right\rfloor\]

where \(\mathtt{usable\_rtps\_size}\) is the size of all packet bytes besides the MACs (including payload, RTPS headers and other security overhead). Generally, \(\mathtt{usable\_rtps\_size}\) should be at least \(768 \mathrm{B}\) to \(1 \mathrm{kB}\).
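The limit can be worked out with a short calculation. The sketch below assumes that the bound is simply the space left in a message after usable_rtps_size, divided by the 20-byte MAC size. The function name and the example numbers are hypothetical and are not part of any Connext API.

```python
RECEIVER_SPECIFIC_MAC_SIZE = 20  # bytes per Receiver-Specific MAC, as stated in the text


def max_receiver_specific_macs(message_size_max: int, usable_rtps_size: int) -> int:
    """Return the largest MAC count that fits next to usable_rtps_size bytes.

    Assumption: the whole RTPS message must fit in message_size_max bytes,
    and message_size_max is already at or below the MTU payload.
    """
    if usable_rtps_size > message_size_max:
        raise ValueError("usable_rtps_size cannot exceed message_size_max")
    return (message_size_max - usable_rtps_size) // RECEIVER_SPECIFIC_MAC_SIZE


if __name__ == "__main__":
    # Hypothetical values: 1400-byte messages with 1024 bytes kept for RTPS content.
    print(max_receiver_specific_macs(1400, 1024))  # prints 18
```

With these example values, 376 bytes remain for MACs, so at most 18 Receiver-Specific MACs fit without exceeding message_size_max.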

10.1.4. Using OpenSSL Providers

The Security Plugins have support for OpenSSL providers (see Support for OpenSSL Providers). These providers are called by OpenSSL and provide alternative functions to the OpenSSL builtin security operations. Using different cryptographic functions may affect the performance of the Security Plugins. For instance, the functions may perform optimized cryptographic operations, or use algorithms that have a reduced computational cost. On the other hand, your OpenSSL provider may implement more complex algorithms that require more CPU usage.

OpenSSL providers may interact with hardware acceleration for common cryptographic operations, depending on the provider support.

10.1.5. Security Plugins File Tracking

The Security Plugins offer a file tracking capability that periodically checks some of the Security Plugins’ mutable files for changes and automatically updates the Security Plugins’ internal state. This functionality uses a dedicated thread and internal infrastructure that are allocated at Security Plugins creation and remain active until Security Plugins finalization. The file tracker and its creation can be controlled via the files_poll_interval property.

The file tracker is created and enabled if the property value is greater than 0, regardless of whether there are any files to be tracked. If the property is 0, the file tracker is not created, and the Security Plugins will consume slightly fewer resources. By default, files_poll_interval equals 5, and the file tracker is created. If resource consumption is a concern and file tracking is not required, consider setting files_poll_interval to 0. Note that when file tracking is disabled, you can still programmatically trigger reloading of the affected files through the DomainParticipant set_qos API.

Attention

Some of the RTI Infrastructure Services depend on the Security Plugins file tracking and disabling it may limit system functionality.

10.2. Security Plugins’ Impact on Scalability at Startup

When a DDS system starts up, DomainParticipants discover each other through the Simple Discovery Protocol (see Discovery in the RTI Core Libraries User’s Manual). The scale of your system has a fundamental role in the time that it will take until it reaches a steady state where every DomainParticipant knows all its peer DomainParticipants and user data flows through every part of the system where it is needed.

10.2.1. Impact of the Security Plugins on the Discovery Process

When you enable the Security Plugins, your DDS applications will require some extra steps to create the DomainParticipants and perform the DDS Discovery process, as depicted in Figure 10.1. These steps make sure that the security rules defined in your Governance Document apply, that DomainParticipants are mutually authenticated, and that they only have access to the data they are allowed to access (as defined in the Permissions Documents), to cite a few examples.

Let’s assume that we are starting a DDS application that contains a local DomainParticipant (P1) that will communicate with an existing remote DomainParticipant (P2). Let’s analyze the differences in Discovery (and application startup, in general) when we enable security.

Figure 10.1 Changes in the Discovery Process When the Security Plugins Are Enabled

10.2.1.1. Differences in DomainParticipant Creation

The first thing your application needs to communicate using DDS is a DomainParticipant. The local DomainParticipant (P1) is created from a certain configuration (default QoS or a selected profile).

When you enable security, you need to specify additional settings to make your DomainParticipant load the Security Plugins (see Building and Running Security Plugins-Based Applications). In addition, your DomainParticipant will load the security artifacts that you specify in the properties. These artifacts include the Governance Document, Permissions Document, Permissions CA, Identity CA, Identity Certificate and Private Key (see Using Security Plugins). If any of these artifacts are missing, or if they cannot be verified/don’t match (e.g., the Identity Certificate is not signed by the provided Identity CA), the DomainParticipant creation fails at this first stage.

Your DomainParticipant also needs permissions. We check whether it has a valid grant (matching the subject name in its Identity Certificate) that is valid for the current date and time. If it doesn’t, the creation of the DomainParticipant fails immediately. Next, local participant-level permissions are checked to verify whether P1 has permission to join the domain. If P1 is not allowed to join the domain, the DomainParticipant creation fails. For further details, refer to How the Permissions are Interpreted.

10.2.1.2. Differences in Endpoints Creation

The local DomainParticipant (P1) will have DataWriters and DataReaders for both builtin topics (such as the participant discovery Topic) and user-defined topics (user Endpoints for data exchange). In addition, we need to allocate resources for discovering remote DomainParticipants and Endpoints.

When security is enabled, Permissions Documents establish what DomainParticipants and Endpoints are allowed to do. When you enable Endpoint-level permissions checking for a certain Topic, P1 needs to verify whether it is allowed to publish/subscribe to that Topic before creating a DataWriter/DataReader for it (see Topic-level rules). If it is not allowed to do so, the endpoint creation fails.

10.2.1.3. Differences in Remote DomainParticipants Discovery

In the Participant Discovery phase, your local DomainParticipant learns about other DomainParticipants in the domain, and vice-versa. As part of this process, the DomainParticipants exchange some details, including GUIDs, transport locators and DomainParticipantQos. These details only involve DomainParticipant information (they do not involve Endpoints). For further information, see Simple Participant Discovery in the Core Libraries User’s Manual.

Unless Pre-Shared Key Protection is enabled (see Pre-Shared Key Protection), the DomainParticipant discovery data is exchanged unsecurely. In other words, the bootstrapping part of discovery happens without protection; it only contains information about the DomainParticipant that does not require protection and does not involve Endpoints. Participant declaration messages will include additional properties, as required by the OMG DDS Security specification:

PID_IDENTITY_TOKEN contains summary information on the identity of a DomainParticipant. The Security Plugins use the presence or absence of this token to determine whether or not the remote DomainParticipant has security enabled.

PID_PERMISSIONS_TOKEN contains summary information on the permissions for a DomainParticipant. The Security Plugins currently do not use this token.

PID_PARTICIPANT_SECURITY_INFO validates Governance compatibility. If local and remote Governance Documents are not compatible, P1 will reject matching P2. For further information, see Elements of a Security Plugins System.

PID_DIGITAL_SIGNATURES_ALGO contains summary information on the digital signature algorithms used and supported by the DomainParticipant. If local and remote algorithms are not compatible, P1 will reject matching P2. You can read about what makes the remote and local algorithms compatible in Discovery of a Remote Secure Entity.

PID_KEY_ESTABLISHMENT_ALGO contains summary information on the key establishment algorithms used and supported by the DomainParticipant. If local and remote algorithms are not compatible, P1 will reject matching P2.

PID_PARTICIPANT_SYMMETRIC_CIPHER_ALGO contains summary information on the symmetric cipher algorithms used and supported by the DomainParticipant. If local and remote algorithms are not compatible, P1 will reject matching P2.

When P1 and P2 know about each other, they proceed to mutual authentication. As part of the Authentication process (1 in Figure 10.1), P1 and P2 will exchange some artifacts by sending them on the wire as part of the handshake. These artifacts include the Identity Certificates and Permission Files. In the Authentication process, P1 verifies P2’s Identity Certificate against the Identity CA. If this verification fails, P1 will reject communicating with P2 (see Identity Certificate Validation). This mutual authentication process happens in both directions.

Next, P1 checks that P2 has the necessary permissions and that they are valid (2 in Figure 10.1). If P2 doesn’t have a grant matching the subject name in its Identity Certificate, P1 will reject communicating with P2. If P2’s participant-level permissions are enabled (enable_join_access_control = TRUE), but P2 does not have permission to join the domain, P1 will also reject communicating with P2 (see enable_join_access_control (within a domain_rule)). Permissions checking also happens in both directions.

As a result of the handshake, the two involved DomainParticipants will derive a Shared Key and establish a Secure Key Exchange Channel. The Cryptography plugin will use that channel to securely exchange the symmetric keys that the Endpoints need to perform secure communication. After the handshake concludes with successful authentication and Shared Key derivation, symmetric cryptography is used to exchange other keys (see Secure Key Exchange).

10.2.1.4. Differences in Remote Endpoints Discovery

During the Endpoint Discovery phase, your local DomainParticipant learns about the Endpoints of the remote DomainParticipants, and vice-versa. To do this, your DomainParticipant sends publication/subscription declarations to inform discovered DomainParticipants about the local DataReaders and DataWriters and their properties (GUID, QoS, etc.). For details, see Simple Participant Discovery in the Core Libraries User’s Manual.

These declarations are exchanged until each DomainParticipant has a complete database of information about the DomainParticipants in its peers list and their entities.

When a remote DataWriter/DataReader is discovered, Connext determines if the local application has a matching DataReader/DataWriter. A ‘match’ between the local and remote entities occurs only if the DataReader and DataWriter have the same Topic, same data type, compatible QosPolicies, and compatible security algorithms.

When security is enabled, P1 and P2 use the Secure Key Exchange Channel to exchange the Key Material for the Builtin Secure Endpoints (3 in Figure 10.1), including the Publication Secure DataWriter and DataReader used for secure endpoint discovery (see Secure Key Exchange). Note that secure endpoint discovery only applies to topics that enable it (see enable_discovery_protection (topic_rule)).

At this point, P1 performs endpoint discovery. For topics that enable Discovery Protection, endpoint declarations are sent over the Builtin Secure Discovery Topics. Otherwise, the unsecure versions of the Builtin Discovery Topics are used. In all cases, the Security Plugins check Governance compatibility by means of the PID_ENDPOINT_SECURITY_INFO and PID_ENDPOINT_SYMMETRIC_CIPHER_ALGO parameters, which describe the endpoint security attributes and help perform matching decisions (see Governance Compatibility Validation).

Then we will check the endpoint permissions, this time for the writers and the readers.

Once your DomainParticipants go through all these steps, they will exchange keys for each of their Secure Entities (see Impact of Key Exchange on Scalability at Startup). After this, your DomainParticipants will enter the steady state where they will exchange user samples (see Security Plugins Impact on Scalability and Performance During Steady State).

10.2.1.5. Participant Discovery Information Validation and Updates

Initial Participant Discovery happens unprotected if Pre-Shared Key Protection is disabled (see Pre-Shared Key Protection). However, Participant Discovery information is validated as part of the authentication process. This ensures that the right information is received during discovery, and that Participant Declaration Messages have not been injected by a malicious entity. In other words, Participant Discovery is mainly for bootstrapping, but then as part of authentication, we make sure that the discovery information that we have received is valid.

After authentication (and bootstrapping), any further updates that need to be propagated through participant discovery are sent over the secure version of the participant discovery Topic.

10.2.2. Impact of the Secure Versions of Builtin Endpoints

Secure versions of the Builtin Endpoints are always created when you enable the Security Plugins, regardless of the protection kind specified in the Governance Document. This generates additional traffic at startup in the form of HEARTBEATS and ACKS when a remote DomainParticipant is discovered.

10.2.3. Impact of Key Exchange on Scalability at Startup

After Authentication (and key derivation) is complete, symmetric cryptography is used to exchange other keys. More concretely, two mutually authenticated DomainParticipants will use their exclusive secure key exchange channel (DCPSParticipantVolatileMessageSecure) to set the symmetric keys for both builtin and regular Endpoints.

When you enable security for a Topic, every DataWriter/DataReader for that Topic will have its own Sender Key that needs to be sent to every matching DataReader/DataWriter. These keys need to be generated and then exchanged between every pair of DomainParticipants publishing or subscribing to the secured Topic, which will have an impact on the network traffic and CPU usage. Note that this applies to both builtin and user-defined topics. For further details, refer to Lifecycle of Secure Entities.

The following subsections describe the Secure Entities whose Key Material must be exchanged by your DomainParticipants.

10.2.3.1. Participant Key Material

If you enable RTPS Protection by setting rtps_protection_kind to a value other than NONE in your Governance Document, your DomainParticipants will behave as Secure Entities and they will have their own Key Material. DomainParticipants secured this way will need to exchange this Key Material in order to perform any secure communication.

10.2.3.2. Builtin Secure Endpoints

You can choose to use the secure version of the builtin Endpoints to protect discovery and liveliness information for each of your topics. For example, you can use the Secure Endpoints for discovery by setting enable_discovery_protection to TRUE in one of your topics. In this case, you will want to set the discovery_protection_kind to a value other than NONE; otherwise, you will not add any security. Note that enabling discovery protection for a Topic when the discovery protection kind is NONE is still a valid configuration that you can use for debugging purposes.

If discovery_protection_kind is a value other than NONE, your DomainParticipants will need to generate and exchange the Key Material for the secure version of the Builtin Discovery Endpoints, causing additional network usage. More concretely, the Cryptography Plugin will generate and exchange keys for the following Endpoints:

DCPSParticipantSecure DataWriter

DCPSParticipantSecure DataReader

DCPSPublicationSecure DataWriter

DCPSPublicationSecure DataReader

DCPSSubscriptionSecure DataWriter

DCPSSubscriptionSecure DataReader

In addition, your DomainParticipants will need to apply the corresponding cryptographic transformation to discovery samples using Submessage Protection. This comes with an additional computational cost and increased network usage (see Submessage Protection).

The same applies to Liveliness Protection. When you set liveliness_protection_kind to a value other than NONE, your DomainParticipants will exchange keys for the following Endpoints:

DCPSParticipantMessageSecure DataWriter

DCPSParticipantMessageSecure DataReader

Note that discovery_protection_kind also controls the level of protection that Builtin Secure Service Request Endpoints will have. Therefore, if discovery_protection_kind is a value other than NONE, keys for the following Endpoints may need to be exchanged:

Service Request Secure DataWriter

Service Request Secure DataReader

Note

The creation of the Builtin Secure Discovery Endpoints is fully controlled by discovery_protection_kind and does not depend on the value of enable_discovery_protection on particular topics. Similarly, the Builtin Secure Liveliness Endpoints’ creation only depends on liveliness_protection_kind, not on the value of enable_liveliness_protection on particular topics.

Finally, the Builtin Secure Logging Endpoints will apply SIGN [1] protection to RTPS Submessages for logged security events. Note that the Builtin Secure Logging Endpoints will only be created when you set the SECURITY_TOPIC mask in the logging.mode_mask property [2] (see Table 7.2). In this case, keys for the Builtin Logging DataWriter and matching DataReaders will need to be exchanged.

For further details on the Builtin Secure Endpoints, refer to Security Builtin Topics.

10.2.3.3. User-Defined Secure Endpoints

You can choose to protect each of the user-defined topics separately. The impact of exchanging keys for your user-defined secure endpoints will be directly related to the number of Endpoints in your participants.

By default, Submessage Protection and Serialized Data Protection use the same Key Material. Therefore, each of your topics will have a single key, regardless of whether you protect your Topic with Submessage Protection, Serialized Data Protection, or both.

You can choose not to use the same keys for Serialized Data and Submessage Protections by setting the cryptography.share_key_for_metadata_and_data_protection property to FALSE, as specified in Table 6.8. Then your participants will need to generate and exchange two different keys for each of your Secure DataWriters.

| Without Origin Authentication Protection | With Origin Authentication Protection (requires sending an additional receiver-specific key) |
|---|---|
| 364 Bytes | 380 Bytes |

You can estimate the number of keys your DomainParticipants will put on the network for your user-defined endpoints. For each Topic, multiply the number of DataWriters by the number of compatible DataReaders (i.e., matching DataReaders with compatible QoS). The sum will tell you how many keys will be exchanged when new matches are discovered.
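As a minimal sketch of this estimate, the function below sums the writer-by-reader products over all protected topics. The function name, the topic names, and the counts are hypothetical; the sketch ignores the extra keys for Topic Queries and Multi-channel DataWriters and assumes the default of one shared key per Endpoint.

```python
from typing import Mapping, Tuple


def estimate_keys_exchanged(topics: Mapping[str, Tuple[int, int]]) -> int:
    """Sum DataWriters x compatible DataReaders over every protected Topic.

    `topics` maps a Topic name to (number of DataWriters,
    number of compatible DataReaders).
    """
    return sum(writers * readers for writers, readers in topics.values())


if __name__ == "__main__":
    # Hypothetical system: two protected topics.
    system = {
        "Telemetry": (2, 10),  # 2 DataWriters, 10 compatible DataReaders
        "Commands": (1, 3),    # 1 DataWriter, 3 compatible DataReaders
    }
    print(estimate_keys_exchanged(system))  # prints 23
```

In this example, Telemetry contributes 20 keys and Commands contributes 3, so the fan-out of the busiest Topic dominates the estimate.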

Note that if a DomainParticipant has enabled Key Material renewal (see Limiting the Usage of Specific Key Material) and calls banish_ignored_participants, then the DomainParticipant will send only a Key Revision to other DomainParticipants; it will not send new keys for each individual DataWriter-DataReader match.

In addition, when you use Topic Queries (see Topic Queries in the Core Libraries User’s Manual), one additional key is exchanged per matching DataWriter/DataReader. This is required because internally, Connext uses two GUIDs for each DataWriter. The same mechanism is put in place for Multi-channel DataWriters (see Multi-channel DataWriters in the Core Libraries User’s Manual). In this latter case, \(N-1\) additional keys are exchanged, where \(N\) is the number of channels.

10.2.3.4. Receiver-Specific Keys

If you enable Origin Authentication Protection for a Topic, each Secure Entity for that Topic will have its own receiver-specific key. The Key Material for DataWriters and DataReaders of that Topic will therefore be around \(1.5\times\) the size, since it will include a Sender Key and a Receiver-specific Key. As a result, topics protected with Origin Authentication Protection will produce around \(1.5\times\) the traffic during the key exchange process.

Note that you can apply Origin Authentication Protection to both builtin and user-defined topics by tuning the corresponding Governance Rules (see Security Protections Applied by DDS Entities).

10.2.4. Factors Impacting Performance and Scalability at Startup

The previous sections described the additional processes that happen when security is enabled. This section lists the factors that will impact scalability at startup.

10.2.4.1. Number of Participants and Endpoints in Your Secure Domain

The number of participants in your Secure Domain will certainly have an impact on scalability. The reason is that discovery and mutual authentication occur between each pair of DomainParticipants. This is not much different from the unsecure scenario. However, the Security Plugins add Discovery time, both by adding additional operations and sending additional messages and files. In general, you can expect \(3\times\) the traffic you would have without security for each pair of DomainParticipants.

Also, since every DomainParticipant needs to authenticate with every other DomainParticipant in your Secure Domain, the traffic will increase as the number of participants grows. If the discovery traffic for each pair of DomainParticipants increases by a factor of \(3\), then the discovery traffic in your Secure Domain will be around \(1.5\cdot N\cdot (N-1)\) times the unsecure discovery traffic of a single pair (\(3\) times the traffic for each of the \(N\cdot (N-1)/2\) pairs), where \(N\) is the number of participants in your Secure Domain.
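To see how quickly this grows, the following sketch counts participant pairs and applies the approximate \(3\times\) per-pair factor quoted above. The result is expressed in units of the unsecure discovery traffic of a single pair. The function names are hypothetical, and the factor is only the rough figure given in this section, not a measured value.

```python
def participant_pairs(num_participants: int) -> int:
    """Number of distinct DomainParticipant pairs: N * (N - 1) / 2."""
    return num_participants * (num_participants - 1) // 2


def secure_discovery_traffic_units(num_participants: int, per_pair_factor: float = 3.0) -> float:
    """Total secure discovery traffic, in units of one unsecure pair's traffic.

    With the default factor of 3 this equals 1.5 * N * (N - 1).
    """
    return per_pair_factor * participant_pairs(num_participants)


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, participant_pairs(n), secure_discovery_traffic_units(n))
```

Because the pair count is quadratic in \(N\), going from 10 to 100 participants multiplies the discovery traffic by roughly 110, not 10.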

The factors that you can tune and that will have the biggest impact are covered below.

10.2.4.2. Contents of the Identity Certificates

During the Authentication process, DomainParticipants exchange their Identity Certificates (as specified in the dds.sec.auth.identity_certificate property in Table 4.1). These artifacts are X.509 digital certificates in PEM format that may include a human-readable part and the Base64 representation of the certificate. By default, the Builtin Security Plugins trim out the human-readable part of the certificate before sending it to save some bandwidth. You may want to have the human-readable part sent for debugging purposes. You can control this behavior with the authentication.propagate_simplified_identity_certificate property.

10.2.4.3. Contents of Your Permissions Documents

During the Authentication process, DomainParticipants exchange their Permissions Document (as specified in the dds.sec.access.permissions property in Table 4.1). The Permissions Document is specified in an XML file that defines the access control permissions per domain and per Topic for individual DomainParticipants. The Permissions CA must sign the Permissions Document. Hence, the Permissions Document is sent “as is” on the wire so that other DomainParticipants can verify the signature.

You can potentially set the same Permissions Document across all of the participants in your system. For example, you have P1 load a Permissions Document that includes permissions for both P1 and P2. Then, you can have P2 load the same Permissions Document.

Having the same Permissions Document across all the participants in your system can be handy for prototyping. However, it requires sending the same Permissions Document on the network during each authentication.

A better option would be to define the permissions so that you use a different file for each of your participants. The file for P1 contains only the permissions for P1, and the file for P2 contains only the permissions for P2. This way, you will reduce network traffic by not sending unnecessary information, especially if you have a big system with hundreds of participants.

Selecting the right granularity for your Permissions Documents has a big impact on scalability. For example, compare the following scenarios in a system with \(100\) participants.

Each participant has its own Permissions Document containing only one grant: each participant sends its permissions to every other participant (\(100\times 1\times 99 = 9,900\) permission grants exchanged).

All the participants have the same Permissions Document: each participant sends around \(N\) times its permissions to every other participant (\(100\times 100\times 99 = 990,000\) permission grants exchanged).

Assuming that the permission grant for a single participant is around \(1 \mathrm{kB}\) in size, with only \(100\) participants you can reduce the total network load from \(990 \mathrm{MB}\) to around \(10 \mathrm{MB}\), saving up to \(980 \mathrm{MB}\) of network traffic.

As a general rule, \(N\times (N-1)\) Permissions Documents are sent on your network (where \(N\) is the number of DomainParticipants in your Secure Domain).
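The comparison above can be reproduced with a few lines of arithmetic. This sketch assumes, as in the example, that every grant is about \(1 \mathrm{kB}\) and that \(1 \mathrm{MB} = 1000 \mathrm{kB}\); the function names are hypothetical.

```python
def grants_exchanged(num_participants: int, grants_per_document: int) -> int:
    """Each participant sends its document to every other participant."""
    return num_participants * grants_per_document * (num_participants - 1)


def permissions_traffic_mb(num_participants: int, grants_per_document: int,
                           grant_size_kb: float = 1.0) -> float:
    """Approximate startup traffic for Permissions Documents, in MB."""
    total_kb = grants_exchanged(num_participants, grants_per_document) * grant_size_kb
    return total_kb / 1000.0


if __name__ == "__main__":
    n = 100
    # One grant per document versus one shared document holding all N grants.
    print(grants_exchanged(n, 1), permissions_traffic_mb(n, 1))
    print(grants_exchanged(n, n), permissions_traffic_mb(n, n))
```

For 100 participants, this yields 9,900 grants (about 9.9 MB) with per-participant documents and 990,000 grants (about 990 MB) with a single shared document.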

10.2.4.4. Impact of Different Protection Kinds

If you use Discovery Protection, some parts of the discovery process will require additional cryptographic operations (see discovery_protection_kind (domain_rule)). In this case, part of the messages sent during discovery will be protected with Submessage Protection (see Submessage Protection), at the level specified by the discovery_protection_kind Governance Rule.

Also, when you set different protection kinds, symmetric keys need to be exchanged, which will be sent on the network through the Secure Key Exchange Channel. For instance, additional Receiver-specific keys are exchanged when you use Origin Authentication Protection. See Impact of Key Exchange on Scalability at Startup, where we explain which protection kinds require sending which keys.

Here is a summary of the keys that need to be generated and exchanged:

ENCRYPT and SIGN protections will require exchanging one key per DataWriter and DataReader of the Topic being protected. By default, this is independent of where you apply the protection (see User-Defined Secure Endpoints).

Protection kinds ending in _WITH_ORIGIN_AUTHENTICATION will require exchanging one additional key per DataWriter and DataReader of the Topic being protected.
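The summary can be encoded as a small lookup. The sketch below is illustrative only: the function name is hypothetical, it assumes the default configuration in which Submessage and Serialized Data Protection share one key, and it treats NONE as requiring no key.

```python
ORIGIN_AUTHENTICATION_SUFFIX = "_WITH_ORIGIN_AUTHENTICATION"


def keys_per_endpoint(protection_kind: str) -> int:
    """Keys exchanged per DataWriter or DataReader for a protection kind.

    Assumptions: NONE needs no key; SIGN and ENCRYPT need one key;
    kinds ending in _WITH_ORIGIN_AUTHENTICATION need one additional
    (receiver-specific) key.
    """
    if protection_kind == "NONE":
        return 0
    if protection_kind in ("SIGN", "ENCRYPT"):
        return 1
    if protection_kind.endswith(ORIGIN_AUTHENTICATION_SUFFIX):
        base_kind = protection_kind[: -len(ORIGIN_AUTHENTICATION_SUFFIX)]
        if base_kind in ("SIGN", "ENCRYPT"):
            return 2
    raise ValueError(f"unknown protection kind: {protection_kind}")


if __name__ == "__main__":
    for kind in ("NONE", "SIGN", "ENCRYPT", "SIGN_WITH_ORIGIN_AUTHENTICATION"):
        print(kind, keys_per_endpoint(kind))
```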

10.3. Security Plugins Impact on Scalability and Performance During Steady State

The Security Plugins’ impact on performance will depend on multiple factors, including the security requirements of your systems, hardware considerations, and interactions with other Connext features.

10.3.1. Overhead of the Different Protection Kinds

When an application uses security, the contents of the RTPS packets will be different. For example, cryptographic metadata will be added around the protected parts, which may be encrypted depending on the configured protection.

Protecting your data will always have an overhead. Depending on what you protect (user data, metadata, RTPS packet, liveliness, etc.), different parts of the RTPS packets will change.

The following sections discuss the packet growth that you can expect for each of the protection kinds.

10.3.1.1. Discovery Protection

You can enable Discovery Protection by setting the discovery_protection_kind Governance Rule to a value other than NONE.

The greatest impact of enabling Discovery Protection for a Topic is at startup, since the discovery data for that Topic will be sent protected (increased CPU and network usage, as explained in Builtin Secure Endpoints). Still, it has some impact on steady state, since TopicQuery requests related to your Topic will be exchanged through the Builtin Secure ServiceRequest Topic, whose configuration is controlled the same way as Builtin Secure Discovery Endpoints (see Builtin Secure Discovery Endpoints and Interaction with Topic Queries).

You could also modify some of your DataWriter/DataReader QoS policies (such as partitions), or a network change could indirectly trigger a change in the DataWriter/DataReader information. In this situation, the policies need to be propagated. If Discovery Protection is enabled for your Topic, the endpoint declarations used to propagate the QoS will be sent using the Builtin Secure Discovery Endpoints (see Builtin Secure Discovery Endpoints). These endpoints will apply RTPS Submessage Protection to the level you configure with the discovery_protection_kind Governance Rule, increasing both CPU usage and network usage. (For the overhead caused by the Submessage Protection, see Submessage Protection.)

Finally, you could modify some of your DomainParticipantQos policies. If Discovery Protection is enabled, the participant declarations used to propagate the QoS will be sent using the Builtin Secure Discovery Endpoints (see Builtin Secure Discovery Endpoints). Note that participant declarations are also sent on IP mobility events (and these will be protected if Discovery Protection is enabled). For further information, see RTPS Protocol Changes to Support Secure Entities Traffic.

10.3.1.2. Liveliness Protection

You can enable Liveliness Protection by setting the liveliness_protection_kind Governance Rule to a value other than NONE.

When you enable Liveliness Protection for a Topic, liveliness information related to your Topic will be exchanged through the Builtin Secure Liveliness Endpoints (only if the LIVELINESS QosPolicy is set to AUTOMATIC or MANUAL_BY_PARTICIPANT). These endpoints will apply RTPS Submessage Protection to the level you configure with the liveliness_protection_kind Governance Rule, increasing both CPU usage and network usage. (For the overhead caused by the Submessage Protection, see Submessage Protection.) For further information, see RTPS Protocol Changes to Support Secure Entities Traffic.

10.3.1.3. Serialized Data Protection

You can enable Serialized Data Protection by setting the data_protection_kind Governance Rule to a value other than NONE.

When you enable Serialized Data Protection, data in the DataWriter’s queue will be protected before encapsulating it in an RTPS Submessage. Therefore, user data is only protected once and retransmissions do not need re-encryption.

The length of the data field increases (in the RTPS Submessage) because the serializedData field now includes a header and a footer with cryptographic information. The Crypto Header includes information such as an identifier for the key used to encrypt the payload. The Crypto Footer includes the generated message authentication code (MAC). This extra information allows the receiver to decrypt the message (when needed) and verify its integrity. See Security Protections Applied by DDS Entities and RTPS Protocol Changes to Support Secure Entities Traffic.

More concretely, each sample (i.e., DATA submessage) sent by your DataWriters will have an additional 40 bytes (in the case of SIGN protection) or 44 bytes (in the case of ENCRYPT protection), as shown in Table 10.2. This is independent of the sample length.

Note that, in some cases, this overhead could trigger fragmentation for samples that were just below the fragmentation limit before applying the protection.

| Element | Extra bytes when using SIGN | Extra bytes when using ENCRYPT |
|---|---|---|
| Crypto Header | 20 | 20 |
| Crypto Content | 0 | 4 |
| Crypto Footer | 20 | 20 |
| Total | 40 | 44 |
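As a rough illustration of the figures above, the following sketch adds the per-sample overhead to a sample size and checks whether the protection alone would push a sample over a fragmentation limit. The function names and the `fragmentation_limit` parameter are hypothetical and are not part of any Connext API; the actual limit depends on your transport configuration.

```python
# Per-sample overhead of Serialized Data Protection, in bytes (Table 10.2).
# Each entry is Crypto Header + Crypto Content + Crypto Footer.
SERIALIZED_DATA_OVERHEAD = {
    "NONE": 0,
    "SIGN": 20 + 0 + 20,
    "ENCRYPT": 20 + 4 + 20,
}


def protected_payload_size(sample_size, protection_kind):
    """Return the size of the serialized data once protection is applied."""
    return sample_size + SERIALIZED_DATA_OVERHEAD[protection_kind]


def newly_fragmented(sample_size, protection_kind, fragmentation_limit):
    """True if the sample fit under the limit before protection but not after.

    fragmentation_limit is a hypothetical input, in bytes.
    """
    fits_before = sample_size <= fragmentation_limit
    fits_after = (
        protected_payload_size(sample_size, protection_kind) <= fragmentation_limit
    )
    return fits_before and not fits_after
```

For example, with an assumed limit of 1400 bytes, a 1380-byte sample would start to fragment once SIGN or ENCRYPT is applied, while a 1000-byte sample would not.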

10.3.1.4. Submessage Protection

You can enable Submessage Protection by setting the metadata_protection_kind Governance Rule to a value other than NONE.

Submessage Protection applies to messages sent both by the DataWriter and the DataReader. Secure Endpoints will protect submessages right before putting them on the network. Note that a batch containing multiple samples is considered a single submessage. Refer to Submessage Protection for a comprehensive list of all protected submessages.

Note that Submessage Protection implies adding a Secure Prefix Submessage and a Secure Postfix Submessage to the RTPS message. For further details, see Security Protections Applied by DDS Entities and RTPS Protocol Changes to Support Secure Entities Traffic.

The growth in size caused by Submessage Protection is slightly higher than with Serialized Data Protection. However, note that Submessage Protection applies to submessages sent by both DataWriters and DataReaders for the Topic being protected. Therefore, each submessage protected by your DataWriters or DataReaders will have an additional 48 bytes (in the case of SIGN) or 56 bytes (in the case of ENCRYPT), as shown in Table 10.3. This overhead is independent of the sample length.

If you enable Origin Authentication Protection (with SIGN_WITH_ORIGIN_AUTHENTICATION or with ENCRYPT_WITH_ORIGIN_AUTHENTICATION), each submessage that your DataWriters and DataReaders protect will have an additional 20 bytes in the Crypto Footer per matching DataReader/DataWriter. This overhead is limited by the cryptography.max_receiver_specific_macs property (see Table 6.8).

| Element | Extra bytes when using SIGN | Extra bytes when using ENCRYPT | Extra bytes when using Origin Authentication (additional) |
|---|---|---|---|
| SEC_PREFIX | 24 | 24 | 0 |
| SEC_BODY | 0 | 8 | 0 |
| SEC_POSTFIX | 24 | 24 | 20 per matching entity |
| Total | 48 | 56 | 20 per matching entity |

This overhead is limited by the cryptography.max_receiver_specific_macs property (see Table 6.8).
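The per-submessage overhead can be estimated with a short calculation. The sketch below is only an approximation: it assumes that the number of Receiver-Specific MACs is the smaller of the number of matching entities and the value of cryptography.max_receiver_specific_macs. The function name is hypothetical.

```python
# Base overhead of Submessage Protection, in bytes (SEC_PREFIX + SEC_BODY + SEC_POSTFIX).
SUBMESSAGE_OVERHEAD = {"SIGN": 24 + 0 + 24, "ENCRYPT": 24 + 8 + 24}
RECEIVER_SPECIFIC_MAC_BYTES = 20
ORIGIN_AUTH_SUFFIX = "_WITH_ORIGIN_AUTHENTICATION"


def submessage_overhead(protection_kind, matching_entities=0,
                        max_receiver_specific_macs=0):
    """Estimate the extra bytes added to one protected submessage."""
    origin_auth = protection_kind.endswith(ORIGIN_AUTH_SUFFIX)
    if origin_auth:
        base_kind = protection_kind[: -len(ORIGIN_AUTH_SUFFIX)]
    else:
        base_kind = protection_kind
    total = SUBMESSAGE_OVERHEAD[base_kind]
    if origin_auth:
        # Assumption: the MAC count is capped by max_receiver_specific_macs.
        macs = min(matching_entities, max_receiver_specific_macs)
        total += RECEIVER_SPECIFIC_MAC_BYTES * macs
    return total
```

Under these assumptions, ENCRYPT_WITH_ORIGIN_AUTHENTICATION with three matching entities and a cap of eight adds 56 + 3 × 20 = 116 bytes per submessage.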

10.3.1.5. RTPS Protection

You can enable RTPS Protection by setting the rtps_protection_kind Governance Rule to a value other than NONE.

RTPS Protection affects the DomainParticipant. It applies to every packet that is sent, protecting the whole RTPS message right before putting it on the network. The exceptions to RTPS Protection are:

Traffic during the initial Participant Discovery used in the bootstrapping, which is never protected.

Traffic of the Authentication Builtin Topic (ParticipantStatelessMessage), which is never protected.

Traffic of the Secure Key Exchange Builtin Topic (ParticipantVolatileMessageSecure), which uses ENCRYPT protection at the Submessage level instead.

For further details, see Security Protections Applied by DDS Entities and RTPS Protocol Changes to Support Secure Entities Traffic.

RTPS Protection implies a growth in every RTPS message that your DomainParticipants send on the Secure Domain [5]. Each RTPS message sent by your participants will have an additional 72 bytes (in the case of SIGN) or 80 bytes (in the case of ENCRYPT), as shown in Table 10.4. This is independent of the sample length.

If the cryptography.enable_additional_authenticated_data property (see Table 6.8) is set to FALSE, then the contents will include a copy of the RTPS header (20 bytes); for more information, see RTPS Protection. Therefore, if Additional Authenticated Data is disabled, each RTPS message will have a total of 92 extra bytes (in the case of SIGN) or 100 extra bytes (in the case of ENCRYPT).

If you enable Origin Authentication Protection (with SIGN_WITH_ORIGIN_AUTHENTICATION or with ENCRYPT_WITH_ORIGIN_AUTHENTICATION), each RTPS message sent by your participant will have an additional 20 bytes in the Crypto Footer per receiver. This overhead is limited by the cryptography.max_receiver_specific_macs property (see Table 6.8).

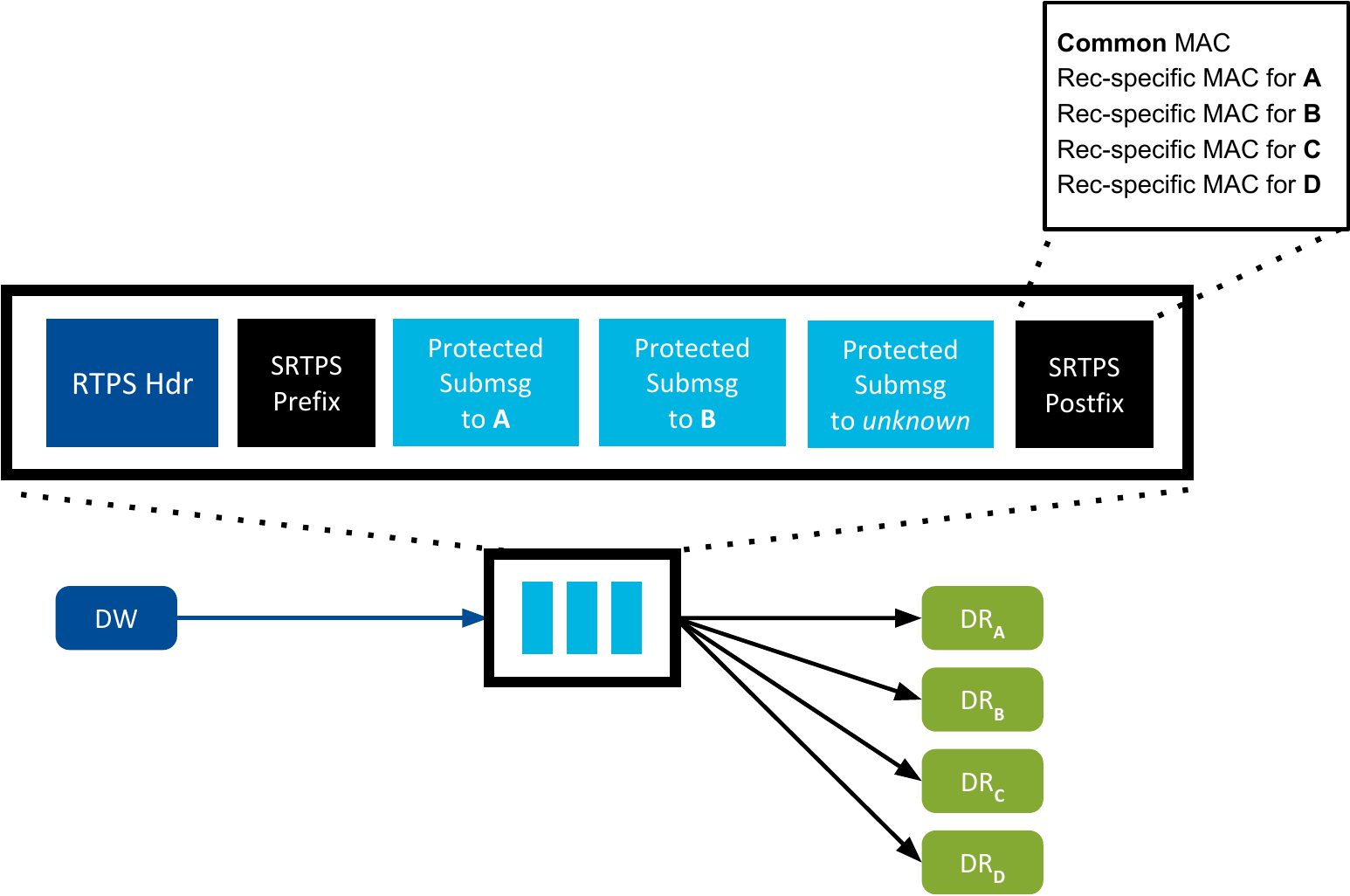

The number of Receiver-Specific MACs in an RTPS message is the union of the Receiver-Specific MACs each of its submessages would need. For example, assume that you have an RTPS message with three submessages. The first submessage is directed to a receiver A, the receiver of the second submessage is B, and the receiver of the third submessage is unknown. In this situation, if Submessage Protection was set to protect the Origin Authentication, the first submessage would have the receiver-specific MAC for A, the second submessage would have the receiver-specific MAC for B, and the third submessage would have the receiver-specific MAC for all the matching Endpoints, let’s say A, B, C, and D. Therefore, to satisfy the requirements of every submessage, the RTPS message will include the following Receiver-Specific MACs:

Figure 10.2 Receiver-Specific MACs Included in an RTPS Message that Uses RTPS Protection with Origin Authentication
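The union described in this example can be reproduced with a few lines of code. This is only a sketch of the set arithmetic, using the receiver names A, B, C, and D from the example; the function name is hypothetical, and nothing here reflects how the plugins compute the MACs internally.

```python
def rtps_message_mac_receivers(submessage_receivers):
    """Return the receivers that need a Receiver-Specific MAC in the RTPS message.

    submessage_receivers holds one set of receivers per submessage.
    """
    return sorted(set().union(*submessage_receivers))


# Submessage 1 targets A, submessage 2 targets B, and submessage 3 has an
# unknown receiver, so it needs MACs for all matching Endpoints (A, B, C, D).
receivers = rtps_message_mac_receivers([{"A"}, {"B"}, {"A", "B", "C", "D"}])
print(receivers)  # ['A', 'B', 'C', 'D']
```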

| Element | Extra bytes when using SIGN | Extra bytes when using ENCRYPT | Extra bytes when using Origin Authentication (additional) |
|---|---|---|---|
| SRTPS_PREFIX | 24 | 24 | 0 |
| SRTPS_BODY | 24 | 32 | 0 |
| SRTPS_POSTFIX | 24 | 24 | 20 per matching entity |
| Total | 72 | 80 | 20 per matching entity |

This overhead is limited by the cryptography.max_receiver_specific_macs property (see Table 6.8).

The RTPS messages that are exempt from RTPS Protection are covered in DomainParticipants.
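The RTPS Protection figures in this section can be combined into one estimate. The sketch below is an approximation under two assumptions: that disabling Additional Authenticated Data adds exactly the 20-byte copy of the RTPS header, and that the number of Receiver-Specific MACs is capped by cryptography.max_receiver_specific_macs. The function and parameter names are hypothetical.

```python
# Base overhead of RTPS Protection, in bytes
# (SRTPS_PREFIX + SRTPS_BODY + SRTPS_POSTFIX).
RTPS_OVERHEAD = {"SIGN": 24 + 24 + 24, "ENCRYPT": 24 + 32 + 24}
RTPS_HEADER_COPY_BYTES = 20
RECEIVER_SPECIFIC_MAC_BYTES = 20
ORIGIN_AUTH_SUFFIX = "_WITH_ORIGIN_AUTHENTICATION"


def rtps_overhead(protection_kind, receivers=0, max_receiver_specific_macs=0,
                  additional_authenticated_data=True):
    """Estimate the extra bytes added to one protected RTPS message."""
    origin_auth = protection_kind.endswith(ORIGIN_AUTH_SUFFIX)
    if origin_auth:
        base_kind = protection_kind[: -len(ORIGIN_AUTH_SUFFIX)]
    else:
        base_kind = protection_kind
    total = RTPS_OVERHEAD[base_kind]
    if not additional_authenticated_data:
        # The protected contents then include a copy of the RTPS header.
        total += RTPS_HEADER_COPY_BYTES
    if origin_auth:
        # Assumption: the MAC count is capped by max_receiver_specific_macs.
        total += RECEIVER_SPECIFIC_MAC_BYTES * min(receivers,
                                                   max_receiver_specific_macs)
    return total
```

For instance, ENCRYPT with Additional Authenticated Data disabled gives 80 + 20 = 100 bytes, which matches the figure quoted in the text.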

10.3.1.6. Origin Authentication Protection

Origin Authentication protection allows the Receiver to make sure that the Sender is who it claims to be (i.e., it can authenticate the origin), even when the Sender is communicating with multiple Receivers via multicast and shares the same encryption key with all of them.

You can add Origin Authentication protection to Submessage Protection by setting the metadata_protection_kind Governance Rule to SIGN_WITH_ORIGIN_AUTHENTICATION or ENCRYPT_WITH_ORIGIN_AUTHENTICATION.

You can add Origin Authentication protection to RTPS Protection by setting the rtps_protection_kind Governance Rule to SIGN_WITH_ORIGIN_AUTHENTICATION or ENCRYPT_WITH_ORIGIN_AUTHENTICATION.

This protection involves computing additional Receiver-Specific MACs with a secret key that the Sender shares only with one Receiver. More concretely, the Crypto Footer will contain 1 additional MAC for every receiver targeted by the message. For example, if a DataWriter sends a message to 10 different DataReaders (in 10 different DomainParticipants), you can expect 10 additional MACs added to the Crypto Footer. Note that Receivers in the same remote DomainParticipant will share the Receiver-specific Key and therefore, a single Receiver-Specific MAC will be added for all of them. You can limit the number of Receiver-Specific MACs that are appended to a message by tuning the cryptography.max_receiver_specific_macs property. For further information, see Origin Authentication Protection Implications.

Note that the major implication of this protection is that Receiver-Specific MACs will be added to the packet, which increases its length.
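To make the counting rule concrete, the following sketch groups matched DataReaders by their remote DomainParticipant and applies the cap. It is illustrative only: the mapping, the names in it, and the assumption that the cap acts as a simple upper bound are all hypothetical.

```python
RECEIVER_SPECIFIC_MAC_BYTES = 20


def receiver_specific_mac_count(reader_to_participant, max_receiver_specific_macs):
    """Count the MACs needed for a set of matched DataReaders.

    reader_to_participant maps each DataReader name to the name of the
    remote DomainParticipant that owns it. Readers in the same participant
    share one Receiver-Specific Key, so they need a single MAC.
    """
    participants = set(reader_to_participant.values())
    return min(len(participants), max_receiver_specific_macs)


# Ten DataReaders in ten different DomainParticipants need ten MACs.
spread_out = {f"reader{i}": f"participant{i}" for i in range(10)}
# Three DataReaders in the same DomainParticipant need only one MAC.
co_located = {"readerA": "p1", "readerB": "p1", "readerC": "p1"}

extra_bytes = RECEIVER_SPECIFIC_MAC_BYTES * receiver_specific_mac_count(spread_out, 16)
```

With an assumed cap of 16, the first case adds 10 × 20 = 200 bytes to the Crypto Footer, while the second adds only 20.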

10.3.1.7. SIGN VS ENCRYPT

ENCRYPT protection is slightly more expensive than SIGN. Since ENCRYPT adds a length field in the Crypto Content, the network usage of ENCRYPT is slightly greater than the network usage of SIGN. The computational cost of ENCRYPT is also higher than SIGN, especially as the sample gets larger.

However, the difference between SIGN and ENCRYPT protections is so small that the impact on throughput and latency is usually minimal. Please note that you can combine SIGN and ENCRYPT by applying different protections at different stages. For example, you can potentially protect the integrity and confidentiality of a particular Topic’s payload (by setting Serialized Data Protection to ENCRYPT for that Topic) while protecting the integrity of all the traffic (by setting RTPS Protection to SIGN). In this scenario, your application will require \(\sim 2 \times\) CPU time to compute the cryptographic operations, if we compare it with a scenario where a single protection is enabled. Enabling different protections at the same time will certainly impact the throughput and latency of your application.

For further information on what entities are responsible for each protection kind, see Security Protections Applied by DDS Entities.

10.3.2. Factors Impacting Performance and Scalability During Steady State

10.3.2.1. Performance Impact of Different Protection Kinds

The protection kinds determine which cryptographic operations will be applied to which messages and submessages. For instance, protecting the submessages with ENCRYPT_WITH_ORIGIN_AUTHENTICATION requires more operations than protecting the same submessages with SIGN. It is also important to consider what you protect. For example, if you set data_protection_kind, metadata_protection_kind, and rtps_protection_kind to ENCRYPT in a reliable Topic, every sample that your DataWriter publishes will be encrypted three times, and every HEARTBEAT and every ACKNACK sent for that Topic will be encrypted twice. Hence, one of the major performance implications with respect to security is the set of protection kinds configured in your Governance Document.

For best performance, adjust your Governance Document to set the levels of protection that best fit your use case.
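The layering described in this section can be expressed as a simple count. This sketch assumes, as the example does, that Serialized Data Protection only touches user samples (DATA submessages), while Submessage Protection and RTPS Protection touch every submessage sent for the Topic. The function name is hypothetical.

```python
def crypto_passes(submessage_kind, data_protected, submessage_protected,
                  rtps_protected):
    """Count how many times one submessage goes through a cryptographic operation."""
    passes = 0
    # Serialized Data Protection applies to the payload of user samples only.
    if submessage_kind == "DATA" and data_protected:
        passes += 1
    # Submessage Protection applies to each protected submessage.
    if submessage_protected:
        passes += 1
    # RTPS Protection applies to the whole RTPS message carrying the submessage.
    if rtps_protected:
        passes += 1
    return passes
```

With all three protections enabled, this returns 3 for a DATA submessage and 2 for a HEARTBEAT or an ACKNACK, which matches the example in the text.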

10.3.2.2. Interaction Between the Security Plugins and Batching QoS

Payload protection is applied to an entire batch instead of to each individual sample within a batch. Therefore, with Serialized Data Protection, you will achieve the throughput of Submessage Protection when sending live batches without having to re-encode when resending repair batches.

10.3.2.3. Interaction Between the Security Plugins and Multicast

Multicast does not interact with how many times cryptographic operations are called to protect data, but can help reduce the network usage. Unlike TLS transports, which do not allow multicast at all, the Security Plugins can take advantage of multicast to boost the performance of your system. Using multicast along with the Security Plugins will provide a performance boost similar (or identical) to the one that you will have in an unsecure scenario.

In general, when you enable security, each message is protected once (see Interaction with Asynchronous Publishing). Then the protected message is delivered to the transport once per destination. When you enable multicast, the protected sample is sent once to the network, as if it were a single destination, and the network will propagate it to the actual recipients. This is a great performance improvement over TLS, where each sample needs to be protected once per destination, then sent to each destination with multiple socket write operations.

Figure 10.3 Transport Security VS DDS Security
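The comparison can be summarized as a count of protection operations and socket writes per message. The sketch below only restates the behavior described in this section; the mode names are hypothetical labels, not configuration values.

```python
def send_cost(n_destinations, mode):
    """Return (protection operations, socket writes) for one message."""
    if mode == "security_plugins_multicast":
        # Protected once, sent once; the network fans it out.
        return (1, 1)
    if mode == "security_plugins_unicast":
        # Protected once, then delivered to the transport once per destination.
        return (1, n_destinations)
    if mode == "tls":
        # Protected and written once per destination.
        return (n_destinations, n_destinations)
    raise ValueError(f"unknown mode: {mode}")
```

For 50 destinations, this gives (1, 1) with the Security Plugins over multicast, (1, 50) over unicast, and (50, 50) with TLS.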

10.3.2.4. Interaction with Reliability

Serialized Data Protection does not interact with reliability since the protection occurs before putting the samples in the DataWriter’s queue. Therefore, repairing or resending a sample does not require additional cryptographic operations when using Serialized Data Protection.

In contrast, Submessage Protection and RTPS Protection apply the cryptographic operation right before sending the sample to the transport. Therefore, repairing or resending a sample will require additional cryptographic operations, such as re-encrypting it.
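As a rough model of this difference, the sketch below counts the cryptographic operations needed for one sample that is sent once and then resent a given number of times. The protection labels and the function name are hypothetical.

```python
def crypto_operations(protection, resends):
    """Count cryptographic operations for one sample sent 1 + resends times."""
    if protection == "serialized_data":
        # Protected once, before the sample enters the DataWriter's queue.
        return 1
    if protection in ("submessage", "rtps"):
        # Protected right before each send, including every repair.
        return 1 + resends
    raise ValueError(f"unknown protection: {protection}")
```

A sample that is repaired three times costs one operation with Serialized Data Protection and four with Submessage Protection or RTPS Protection.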

10.3.2.5. Scalability Considerations for Origin Authentication Protection

When you use SIGN_WITH_ORIGIN_AUTHENTICATION or ENCRYPT_WITH_ORIGIN_AUTHENTICATION, a Receiver-Specific MAC is added to the protected message for each receiver. As an optimization, this MAC is the same for every matching DataReader within a remote DomainParticipant (i.e., the number of MACs does not grow per DataReader, but per DomainParticipant). Therefore, the higher the number of DomainParticipants in your system, the bigger your RTPS packets will be.

For further details, see Origin Authentication Protection Implications.

10.3.2.6. Interaction with Content Filtered Topics

For Writer-side filtering: if Serialized Data Protection is in use and set to ENCRYPT, the DataWriter’s queue will have samples encrypted. This is done to avoid encrypting each time a sample is sent, such as for repairs. However, this comes with some drawbacks: to apply Writer-side filtering, the DataWriter needs to decrypt the samples in its queue, see if they match the filter, then send the corresponding samples. This is only needed for historical data: live samples are filtered before encrypting (i.e., which locators the sample will be sent to is decided before encrypting).

10.3.2.7. Interaction with Topic Queries

When you enable Discovery Protection for a Topic (by setting enable_discovery_protection to TRUE), TopicQuery requests related to that Topic will be exchanged through the Builtin Secure Service Request Topic. Submessage Protection is applied to this Topic, at the level defined by the discovery_protection_kind Governance Rule (see Builtin Secure Discovery Endpoints).

Protecting TopicQuery requests is needed in order not to leak information about your Topic. For example, in a hospital scenario, if you request information about a particular patient and the request was not protected, the patient name could be leaked.

For further information on the overhead produced by this kind of protection, refer to Submessage Protection.

10.3.2.8. Interaction with Asynchronous Publishing

In general, the following sequence of events is used to protect-then-send a message to multiple destinations:

Serialize the RTPS message with all its submessages.

Only when using Submessage Protection or RTPS Protection: Apply the cryptographic operations to protect the message with the configured level of protection.

Send the protected RTPS message to multiple destinations.

When you enable the Asynchronous Publishing feature (see ASYNCHRONOUS_PUBLISHER QosPolicy (DDS Extension) in the Core Libraries User’s Manual), all this processing is done independently for each destination. This is required to support mechanisms such as flow controllers. Therefore, when Asynchronous Publishing is enabled, sending a message to multiple destinations will require the following steps for each destination:

Serialize the RTPS message with all its submessages.

Only when using Submessage Protection or RTPS Protection: Apply the cryptographic operations to protect the message with the configured level of protection.

Send the protected RTPS message to a single destination.

As you can see, the latter case requires more cryptographic operations.
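The two sequences above differ only in how often the protection step runs. A minimal sketch of that count follows; the function and parameter names are hypothetical.

```python
def protect_operations(n_destinations, asynchronous_publishing,
                       submessage_or_rtps_protection=True):
    """Count how often the protection step runs for one message."""
    if not submessage_or_rtps_protection:
        # The protection step only exists with Submessage or RTPS Protection.
        return 0
    if asynchronous_publishing:
        # Serialize, protect, and send are repeated for each destination.
        return n_destinations
    # Otherwise the message is protected once and sent to all destinations.
    return 1
```

Sending one message to eight destinations therefore costs one protection operation with synchronous publishing and eight with Asynchronous Publishing.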

When you enable Serialized Data Protection, you need to increase DDS_FlowControllerTokenBucketProperty_t::bytes_per_token to include the overhead described in Serialized Data Protection if you want to publish samples at the same frequency.

10.3.2.9. Interaction with Compression

When compression and Serialized Data Protection are enabled, compression will take place first, then the sample will be protected and stored in the DataWriter’s Queue.

The combination of compression, batching and Serialized Data Protection is supported. In this combination, compression is applied to the batch, and then Serialized Data Protection is applied to the compressed batch.

Keep in mind that both compression and protection add a significant CPU overhead with performance penalties to consider, so they only make sense under specific scenarios.
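The order of operations (compress first, then protect) can be illustrated with a size estimate. This sketch uses Python's zlib only as a stand-in compressor and adds the 44-byte ENCRYPT overhead of Serialized Data Protection to the compressed size; it does not perform any encryption, and the function name is hypothetical.

```python
import zlib

# Overhead of Serialized Data Protection with ENCRYPT, in bytes.
ENCRYPT_OVERHEAD_BYTES = 44


def stored_size(serialized_sample):
    """Estimate the size of a compressed-then-protected sample in the queue."""
    compressed = zlib.compress(serialized_sample)  # step 1: compress
    return len(compressed) + ENCRYPT_OVERHEAD_BYTES  # step 2: protect


# A highly compressible 1000-byte payload.
size = stored_size(b"a" * 1000)
```

Because the protection overhead is fixed, it is added on top of whatever size the compressor produces.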

10.3.2.10. Interaction with CRC

Connext provides two different ways to add CRC to every RTPS message sent. The first one is a legacy CRC mechanism sent on a dedicated, RTI-specific RTPS CRC32 submessage (see WIRE_PROTOCOL QosPolicy (DDS Extension) in the Core Libraries User’s Manual). The second option is defined in the RTPS Specification 2.5 and involves a CRC that is optionally transported on the RTPS Header Extension. Both options enable a recipient of the message to verify its integrity and discard it if corruption is detected. Only the CRC in RTPS Header Extension is compatible with Security Plugins whereas the legacy CRC is not compatible with Security Plugins.

The Security Plugins use 16-byte MACs to detect unexpected modifications on the received messages (see Cryptography). Note that the Security Plugins rely on the transport to detect and discard corrupted messages. Therefore, if the Cryptography plugin cannot validate a MAC upon a message reception, it will consider that the message was tampered with, compromising its integrity. This is a more secure approach and also discards corrupted messages.

10.3.2.11. Interaction with Transport UDPv4_WAN

UDP WAN binding pings can be protected with Pre-Shared Key Protection. See Support for RTI Real-Time WAN Transport for details.

10.4. Recommendations for usage with Observability Framework

The Security Plugins can secure DDS communications between RTI Monitoring Library 2.0 and RTI Collector Service (see Support for RTI Observability Framework for further details). If you are planning on using this capability, you should consider the following:

In the Governance Document, do not set allow_unauthenticated_participants to TRUE. RTI Monitoring Library 2.0 does not have the ability to communicate with both authenticated and unauthenticated DomainParticipants at the same time. Setting allow_unauthenticated_participants to TRUE will generate an internal logic overhead that is not necessary for RTI Monitoring Library 2.0.

In the Governance Document, set monitoring_metrics_protection_kind and/or monitoring_logging_protection_kind (at least one of them) to a value different than NONE. If you do not plan to protect any telemetry data, it is better to not use the Security Plugins.