We are developing a distributed system that runs on both Windows and Linux.

We are seeing inconsistent behavior across platforms. On the Windows box, DDS fails to create the data reader, with the following error messages:

REDAFastBufferPool_growEmptyPoolEA: !allocate buffer of 170075168 bytes

REDAFastBufferPool_newWithNotification:!create fast buffer pool buffers

COMMENDFragmentedSampleTableResourcePool_new:!create sampleDataPool

COMMENDLocalReaderRO_init:!create fstResourcePool

COMMENDBeReaderService_createReader:!init ro

PRESPsService_enableLocalEndpointWithCursor:!create Reader in berService

PRESPsService_enableLocalEndpoint:!enable local endpoint

DDSDataReader_impl::createI:ERROR: Failed to auto-enable entity

DDSDomainParticipant_impl::create_datareader:ERROR: Failed to create datareader

TDataReader::narrow:ERROR: Bad parameter: null reader

REDAFastBufferPool_growEmptyPoolEA: !allocate buffer of 382669128 bytes

REDAFastBufferPool_newWithNotification:!create fast buffer pool buffers

PRESTypePluginDefaultEndpointData_createWriterPool:!create writer buffer pool

We did some research and found out about RTI Bug 13771, which is discussed in the troubleshooting section of the RTI Monitoring Library Getting Started guide: http://community.rti.com/rti-doc/500/ndds.5.0.0/doc/pdf/RTI_Monitoring_Library_GettingStarted.pdf

We made the required changes and set the initial sample size to 1, but the issue is not resolved. On the Linux box we are not seeing any issues. Please advise how to resolve this, or at least how to start debugging the issue and finding out what is causing it.

Hello jazaman,

One possibility is that we are preallocating large amounts of memory for queues of your data types rather than the Monitoring data types. RTI Connext DDS allocates initial queue memory as follows:

Maximum serialized size of your data type (assuming that all strings/sequences are at max length) x resource_limits.initial_samples

This is not usually an issue if your data types have no sequences or unions in them. But if you have a complex union, or a sequence of sequences that all have a large max length, the total memory allocation can become very big. One way to check the maximum size of your data type is to use Monitor's Types View, which shows the maximum size of every data type in your system.
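To make the arithmetic concrete, here is a rough sketch in Python. The type shape (a sequence of up to 1000 sequences of up to 1000 8-byte values) and the sample counts are hypothetical, and the estimate ignores the length headers and alignment padding that real serialization adds, so treat the numbers as a lower bound rather than what the middleware actually allocates.

```python
def nested_sequence_max_bytes(outer_max: int, inner_max: int, element_size: int) -> int:
    """Rough upper payload size of a sequence of sequences when every
    sequence is filled to its maximum length (headers/padding ignored)."""
    return outer_max * inner_max * element_size


def preallocated_bytes(max_serialized_size: int, initial_samples: int) -> int:
    """Initial queue memory: max serialized size x resource_limits.initial_samples."""
    return max_serialized_size * initial_samples


if __name__ == "__main__":
    # Hypothetical type: sequence<sequence<double, 1000>, 1000>
    max_size = nested_sequence_max_bytes(outer_max=1000, inner_max=1000, element_size=8)

    # Hypothetical initial_samples values, for comparison only.
    for initial_samples in (1, 32):
        total = preallocated_bytes(max_size, initial_samples)
        print(f"initial_samples={initial_samples}: {total / 1e6:.0f} MB preallocated")
```

Even a modest-looking type can reach several megabytes per sample once every nested sequence is assumed to be full, and the queue multiplies that by the number of initial samples.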

To open the types view, click this:

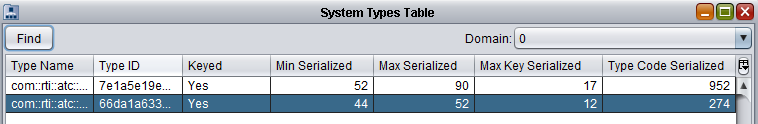

The Types View looks like this, and the "Max Serialized" column is the maximum size of my data types:

If the maximum size of your data types is very large, the next step is to see if you can decrease the maximum sizes of sequences. If you cannot, there are additional steps you can take.

I don't know of any reason why you would see much larger memory usage on Windows than on Linux, unless you are loading a different QoS profile. It's also possible that you are still seeing high memory usage, but it isn't running out of memory on Linux.

Thank you,

Rose

Hello Rose,

You are right that the type includes a lot of sequences. I used Monitor and found that the Max Serialized size is about 42 MB for this particular type. However, I still do not understand why the Linux machine copes with it while the Windows machine displays errors. Both machines have 8 GB of total memory, and when the software module that uses this type was running, nearly half of that memory was free.

The initial sample size was set to 1, but that did not resolve the issue. I also had to reduce the sample_per_instance and max_samples sizes to get it to work, but that is not feasible for us.

Would you point out where the memory limitations are placed?

You also mentioned that there are other steps we can take. Please point me in the right direction, as changing the type would require a system-wide code update.

Regards,

Jamil Anwar Zaman

Senior Software Engineer

Exelis C4i Inc

Hello Jamil,

These are the next steps you should take:

    <datawriter_qos>
        <property>
            <value>
                <element>
                    <name>dds.data_writer.history.memory_manager.fast_pool.pool_buffer_max_size</name>
                    <!-- Typical size of your data type. -->
                    <value>4096</value>
                </element>
            </value>
        </property>
    </datawriter_qos>

Thank you,

Rose

Thanks Rose, reducing the pool seems to have resolved the issue.

jazaman